Making a legacy cloud-native, the Skyloud testimonials

Discover how Mon Petit Placement, a French startup, migrated its infrastructure to the cloud, and more specifically to Kubernetes, and how it made its legacy cloud-native.

A year ago, WeScale had the idea to create a coding game oriented towards DevOps and Infra-as-Code: a treasure hunt that could amuse experts and push beginners to improve their skills by solving technical puzzles.

The Abhra Shambhala project started in March 2021, driven by a passion for sharing technical knowledge in a fun way that also challenges participants, and us as well. At the time of writing, 235 people have taken part in our treasure hunt, and 22 have reached the finish line.

Let's take a look at the project in more detail, without spoiling the game.

The primary goal of the game is to familiarize players with git, Docker, Helm manifests, and automated deployment pipelines.

To build a successful coding game platform, we needed to prevent users from modifying the build through pull requests, for obvious security reasons, and to be able to deploy and destroy the platform quickly. Continuous deployment of our application components was also a must-have, so we could ship fixes quickly when problems arose. Spoiler alert: there are always problems when building a technical treasure hunt game.
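One way to enforce the first requirement, shown here as a hypothetical sketch rather than the project's actual mechanism, is to reject any pull request that touches the files defining the build. The protected patterns and function names below are assumptions made for illustration.

```python
from fnmatch import fnmatch

# Hypothetical list of paths that define the build; not taken from the original project.
PROTECTED_PATTERNS = [".drone.yml", "Makefile", "playbooks/*", "terraform/*"]


def protected_changes(changed_files: list[str]) -> list[str]:
    """Return the changed paths that match a protected pattern."""
    # fnmatch's "*" also matches "/", so "playbooks/*" covers nested files too.
    return [
        path
        for path in changed_files
        if any(fnmatch(path, pattern) for pattern in PROTECTED_PATTERNS)
    ]


def pull_request_allowed(changed_files: list[str]) -> bool:
    """Accept a pull request only if it leaves the build definition untouched."""
    return not protected_changes(changed_files)


if __name__ == "__main__":
    pr_files = ["answers/step1.txt", ".drone.yml"]
    print(protected_changes(pr_files))     # ['.drone.yml']
    print(pull_request_allowed(pr_files))  # False
```

In practice such a check would run in CI against the list of files changed by the pull request and fail the pipeline when it returns `False`.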

We also obviously wanted to have fun while testing a complete stack on a concrete project, and to draw players into the story.

The toolbox used to deploy and maintain this architecture is composed of:

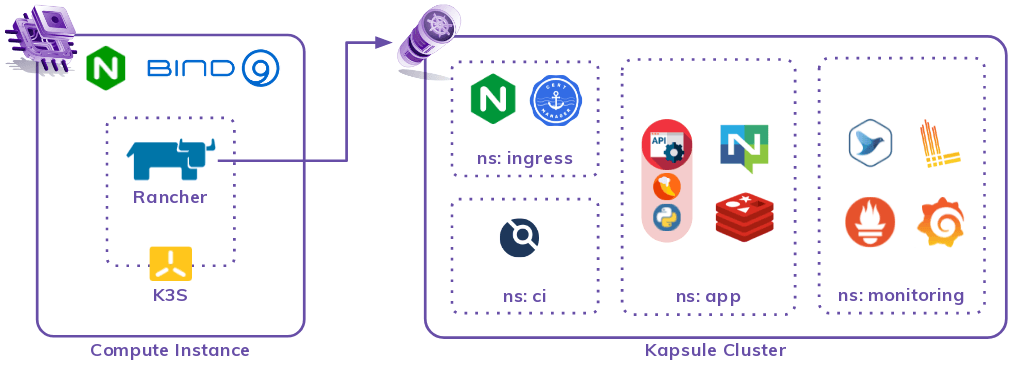

The final architecture revolves around two primary resources: a compute instance and a Kapsule cluster.

The cornerstone of the platform is a simple Instance running on Debian 11 with:

For more flexibility in deployments, we delegated a subdomain to a bind9 daemon, which serves as our authoritative DNS server. The DNS records of the application deployments we expose are managed there, with updates pushed by an Ansible playbook.
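One simple way a playbook can push such updates is by templating the zone file. The Python sketch below assumes that approach (the article does not say which mechanism the playbook uses): it renders BIND zone-file A records for the exposed deployments and derives a date-based serial so secondaries notice the change. The subdomain, record names, addresses, and helper names are hypothetical.

```python
from datetime import date


def next_serial(current: int, today: date) -> int:
    """Return a YYYYMMDDnn zone serial strictly greater than `current`."""
    base = int(today.strftime("%Y%m%d")) * 100
    # Several updates on the same day just bump the two-digit revision counter.
    return max(base, current + 1)


def render_zone(origin: str, serial: int, records: dict[str, str], ns_address: str, ttl: int = 300) -> str:
    """Render a minimal BIND zone file with one A record per exposed deployment."""
    lines = [
        f"$ORIGIN {origin}.",
        f"$TTL {ttl}",
        # SOA timers: refresh, retry, expire, negative-caching TTL (in seconds).
        f"@ IN SOA ns1.{origin}. hostmaster.{origin}. ({serial} 3600 600 604800 300)",
        f"@ IN NS ns1.{origin}.",
        f"ns1 IN A {ns_address}",
    ]
    for name, address in sorted(records.items()):
        lines.append(f"{name} IN A {address}")
    return "\n".join(lines) + "\n"
```

After writing the rendered file, the playbook would reload the zone, for example with `rndc reload`.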

We installed Rancher on this server rather than on Kapsule to allow an easier switch between Kapsule clusters without reinstalling Rancher.

Rancher is deployed with Helm charts, and we maintain a local single-node K3S cluster to serve as Rancher’s execution platform.

To expose the Rancher service, we installed Nginx as a reverse proxy that forwards traffic to a local port and holds the TLS certificates. The K3S APIs are not exposed externally and are only accessed locally via Ansible.
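For readers less familiar with this pattern, here is a minimal Python sketch that renders an Nginx server block of the kind described: Nginx terminates TLS and proxies requests to a port on localhost. The hostname, certificate paths, and port are placeholders, and the WebSocket upgrade headers are included on the assumption that the proxied UI needs them, as Rancher's does.

```python
def render_nginx_proxy(server_name: str, upstream_port: int, cert: str, key: str) -> str:
    """Render an Nginx server block that terminates TLS and proxies to localhost."""
    if not 0 < upstream_port < 65536:
        raise ValueError(f"invalid port: {upstream_port}")
    # Doubled braces are literal braces in the generated Nginx configuration.
    return f"""server {{
    listen 443 ssl;
    server_name {server_name};

    # The certificates live on the proxy, not in the proxied service.
    ssl_certificate     {cert};
    ssl_certificate_key {key};

    location / {{
        proxy_pass http://127.0.0.1:{upstream_port};
        proxy_set_header Host $host;
        proxy_set_header X-Forwarded-Proto https;
        # Let WebSocket upgrades pass through the proxy.
        proxy_http_version 1.1;
        proxy_set_header Upgrade $http_upgrade;
        proxy_set_header Connection "upgrade";
    }}
}}
"""
```

Binding the upstream to `127.0.0.1` keeps the service reachable only through the proxy, which matches the idea of exposing nothing but Nginx.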

Once Rancher is deployed, any cluster imported into its management scope can receive Rancher tooling, an observability stack detailed in the next section.

Now that the base has been deployed, we can create a Kapsule Kubernetes cluster through an Ansible playbook that drives Terraform to create the cluster, retrieves the useful outputs, and launches a second action to import the cluster into Rancher.
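The first steps of that playbook amount to a classic Terraform run followed by reading its outputs. As a rough Python equivalent (the article's version is an Ansible playbook, which is not shown), this sketch drives the `terraform` CLI with `-chdir`, `init`, `apply -auto-approve`, and `output -json`, then flattens the outputs so a later step, such as the Rancher import, can consume them. The working directory and output names are hypothetical, and the command runner is injectable so the flow can be exercised without Terraform installed.

```python
import json
import subprocess
from typing import Callable

Runner = Callable[[list[str]], str]


def run_cli(args: list[str]) -> str:
    """Run a command, raise on a non-zero exit code, and return its stdout."""
    return subprocess.run(args, check=True, capture_output=True, text=True).stdout


def apply_and_read_outputs(workdir: str, run: Runner = run_cli) -> dict:
    """Create the infrastructure described in `workdir` and return its outputs as plain values."""
    tf = ["terraform", f"-chdir={workdir}"]
    run(tf + ["init", "-input=false"])
    run(tf + ["apply", "-auto-approve", "-input=false"])
    raw = json.loads(run(tf + ["output", "-json"]))
    # `terraform output -json` wraps each value as {"sensitive": ..., "type": ..., "value": ...}.
    return {name: meta["value"] for name, meta in raw.items()}
```

Keeping the runner as a parameter mirrors the playbook's split between "create the cluster" and "use its outputs": the second stage only sees a plain dictionary.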

When a cluster is imported, Rancher deploys its probes and graphical interface to inspect it. This gives us the tools to view the logs of each pod's workload and open a terminal in any pod for troubleshooting.

A final Terraform piloting playbook is used to deploy:

Phew! Once all this has been deployed, we have a sound, well-equipped base to host the application part. We won't detail the application here so as not to spoil the coding game for future participants!

We’ll just tell you that there is a continuous deployment component built on Drone (which does the job brilliantly).

Automation and reproducibility are central to our work. Once the code base is mature, an environment can be set up with two commands, each of which runs a series of playbooks.

`make core`

`make kapsule`
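Conceptually, each target is an ordered list of playbooks run until one fails. The Python sketch below mirrors that idea; the playbook file names and inventory path are hypothetical, since the article does not list them, and `ansible-playbook -i <inventory> <playbook>` is the only CLI usage assumed. With `dry_run=True` it only returns the commands it would run.

```python
import subprocess

# Hypothetical mapping of targets to playbooks; the real file names are not published.
TARGETS = {
    "core": ["instance.yml", "dns.yml", "rancher.yml", "reverse_proxy.yml"],
    "kapsule": ["kapsule_cluster.yml", "kapsule_tooling.yml"],
}


def run_target(target: str, inventory: str = "inventory.ini", dry_run: bool = False) -> list[list[str]]:
    """Run the playbooks for `target` in order, stopping at the first failure."""
    if target not in TARGETS:
        raise ValueError(f"unknown target: {target}")
    commands = [["ansible-playbook", "-i", inventory, playbook] for playbook in TARGETS[target]]
    if not dry_run:
        for cmd in commands:
            # check=True raises CalledProcessError, so later playbooks never run after a failure.
            subprocess.run(cmd, check=True)
    return commands
```

Running the `core` target before `kapsule` matters because the second one relies on the DNS and Rancher services set up by the first.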

Installing your own Kubernetes cluster is a hard path to follow, so a managed Kubernetes orchestrator is the obvious choice. Kubernetes Kapsule is a great option, with:

The Ansible-Terraform duo for Infra-as-Code management is a real success, even if the encapsulation of Terraform by playbooks may seem counterintuitive.

In this context, where the scope is clearly defined and involves many heterogeneous tasks, using Ansible as the entry point makes the whole setup more accessible.

Ansible is excellent glue for all of this, and its Terraform module fits right in.

Rancher Labs’ GitOps component, Fleet, still seems immature to us.

The Custom Resource Definition (CRD) abstraction it proposes for managing continuous deployment flows and targets is somewhat hard to grasp. Redeployments can quickly go wrong when there are too many changes from one Helm chart release to the next.

Multiple cryptic error messages on redeployments prompted us to reconsider our approach and remove Fleet from the stack in favor of Drone pipelines.

For now, Fleet is a no-go for us, but we hope that will change in the future.

Drone is light, easy to deploy and configure, and offers a very nice graphical rendering to visualize dependencies between tasks, parallelization, and so on.

Since Harness acquired Drone in 2020, the tool has steadily gained in maturity, functionality, and design.

Drone has a good library of ready-made plugins, and producing your own to share with your teams is effortless. It was a good experience, and we recommend Drone CI.
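To give an idea of what writing your own involves: a Drone plugin is a container image whose `settings` reach it as environment variables prefixed with `PLUGIN_`, alongside Drone's own build variables such as `DRONE_REPO` and `DRONE_COMMIT_SHA`. Below is a minimal Python sketch of a plugin's logic; the `message` setting and what the plugin does with it are hypothetical.

```python
import os
import sys
from typing import Mapping


def build_message(env: Mapping[str, str]) -> str:
    """Build the plugin's output from its settings and Drone's build variables."""
    # A `message:` entry under the step's `settings:` arrives as PLUGIN_MESSAGE.
    template = env.get("PLUGIN_MESSAGE")
    if not template:
        raise ValueError("missing required setting: message")
    repo = env.get("DRONE_REPO", "unknown-repo")
    sha = env.get("DRONE_COMMIT_SHA", "")[:8]
    return f"{template} ({repo}@{sha})"


def main() -> int:
    """Entry point: print the message, or report the error and signal failure."""
    try:
        print(build_message(os.environ))
    except ValueError as err:
        print(err, file=sys.stderr)
        # A non-zero exit code marks the pipeline step as failed.
        return 1
    return 0
```

The image's entrypoint would call `sys.exit(main())`; keeping the logic in `build_message` makes it testable with a plain dictionary instead of real environment variables.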

Automating Rancher’s installation is straightforward, and configuring its Terraform provider greatly facilitates the implementation. Our use of Rancher in this project was not very advanced, but it is clearly a good tool for managing Kubernetes clusters, even ones created outside of Rancher.

The big plus is having a path mapped out for observability tooling. We recommend it.

There is nothing special to say here, except that everything works as explained in the documentation, and it's enjoyable! The Terraform provider makes life easier, and the cloud resources are simple enough to get started quickly.

Automating the assembly of this platform, excluding the application, took about ten full days. It was sometimes frustrating, but setting a real operational objective, rather than just testing for the sake of it, is a great learning experience.

It was a beautiful adventure, and one that is all the more motivating to share now that it is finished.

If you feel like it, you can deploy your own instance of this architecture by following this tutorial and make up your own mind.

WeScale helps companies through its technical expertise, but soft skills and continuous improvement are central to its identity. The company has built a strong culture around sharing. With more than 50 people based in France, it supports companies on their way to becoming cloud-native. WeScale's mission is to help its clients think through, build, and master their infrastructure and cloud applications.
