When we started building a Serverless product at Scaleway two years ago, we first thought about providing a single Serverless product that would make it easy to get your application up and running. We then decided to split it into two products, Serverless Functions and Serverless Containers, to allow more flexibility in configuring your Serverless environment while keeping the same simple process for getting a container on demand without having to manage a server.

Our Serverless platform enables you to deploy your functions and containerized applications on managed infrastructure.

The rise of containerization vs. Virtual Machines

With the rise of the cloud came containerization, which has become the preferred way of packaging, deploying, and managing cloud applications. It separates your applications from your infrastructure so you can deliver software faster.

Compared to virtual machines, containers save system resources because they share the host's kernel instead of each bundling a full guest operating system. They are also portable, so you can deploy them across many environments, and consistent: your applications run the same way wherever they are deployed, which ultimately accelerates development, testing, and production cycles.

The end goal is to speed up how applications are deployed, patched, and scaled.

Containers solve many problems and meet the need for faster environments. However, they still need to be configured and managed, which can become a job in itself when you should be focusing on developing your application.

What is Serverless Containers?

Serverless Containers is a scalable, managed compute platform for running your applications. Scaleway sets up your containers so they can run your application, whatever language or libraries you choose.

Engineers can focus on building applications and shipping them faster, leaving container deployment, scheduling, and cluster configuration to Serverless Containers.

If you already use, or plan to adopt, a microservices architecture, a service such as Serverless Containers can be a smooth first step toward that transition without disrupting your existing projects.

The advantages of Kubernetes without the YAML

If you've ever wanted to enjoy the benefits of Kubernetes without dealing with the heavy configuration or the learning curve of that powerful yet complex system, Serverless Containers could be an excellent answer.

This solution works particularly well when you need to host a containerized web app with scaling needs, serve your API using the backend of your choice, or process data or multimedia files.

Because you can add or remove resources just as easily, you keep full control over your resource consumption, and therefore your bill. Containers only run when an event triggers them, so you save money while no code is running.
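To illustrate why running only on events matters for the bill, here is a minimal sketch of a pay-per-use cost model compared with an always-on server. It assumes a simplified billing unit of memory multiplied by execution time (GB-seconds); the `Invocation` record and the price used below are hypothetical placeholders, not Scaleway's actual pricing model or rates.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass
class Invocation:
    """One request handled by the container (hypothetical billing record)."""
    duration_s: float  # seconds the container spent executing this request


def on_demand_cost(invocations: Iterable[Invocation], memory_gb: float, price_per_gb_s: float) -> float:
    # Pay-per-use model (simplified assumption): only the seconds spent executing
    # requests are billed, so idle periods between events cost nothing.
    busy_seconds = sum(inv.duration_s for inv in invocations)
    return busy_seconds * memory_gb * price_per_gb_s


def always_on_cost(hours: float, memory_gb: float, price_per_gb_s: float) -> float:
    # Comparison point: a server that never stops is billed for every second.
    return hours * 3600 * memory_gb * price_per_gb_s


if __name__ == "__main__":
    PRICE = 0.00001  # placeholder rate per GB-second, NOT Scaleway's actual pricing
    day = [Invocation(duration_s=0.2) for _ in range(10_000)]
    print(f"on-demand: {on_demand_cost(day, 0.5, PRICE):.4f}")
    print(f"always-on: {always_on_cost(24, 0.5, PRICE):.4f}")
```

With these placeholder numbers, 10,000 requests of 0.2 seconds each at 0.5 GB come to roughly 0.01 per day, versus about 0.43 for the same memory kept running around the clock.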

Our Serverless products have a free tier to let you get started, test our platform, and discover which tools best fit your needs.

How do Serverless Containers work?

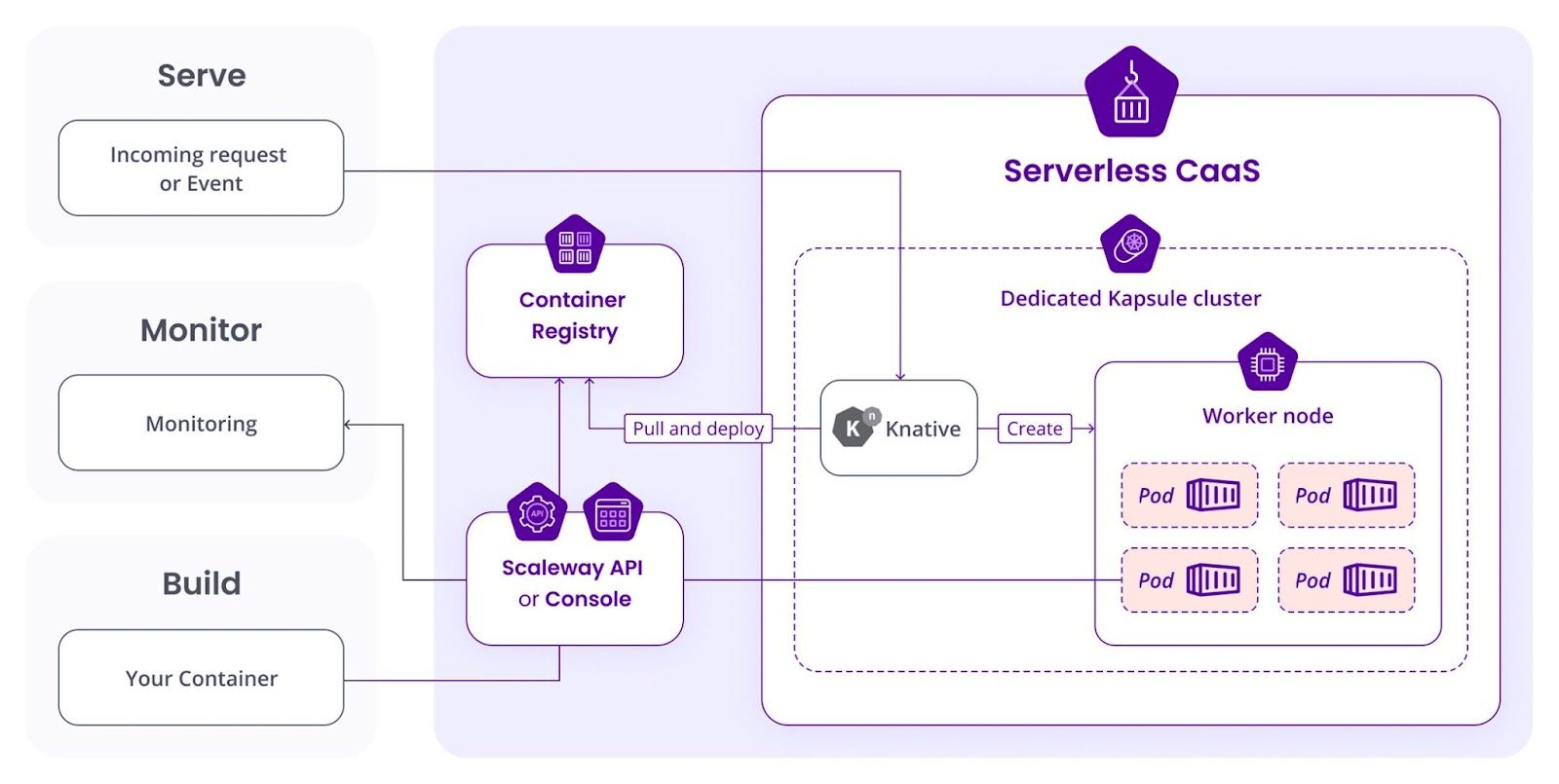

We built Serverless Containers from Knative components running on top of Kubernetes to abstract away the intricate details of configuration, so developers can focus on their software while their infrastructure stays up and running.

Knative is an open-source, Kubernetes-based platform used to build, deploy, and manage modern Serverless workloads. It codifies best practices drawn from demanding, real-world use cases.

With Serverless Containers, you choose the OS image and the language of your software. We take care of deploying it and scaling it up or down according to incoming demand.
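As an example of the kind of application you might package into an image, here is a minimal sketch of an HTTP service in Python using only the standard library. It assumes, following Knative's runtime contract, that the platform tells the container which port to listen on through the `PORT` environment variable (falling back to 8080 here); check the Scaleway documentation for the exact contract. `GREETING` is a hypothetical environment variable, used only to show how injected variables reach your code.

```python
import json
import os
from http.server import BaseHTTPRequestHandler, HTTPServer


def get_port(default: int = 8080) -> int:
    # Assumption: the listening port is provided in the PORT environment
    # variable (Knative runtime convention); fall back to 8080 if it is absent.
    return int(os.environ.get("PORT", default))


class HelloHandler(BaseHTTPRequestHandler):
    def do_GET(self) -> None:
        # GREETING is a hypothetical variable you could inject at deploy time.
        message = os.environ.get("GREETING", "Hello from a container")
        body = json.dumps({"message": message}).encode()
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def main() -> None:
    # Bind on all interfaces so requests routed into the container reach the app.
    server = HTTPServer(("0.0.0.0", get_port()), HelloHandler)
    server.serve_forever()


if __name__ == "__main__":
    main()
```

Because the service keeps no state between requests, the platform can start or stop copies of it freely as demand changes.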

You can manage your containers from the Scaleway Console, which interfaces with Knative and Kubernetes through an API developed by Scaleway. You can also manage your product directly through HTTP calls to that API.

Set up your first Serverless Container

To use our free tier to set up your first application on Serverless Containers, go to the Scaleway Console and follow these simple steps:

- Choose an image from your Container Registry

- Choose a name for your container

- Choose the resources that will be allocated to your container at runtime

- Scaling: our platform automatically adjusts the number of instances of your container to match the incoming load. A new instance is created when traffic exceeds the configured maximum number of concurrent executions per instance.

- Environment variables: define the variables to inject into your container, alongside the variables of its namespace

- Choose your container's privacy policy.

This policy defines whether your container can be invoked anonymously or only through an authentication mechanism provided by Scaleway. Simply choose between Public and Private.

- Estimated cost: we summarize the estimated cost based on your configuration, how long you expect to use the resource, and the scale of your expected usage. Note that one month is counted as 730 hours (8,760 hours in a year divided by 12).
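The scaling and estimated-cost settings above can be tied together in a rough sketch. It assumes a simplified rule of one instance per `max_concurrency` in-flight requests, clamped between a minimum and maximum instance count; Knative's real autoscaler averages observed concurrency over time windows, so this is only an approximation. The function names, default limits, and hourly price are hypothetical, not Scaleway's actual parameters or rates.

```python
import math

HOURS_PER_MONTH = 730  # 365 days * 24 hours = 8,760 hours, divided by 12 months


def instances_needed(concurrent_requests: int, max_concurrency: int,
                     min_scale: int = 0, max_scale: int = 5) -> int:
    # Simplified scaling rule (assumption): open a new instance each time the
    # in-flight requests exceed what the current instances can absorb.
    if max_concurrency <= 0:
        raise ValueError("max_concurrency must be positive")
    wanted = math.ceil(concurrent_requests / max_concurrency) if concurrent_requests > 0 else 0
    # Clamp to the configured bounds; min_scale=0 lets the service scale to zero.
    return max(min_scale, min(max_scale, wanted))


def estimated_monthly_cost(avg_instances: float, hourly_price_per_instance: float,
                           usage_ratio: float = 1.0) -> float:
    # usage_ratio: fraction of the month the instances are expected to be running.
    return avg_instances * HOURS_PER_MONTH * usage_ratio * hourly_price_per_instance
```

For example, 200 concurrent requests with a limit of 50 per instance gives 4 instances, while a quiet period with no traffic and `min_scale=0` gives none.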

Learn more

As always, if you have any questions or remarks, you are welcome to join our Scaleway Slack Community or ping us in our live chat.

Here’s a simple tutorial on getting started with Serverless Containers and creating your first container.

And here's one on managing your containers.