Generative AI (GAI) has been the talk of the town since ChatGPT exploded onto the scene in late 2022. But it’s not the only type of artificial intelligence. Symbolic AI, also known as Good Old-Fashioned Artificial Intelligence (GOFAI), grew out of the work of Alan Turing and others in the 1950s and 60s. And yet, it remains relevant today.

One notable reason is that symbolic AI is far less resource-intensive than GPU-heavy GAI. It can therefore run perfectly well on standard CPUs, and hence more cheaply. As it uses a pre-established set of rules and symbols to represent and manipulate data, it doesn’t need training. It is fully capable of functions like natural language processing (think Siri or Alexa) and of knowledge management applications, like filtering emails.

So let’s take a look at why and how symbolic AI is still widely used today, “old-fashioned” as it may be.

How Symbolic AI remains relevant today

So called because it relies on rules and symbols to make “if this then that”-type determinations, symbolic AI effectively began in 1959, with Herbert Simon, Allen Newell and Cliff Shaw, who aimed to build a computer that could solve problems in a similar way to our brains. Together, they built the General Problem Solver, which applies formal operators through state-space search, using means-ends analysis (the principle of reducing the distance between a problem’s current state and its goal state).
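The idea of closing the gap between a current state and a goal state can be sketched in a few lines of Python. This is a simplified illustration in the spirit of the General Problem Solver, not a reconstruction of the original program: the `Operator` class, the `achieve` and `solve` functions, and the tea-making domain are all hypothetical names chosen for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Operator:
    name: str
    preconditions: frozenset  # facts that must hold before applying
    adds: frozenset           # facts made true by applying
    deletes: frozenset = frozenset()  # facts made false by applying


def achieve(state, goal, operators, stack=()):
    """Return (new_state, steps) that make `goal` true, or None if stuck."""
    if goal in state:
        return state, []
    if goal in stack:  # already chasing this goal higher up: avoid loops
        return None
    for op in operators:
        if goal not in op.adds:
            continue  # this operator does not reduce the difference
        current, steps = state, []
        for pre in sorted(op.preconditions):  # each precondition is a subgoal
            result = achieve(current, pre, operators, stack + (goal,))
            if result is None:
                break
            current, sub_steps = result
            steps += sub_steps
        else:
            if not op.preconditions <= current:
                continue  # a later subgoal undid an earlier one
            current = (current - op.deletes) | op.adds
            return current, steps + [op.name]
    return None


def solve(state, goals, operators):
    """Achieve each goal in turn; return the list of operator names or None."""
    state, plan = frozenset(state), []
    for goal in goals:
        result = achieve(state, goal, operators)
        if result is None:
            return None
        state, steps = result
        plan += steps
    return plan if all(g in state for g in goals) else None


# Hypothetical toy domain, for illustration only.
OPERATORS = [
    Operator("fill kettle", frozenset(), frozenset({"have water"})),
    Operator("boil water", frozenset({"have water"}), frozenset({"hot water"})),
    Operator("brew tea", frozenset({"hot water", "have teabag"}),
             frozenset({"tea ready"})),
]

print(solve({"have teabag"}, ["tea ready"], OPERATORS))
```

Each recursive call picks an operator whose effects include the missing fact, then treats that operator's preconditions as new subgoals, which is the "reduce the difference" principle described above.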

Jump ahead to today, and symbolic AI remains widely used, across four main case types:

- Expert systems: AI applications that use knowledge and rules to mimic the decision-making process of human experts in a specific domain.

Uses include medical diagnosis (for example, detecting tumors), financial analysis, and customer service.

- Natural Language Processing (NLP): AI applications that can understand and generate human language.

Uses cover language translation, text analysis and speech recognition, as with Apple’s Siri, for example.

- Robotics: AI apps powering robots of many kinds.

Uses include robots that can navigate in an unknown environment, avoid obstacles, and interact with humans.

- Knowledge representation: Symbolic AI can be used to represent knowledge in a structured and logical way.

Uses include applications such as databases and knowledge management systems, like Golem.ai’s email sorting solution, InBoxCare (below).
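To make the "knowledge plus rules" idea behind expert systems concrete, here is a minimal forward-chaining sketch in Python. The rules, fact names, and the `forward_chain` function are invented for illustration and do not come from any real product; the point is only that conclusions are derived from explicit rules, and that each derivation can be traced.

```python
# Each rule: (set of conditions that must all hold, conclusion to add).
# Hypothetical customer-service rules, for illustration only.
RULES = [
    ({"mentions refund", "order delivered"}, "refund request"),
    ({"refund request", "amount over limit"}, "needs manager approval"),
    ({"refund request"}, "open billing ticket"),
]


def forward_chain(facts, rules):
    """Apply rules repeatedly until no rule adds a new fact."""
    known = set(facts)
    trace = []  # (conclusion, conditions that triggered it), in firing order
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in rules:
            if conditions <= known and conclusion not in known:
                known.add(conclusion)
                trace.append((conclusion, sorted(conditions)))
                changed = True
    return known, trace


known, trace = forward_chain(
    {"mentions refund", "order delivered", "amount over limit"}, RULES
)
for conclusion, because in trace:
    print(f"{conclusion} <- {because}")
```

No training step is involved: changing the system's behavior means editing the rule list.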

Naturally, symbolic AI also has its limits. Facial recognition, for example, is impossible, as is content generation.

But let’s stay focused on what it can do: you might be surprised!

Why CPU beats GPU for AI efficiency

Unlike ML, which requires energy-intensive GPUs, CPUs are enough for symbolic AI’s needs. This means symbolic AI is considerably more frugal than GAI.

In ML, knowledge is often represented in a high-dimensional space, which requires a lot of computing power to process and manipulate. In contrast, symbolic AI uses more efficient algorithms and techniques, such as rule-based systems and logic programming, which require less computing power.

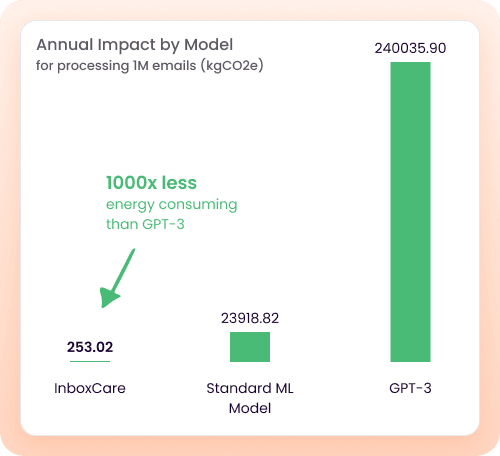

Symbolic AI needs, on average, 143 times less energy than a classic machine learning (ML) model. And this naturally translates to fewer emissions. French startup Golem.ai has notably established that one of their email-sorting AI models emits 1,000 times less CO2eq than GPT-3.

This impact is further reduced by choosing a cloud provider with data centers in France, as Golem.ai does with Scaleway. As carbon intensity (the quantity of CO2 generated per kWh produced) is nearly 12 times lower in France than in the US, for example, the energy needed for AI computing produces considerably fewer emissions. More on AI’s environmental impact here.
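The arithmetic behind this is simple: emissions equal energy consumed multiplied by the carbon intensity of the grid supplying it. The short Python sketch below uses placeholder numbers picked only to reproduce a 12-to-1 ratio; they are not measured figures for France, the US, or any provider, and the function and constant names are made up for the example.

```python
def emissions_g(energy_kwh: float, intensity_g_per_kwh: float) -> float:
    """Emissions in g CO2eq = energy used (kWh) x carbon intensity (g CO2eq/kWh)."""
    return energy_kwh * intensity_g_per_kwh


# Placeholder values for illustration only, NOT measured figures.
ENERGY_KWH = 10.0   # same workload on both grids
GRID_LOW = 30.0     # hypothetical low-carbon grid, g CO2eq per kWh
GRID_HIGH = 360.0   # hypothetical grid with 12x the carbon intensity

low = emissions_g(ENERGY_KWH, GRID_LOW)
high = emissions_g(ENERGY_KWH, GRID_HIGH)
print(f"Same workload, {high / low:.0f}x more CO2eq on the higher-intensity grid")
```

Because the relationship is linear, any reduction in energy use and any reduction in carbon intensity multiply together.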

How Golem.ai uses Symbolic AI

Golem.ai uses Natural Language Processing (NLP) to perform tasks such as creating a spreadsheet or dashboard from a 300-page document, or automatically sorting and forwarding emails to the right person. This naturally leads to considerable time savings.

“One of our clients has about 15,000 emails coming in every day on its main inbox”, says Golem.ai CEO Killian Vermersch. “One customer might try to order something, another may be asking about pricing. These are two very similar emails, but they’re not processed in the same way. If the customer wants to order one specific thing, our AI will search the catalog to see if what they want is there. And then prepare that for the sales team. All while keeping the human in the loop; that's quite important to us.”
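As a rough illustration of the kind of routing described in this quote, the Python sketch below tells an order email from a pricing email with explicit keyword rules, looks the requested item up in a catalog, and records why it decided what it did. It is a deliberately naive toy, not Golem.ai's technology: the keyword lists, catalog entries, queue names, and the `route_email` function are all assumptions made for the example.

```python
import re

# Hypothetical keyword rules, catalog and queues, for illustration only.
INTENT_KEYWORDS = {
    "order": {"order", "buy", "purchase"},
    "pricing": {"price", "pricing", "cost", "quote"},
}
CATALOG = ["desk lamp", "office chair"]
QUEUES = {"order": "sales", "pricing": "quotes"}


def route_email(text):
    """Pick an intent and a queue, and keep the reasons for the decision."""
    lowered = text.lower()
    words = set(re.findall(r"[a-z]+", lowered))
    intent, reasons = None, []
    for name, keywords in INTENT_KEYWORDS.items():
        hits = sorted(words & keywords)
        if hits:  # first intent with a matching keyword wins
            intent = name
            reasons.append(f"words {hits} read as intent '{name}'")
            break
    products = [p for p in CATALOG if p in lowered] if intent == "order" else []
    if intent == "order":
        reasons.append(f"catalog items found: {products}")
    return {
        "intent": intent,
        "queue": QUEUES.get(intent, "general"),
        "products": products,
        "reasons": reasons,
        "needs_human_review": True,  # a person confirms before anything is sent
    }


print(route_email("I would like to order two desk lamps"))
print(route_email("What is the price of an office chair?"))
```

The `reasons` list is the useful part: when a message is misrouted, the explicit record shows which rule fired, so the rule can be corrected directly.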

As such, Golem.ai applies linguistics and neurolinguistics to a given problem, rather than statistics. Their algorithm includes almost every known language, enabling the company to analyze large amounts of text. And it does so whilst using minimal resources, notably because, unlike GAI, which consumes considerable amounts of energy during its training stage, symbolic AI doesn’t need to be trained.

Golem.ai also leans on external data, including from Scaleway, to tell clients “on a day to day basis how much CO2 equivalent we produce, and how much we save,” says Vermersch. “We want the market to move in a frugal direction. We actually need this planet to work in order to do business!”

Furthermore, unlike GAI companies like OpenAI, Golem.ai is totally transparent about how its symbolic AI works, and openly provides data on how its AIs come up with their results. Responsible and accountable!

How other companies use Symbolic AI

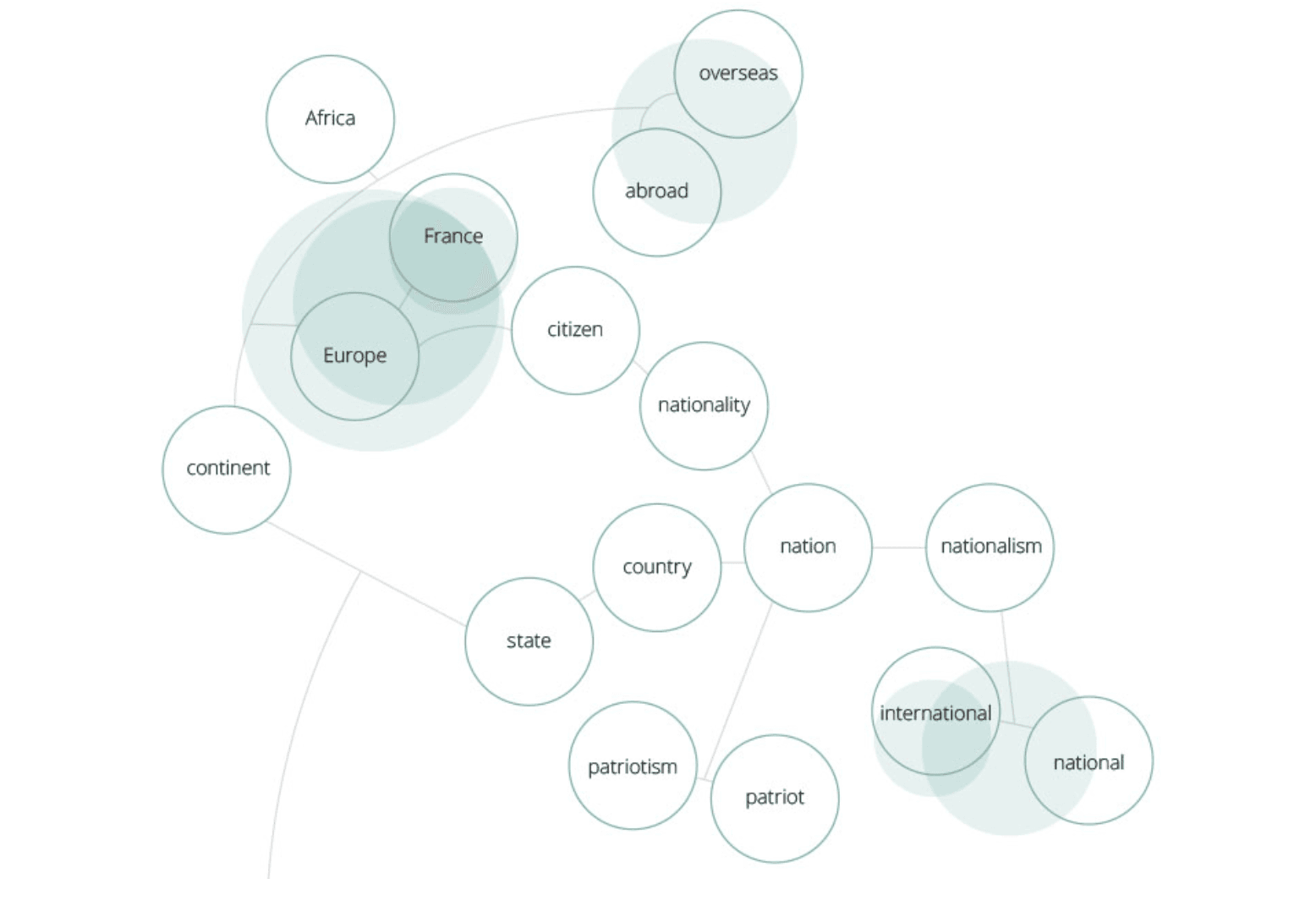

Some companies have chosen to ‘boost’ symbolic AI by combining it with other kinds of artificial intelligence. Inbenta works in the initially-symbolic field of Natural Language Processing, but adds a layer of ML to increase the efficiency of this processing. The ML layer processes hundreds of thousands of lexical functions, featured in dictionaries, that allow the system to better ‘understand’ relationships between words. It calls this approach neuro-symbolic AI.

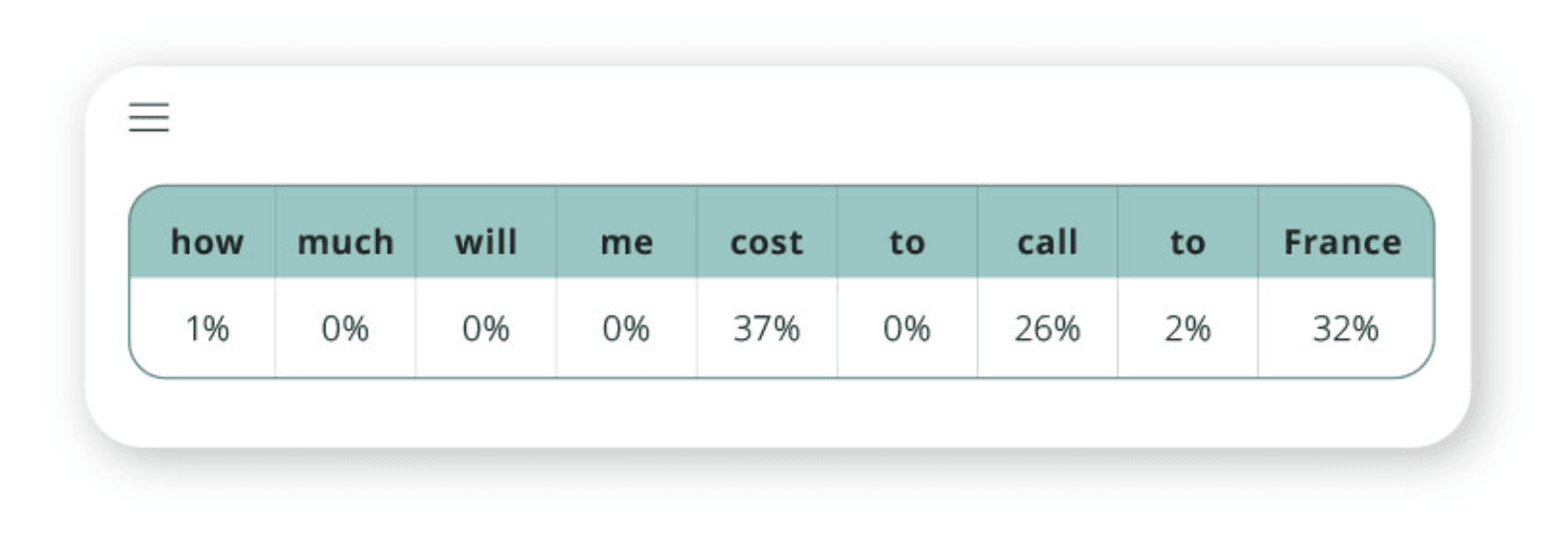

So, as Inbenta explains here, if someone types “how much wll me cost to call to francw” (typos deliberate) into a chatbot, Inbenta’s systems will first analyze the request like this, giving semantic weighting percentages to pick out those words with the most meaning in the query:

Then the algorithm works out the relationships between words, based on the aforementioned dictionaries, to establish their meanings:

This will give a “Semantic Coincidence Score” which allows the query to be matched with a pre-established frequently-asked question and answer, and thereby provide the chatbot user with the answer she was looking for.
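The general shape of this pipeline (tolerate typos, weight the meaningful words, match against known questions) can be imitated with a toy Python sketch. To be clear, this is not Inbenta's algorithm or its Semantic Coincidence Score: the lexicon, the integer weights, the FAQ entries, and the simple weighted-overlap score below are all assumptions made for illustration, with Python's standard `difflib` module standing in for real linguistic processing.

```python
import difflib

# Hypothetical lexicon: term -> weight (higher = carries more meaning).
WEIGHTS = {"cost": 3, "call": 3, "france": 4, "roaming": 4, "bill": 3, "pay": 3}

# Hypothetical FAQ entries, each tagged with its key terms.
FAQS = {
    "What does it cost to call France?": {"cost", "call", "france"},
    "How do I pay my bill?": {"pay", "bill"},
}


def normalize(query):
    """Map each word to its closest lexicon term; drop words with no close match."""
    terms = []
    for word in query.lower().split():
        match = difflib.get_close_matches(word, list(WEIGHTS), n=1, cutoff=0.8)
        if match:
            terms.append(match[0])
    return terms


def overlap_score(query_terms, faq_terms):
    """Weighted overlap: weight of shared terms / weight of all terms involved."""
    query_terms = set(query_terms)
    shared = sum(WEIGHTS[t] for t in query_terms & faq_terms)
    total = sum(WEIGHTS[t] for t in query_terms | faq_terms)
    return shared / total if total else 0.0


def best_match(query):
    """Return the FAQ question with the highest overlap score, plus that score."""
    terms = normalize(query)
    return max(
        ((question, overlap_score(terms, faq_terms))
         for question, faq_terms in FAQS.items()),
        key=lambda pair: pair[1],
    )


print(normalize("how much wll me cost to call to francw"))
print(best_match("how much wll me cost to call to francw"))
```

Every intermediate value (the corrected terms, the weights, the score per question) can be printed and inspected, which is the transparency argument made for this family of approaches.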

For Inbenta, neuro-symbolic AI is both transparent and frugal. We know how it works out answers to queries, and it doesn’t require energy-intensive training. This aspect also saves time compared with GAI, as without the need for training, models can be up and running in minutes.

Equally cutting-edge, France’s AnotherBrain is a fast-growing symbolic AI startup whose vision is to perfect “Industry 4.0” by using their own image recognition technology for quality control in factories. Specifically, “we learn what a perfect component is, so that our tech can identify defaults automatically, without needing to refer to extensive product libraries, which can be very complex for industrial players to obtain,” the company’s Charlotte Senhadji told BPI earlier this year.

Like Inbenta’s, “our technology is frugal in energy and data, it learns autonomously, and can explain its decisions”, affirms AnotherBrain on its website. And given that the startup’s founder, Bruno Maisonnier, previously founded Aldebaran Robotics (creators of the NAO and Pepper robots), AnotherBrain is unlikely to be a flash in the pan.

Why Symbolic AI is here to stay

So not only is symbolic AI the most mature and frugal, it’s also the most transparent, and therefore accountable. As pressure mounts on GAI companies to explain where their apps’ answers come from, symbolic AI will never have that problem.

And that makes sense not only for ethical reasons, but also for technical ones. Symbolic AI’s explainability “also has a really amazing side-effect where it makes it much easier to fix bugs”, says Golem.ai’s Vermersch. “Because you want to have explicit information telling you ‘we understood this word as this’ [so there’s a possibility for a human to see that mistake] and go and say ‘you're completely wrong, so we need to fix this’.”

Could symbolic AI’s example encourage GAI to become more frugal and accountable? Vermersch points to recent developments like Meta’s LLaMA being “much more lightweight than GPT”, which is a start. He adds that OpenAI’s Sam Altman has said his company’s focus going forward will be on making models better, not bigger. “Things are going in the right direction,” he concedes, “but too slowly. A lot of data is being thrown around. Plus there’s been a lot of debate around who owns what’s generated with ChatGPT. Current answers are a mess. Whereas at Golem.ai, we don't even have an incentive to use [sell] the data from one customer to another.”

What if symbolic AI were, in certain use cases, the ideal way to unlock the power of AI without breaking the bank or using excessive resources, and with the added bonus of always being able to explain its answers? Give any of the above three startups a try and find out…

Find out more about the latest AI trends at Scaleway’s exclusive AI conference, ai-PULSE, November 17 at Station F! More info here…