Understanding SSH bastion: use cases and tips

To launch an application in the cloud, you need to be able to access the servers on which it is hosted. We will learn how to do that in this article.

As a Professional Services Consultant at Scaleway, I help companies wishing to migrate to Scaleway, as part of the Scale Program launched earlier this year, in which my team plays a key role. We provide clients with ready-to-use infrastructure, and with the skills they need to make the change, to allow them to become autonomous later on.

Our first client was Kanta, a startup founded two years ago, which helps accountants prevent money-laundering and automate previously time-consuming tasks. Their technical team’s priority is to develop their application, not their infrastructure. This is why they asked the Scale Program to help them with their migration.

The actions carried out during this mission are the result of work between several teams within Scaleway: the Solutions Architects, the Key Account Manager, as well as the Startup Program team, which supports startups.

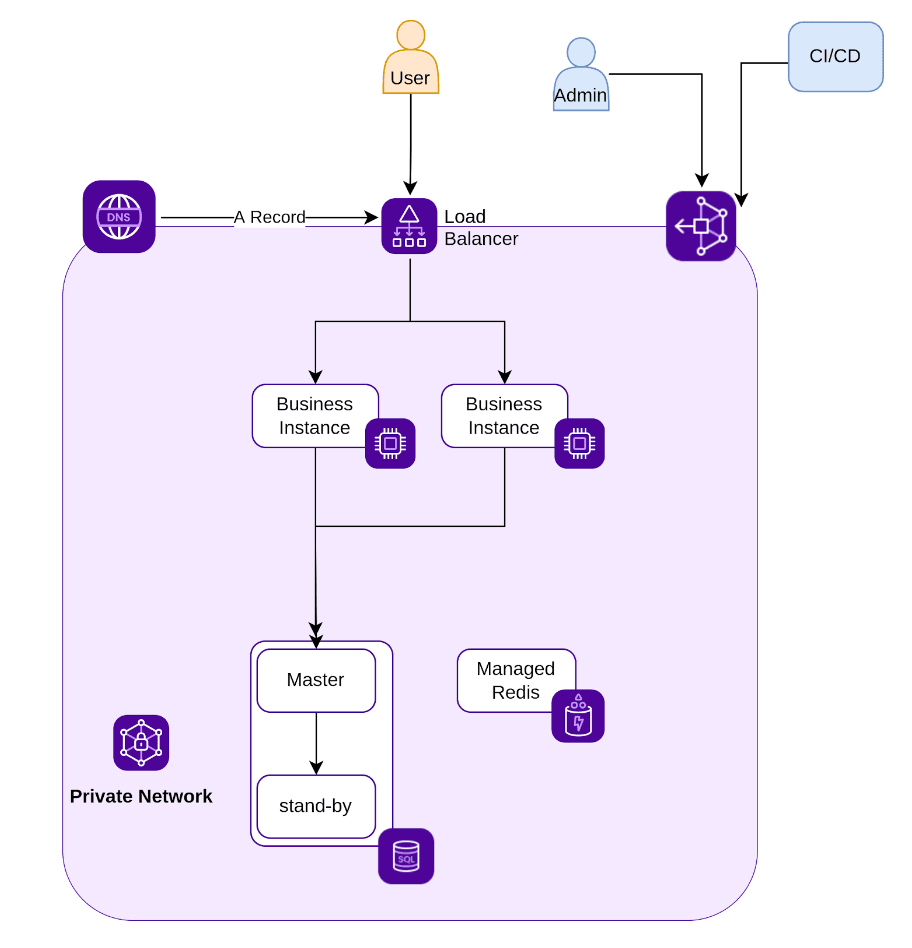

In this article, I will share with you the Terraform templates that were developed to enable this migration, and the implementation, via Terraform, of the bastion, the database, the instances, the Object Storage bucket and the load balancer.

To integrate a customer into the Scale program, we first had to define their needs, in order to understand how we can help them. Based on the target architecture recommended by the Solutions Architect, we defined the elements necessary for a Scaleway deployment.

When we work for a client, we focus on the tools we will use. The choice of infrastructure-as-code tools is central to our approach, because they guarantee the reproducibility of deployments, and simplify the creation of multiple identical environments.

Since the client was not yet familiar with Terraform, we decided together to start with simple, well-documented code. Our service also includes skills transfer, so we made sure to focus on the Terraform aspect, to ensure that the client's teams can become autonomous afterwards.

The application that Kanta wished to migrate from OVH to Scaleway was a traditional application composed of:

Taking these needs into account, we proposed a simple architecture that meets them while isolating resources in a Private Network. The customer will be able to access their machines through an SSH bastion. To limit administrative tasks, we advised the customer to use a managed MySQL database and a managed Redis cluster.

Here, the customer already had a Scaleway account that they used for testing. We therefore decided to create a new project within this account, which lets us segment resources and access by environment.

I started working on the Terraform files that deploy the development environment. I worked incrementally, starting from the lowest layers and adding elements as I went along.

During our discussions with the client, we agreed on several things:

Firstly, the client wanted all of its data to be stored in the Paris region.

Secondly, in order to persist their Terraform state file, we decided to store it in an Object Storage bucket.

Finally, we decided to use a recent version of Terraform, and therefore added a minimum version constraint on Terraform.

When we take these different constraints into account, we end up with a providers.tf file, which looks like this:

```hcl
terraform {
  required_providers {
    scaleway = {
      source = "scaleway/scaleway"
    }
  }
  // State storage
  backend "s3" {
    bucket = "bucket_name"
    // Path in the Object Storage bucket
    key = "dev/terraform.tfstate"
    // Region of the bucket
    region = "fr-par"
    // Change the endpoint if we change the region
    endpoint = "https://s3.fr-par.scw.cloud"
    // Needed for SCW
    skip_credentials_validation = true
    skip_region_validation      = true
  }
  // Terraform version
  required_version = ">= 1.3"
}
```

This file allowed us to start deploying resources. As we saw earlier, the client is not yet familiar with Terraform, but wants to deploy the different environments themselves.

To make sure that everything worked, I still needed to deploy my resources as I went. So I used a test account with a temporary Object Storage bucket to validate my code.

Importantly, the bucket storing our Terraform states had to be created by hand, in another project, before our first Terraform run.
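One detail the providers.tf above leaves implicit: the random_password resources used later for the database and Redis come from the hashicorp/random provider. Terraform infers that provider automatically, but declaring it makes the dependency visible. The sketch below shows how the terraform block could be extended, together with a default Scaleway provider configuration pinned to Paris to reflect the data-location requirement. The zone fr-par-1 is an assumption, and the default provider block assumes none is declared elsewhere in the configuration.

```hcl
terraform {
  required_providers {
    scaleway = {
      source = "scaleway/scaleway"
    }
    // Provider for the random_password resources (database and Redis passwords)
    random = {
      source = "hashicorp/random"
    }
  }
  // The backend "s3" block and required_version stay here, unchanged
}

// Default (unaliased) Scaleway provider: resources that do not set
// their own region or zone are created in Paris (assumed zone fr-par-1)
provider "scaleway" {
  region = "fr-par"
  zone   = "fr-par-1"
}
```

Credentials can then stay out of the code, for example in the SCW_ACCESS_KEY and SCW_SECRET_KEY environment variables read by the Scaleway provider.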

In the interests of simplicity, I decided to create a Terraform file for each type of resource, and so I ended up with a first project.tf file. This file is very short, it only contains the code for the creation of the new project:

```hcl
resource "scaleway_account_project" "project" {
  provider = scaleway
  name     = "Project-${var.env_name}"
}
```

To keep the code reusable, the project creation code requires an env_name variable, so I created a variables.tf file, in which I declared my first variable:

```hcl
variable "env_name" {
  default = "dev"
}
```

This env_name variable is important, because we will use it in most resource names. This may seem redundant, but with multiple projects it would be easy to end up in the wrong project without realizing it. Including the environment name in resource names limits the risk of errors.
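Since a typo in env_name would silently produce resources named after the wrong environment, the variable can also validate its own value. A minimal sketch, assuming the three environments are called dev, preprod and prod (names chosen here for illustration):

```hcl
// Environment name, reused in most resource names
variable "env_name" {
  type        = string
  default     = "dev"
  description = "Name of the environment (assumed: dev, preprod or prod)"

  // Reject unexpected values such as "prd" at plan time
  validation {
    condition     = contains(["dev", "preprod", "prod"], var.env_name)
    error_message = "The env_name value must be one of: dev, preprod, prod."
  }
}
```

This declaration would replace the one above; Terraform then stops at plan time with the error message if an unexpected name is passed.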

Since we are creating a new project, we need to attach to it the public SSH keys that allow the client's teams to connect to their machines via the Public Gateway SSH bastion. So I created a new ssh_keys.tf file, where I can add the client's public SSH keys:

```hcl
// Create public SSH keys and attach them to the Project
resource "scaleway_iam_ssh_key" "ci-cd" {
  name       = "ci-cd"
  public_key = "ssh-ed25519 xxxxxxxx"
  project_id = scaleway_account_project.project.id
}

resource "scaleway_iam_ssh_key" "user-admin" {
  name       = "user-admin"
  public_key = "ssh-ed25519 xxxxxxxx"
  project_id = scaleway_account_project.project.id
}
```

The public SSH keys could also be passed as a variable in order to differentiate access between environments (this is not done here, to keep the code as simple as possible).
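A possible sketch of that variant, using for_each so that each environment can receive its own set of keys. The ssh_public_keys variable and the keys resource name are hypothetical, and this block would replace the two individual scaleway_iam_ssh_key resources rather than sit next to them:

```hcl
// Hypothetical variable: map key = key name, map value = public key
variable "ssh_public_keys" {
  type = map(string)
  default = {
    "ci-cd"      = "ssh-ed25519 xxxxxxxx"
    "user-admin" = "ssh-ed25519 xxxxxxxx"
  }
}

// One IAM SSH key per map entry, attached to the Project
resource "scaleway_iam_ssh_key" "keys" {
  for_each   = var.ssh_public_keys
  name       = "${var.env_name}-${each.key}"
  public_key = each.value
  project_id = scaleway_account_project.project.id
}
```

A pre-production or production environment would then override ssh_public_keys with its own map, without touching the resource code.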

I then created a network.tf file, in which I have the code for the network elements. I started by creating a private network (PN):

```hcl
// Create the Private Network
resource "scaleway_vpc_private_network" "pn" {
  name       = "${var.env_name}-private"
  project_id = scaleway_account_project.project.id
}
```

One particularity is the project_id argument, which you will find in many resources. I am working in a project I have just created, but my Terraform provider's default project is one of my older projects, so I had to specify the new project explicitly for every resource.

I then created my Public Gateway. For this I needed a public IP and a DHCP configuration:

```hcl
// Reserve an IP for the Public Gateway
resource "scaleway_vpc_public_gateway_ip" "gw_ip" {
  project_id = scaleway_account_project.project.id
}

// Create the DHCP rules for the Public Gateway
resource "scaleway_vpc_public_gateway_dhcp" "dhcp" {
  project_id           = scaleway_account_project.project.id
  subnet               = "${var.private_cidr.network}.0${var.private_cidr.subnet}"
  address              = "${var.private_cidr.network}.1"
  pool_low             = "${var.private_cidr.network}.2"
  pool_high            = "${var.private_cidr.network}.99"
  enable_dynamic       = true
  push_default_route   = true
  push_dns_server      = true
  dns_servers_override = ["${var.private_cidr.network}.1"]
  dns_local_name       = scaleway_vpc_private_network.pn.name
  depends_on           = [scaleway_vpc_private_network.pn]
}
```

This code required a new variable, private_cidr, which I added to my variables file:

```hcl
// CIDR for our PN
variable "private_cidr" {
  default = {
    network = "192.168.0"
    subnet  = "/24"
  }
}
```

Here I am using an object variable (written in a JSON-like syntax) with two elements, network and subnet, which I can reference individually:

```hcl
var.private_cidr.network
var.private_cidr.subnet
```

It would be possible to create two separate variables, but this very simple object is a good example of how Terraform variables can group related values.
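Strictly speaking, private_cidr is an HCL object value rather than JSON. As a sketch, assuming you want Terraform to check the shape of that value and compute host addresses instead of concatenating strings, the declaration could be given an explicit type and combined with the built-in cidrhost function. This declaration would replace the one in variables.tf; the local value names are hypothetical.

```hcl
// Same variable, with an explicit type so malformed values are rejected
variable "private_cidr" {
  type = object({
    network = string
    subnet  = string
  })
  default = {
    network = "192.168.0"
    subnet  = "/24"
  }
}

locals {
  // Full CIDR block, e.g. "192.168.0.0/24"
  private_cidr_block = "${var.private_cidr.network}.0${var.private_cidr.subnet}"

  // cidrhost() returns the Nth host address inside the block
  gateway_ip = cidrhost(local.private_cidr_block, 1)   // "192.168.0.1"
  bastion_ip = cidrhost(local.private_cidr_block, 100) // "192.168.0.100"
}
```

With these locals, the DHCP configuration could use local.gateway_ip instead of building the address by hand, which keeps the address arithmetic in one place.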

Now I could create my Public Gateway and attach all the necessary information to it:

```hcl
// Create the Public Gateway
resource "scaleway_vpc_public_gateway" "pgw" {
  name            = "${var.env_name}-gateway"
  project_id      = scaleway_account_project.project.id
  type            = var.pgw_type
  bastion_enabled = true
  ip_id           = scaleway_vpc_public_gateway_ip.gw_ip.id
  depends_on      = [scaleway_vpc_public_gateway_ip.gw_ip]
}

// Attach Public Gateway, Private Network and DHCP config together
resource "scaleway_vpc_gateway_network" "vpc" {
  gateway_id         = scaleway_vpc_public_gateway.pgw.id
  private_network_id = scaleway_vpc_private_network.pn.id
  dhcp_id            = scaleway_vpc_public_gateway_dhcp.dhcp.id
  cleanup_dhcp       = true
  enable_masquerade  = true
  depends_on = [
    scaleway_vpc_public_gateway.pgw,
    scaleway_vpc_private_network.pn,
    scaleway_vpc_public_gateway_dhcp.dhcp
  ]
}
```

This code required a new pgw_type variable, to specify the Public Gateway type:

```hcl
// Type for the Public Gateway
variable "pgw_type" {
  default = "VPC-GW-S"
}
```

During our discussions, the customer expressed the need for a bastion machine that would serve as both their administration machine and their jump host. So I created a new bastion.tf file. This machine has an extra disk and is attached to our PN. It also has a fixed IP outside the range of addresses reserved for dynamic DHCP allocation.

```hcl
// Secondary disk for the bastion
resource "scaleway_instance_volume" "bastion-data" {
  project_id = scaleway_account_project.project.id
  name       = "${var.env_name}-bastion"
  size_in_gb = var.bastion_data_size
  type       = "b_ssd"
}

// Bastion instance
resource "scaleway_instance_server" "bastion" {
  project_id = scaleway_account_project.project.id
  name       = "${var.env_name}-bastion"
  image      = "ubuntu_jammy"
  type       = var.bastion_type

  // Attach the instance to the Private Network
  private_network {
    pn_id = scaleway_vpc_private_network.pn.id
  }

  // Attach the secondary disk
  additional_volume_ids = [scaleway_instance_volume.bastion-data.id]

  // Simple user data, may be customized
  user_data = {
    cloud-init = <<-EOT
      #cloud-config
      runcmd:
        - apt-get update
        - reboot # Make sure the static DHCP reservation catches up
    EOT
  }
}

// DHCP reservation for the bastion inside the Private Network
resource "scaleway_vpc_public_gateway_dhcp_reservation" "bastion" {
  gateway_network_id = scaleway_vpc_gateway_network.vpc.id
  mac_address        = scaleway_instance_server.bastion.private_network.0.mac_address
  ip_address         = var.bastion_IP
  depends_on         = [scaleway_instance_server.bastion]
}
```

This code requires the following variables:

```hcl
// Instance type for the bastion
variable "bastion_type" {
  default = "PRO2-XXS"
}

// Bastion IP in the PN
variable "bastion_IP" {
  default = "192.168.0.100"
}

// Second disk size for the bastion
variable "bastion_data_size" {
  default = "40"
}
```

For their application, the customer needs a MySQL database. So we created a managed Database Instance, non-redundant because this is the development environment.

We started by generating a random password:

```hcl
// Generate a custom password
resource "random_password" "db_password" {
  length           = 16
  special          = true
  override_special = "!#$%&*()-_=+[]{}<>:?"
  min_lower        = 2
  min_numeric      = 2
  min_special      = 2
  min_upper        = 2
}
```

We then created our database using the password we had just generated, and attached it to our PN. This is a MySQL 8 instance, backed up every day with a 7-day retention.

```hcl
resource "scaleway_rdb_instance" "main" {
  project_id     = scaleway_account_project.project.id
  name           = "${var.env_name}-rdb"
  node_type      = var.database_instance_type
  engine         = "MySQL-8"
  is_ha_cluster  = var.database_is_ha
  disable_backup = false
  // Backup every 24h, keep 7 days
  backup_schedule_frequency = 24
  backup_schedule_retention = 7
  user_name                 = var.database_username
  // Use the password generated above
  password          = random_password.db_password.result
  region            = "fr-par"
  tags              = ["${var.env_name}", "rdb_pn"]
  volume_type       = "bssd"
  volume_size_in_gb = var.database_volume_size

  private_network {
    ip_net = var.database_ip
    pn_id  = scaleway_vpc_private_network.pn.id
  }

  depends_on = [scaleway_vpc_private_network.pn]
}
```

Here the backup frequency and retention are hard-coded in the file, but corresponding variables could be created to centralize the changes to make between environments.

This code requires the following variables:

```hcl
// Database IP in the PN
variable "database_ip" {
  default = "192.168.0.105/24"
}

variable "database_username" {
  default = "kanta-dev"
}

variable "database_instance_type" {
  default = "db-dev-s"
}

variable "database_is_ha" {
  default = false
}

// Volume size for the DB
variable "database_volume_size" {
  default = 10
}
```

The database_is_ha variable lets us specify whether the database is deployed in standalone or replicated mode. It will mainly be used for the move to production.
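As mentioned above, the backup frequency and retention could be moved into variables as well. A minimal sketch; the variable names database_backup_frequency and database_backup_retention are hypothetical, and the defaults reproduce the values currently hard-coded in the scaleway_rdb_instance resource:

```hcl
// Hypothetical: backup frequency, in hours
variable "database_backup_frequency" {
  type    = number
  default = 24
}

// Hypothetical: backup retention, in days
variable "database_backup_retention" {
  type    = number
  default = 7
}

// In the scaleway_rdb_instance "main" resource, the hard-coded values become:
//   backup_schedule_frequency = var.database_backup_frequency
//   backup_schedule_retention = var.database_backup_retention
```

Each environment can then keep or change its backup policy from the variables alone, like the other database settings.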

We proceeded in the same way for Redis, by creating a random password.

```hcl
// Generate a random password
resource "random_password" "redis_password" {
  length           = 16
  special          = true
  override_special = "!#$%&*()-_=+[]{}<>:?"
  min_lower        = 2
  min_numeric      = 2
  min_special      = 2
  min_upper        = 2
}
```

Then we created a managed Redis 7.0 cluster, attached to our Private Network:

```hcl
resource "scaleway_redis_cluster" "main" {
  project_id = scaleway_account_project.project.id
  name       = "${var.env_name}-redis"
  version    = "7.0.5"
  node_type  = var.redis_instance_type
  user_name  = var.redis_username
  // Use the password generated above
  password = random_password.redis_password.result
  // Cluster size; 1 means standalone
  cluster_size = 1

  // Attach the Redis instance to the Private Network
  private_network {
    id = scaleway_vpc_private_network.pn.id
    service_ips = [
      var.redis_ip,
    ]
  }

  depends_on = [
    scaleway_vpc_private_network.pn
  ]
}
```

This code requires the addition of the following variables:

```hcl
// Redis IP in the PN
variable "redis_ip" {
  default = "192.168.0.110/24"
}

variable "redis_username" {
  default = "kanta-dev"
}

variable "redis_instance_type" {
  default = "RED1-MICRO"
}
```

Our client wants to migrate to Kubernetes within the year. To smooth out their learning curve, especially with Terraform, we are first migrating their application to Instances. We therefore created two Instances to which the customer's Continuous Integration (CI) pipeline can push the application.

Since we want two identical Instances, we used the count meta-argument, which lets us create as many identical Instances as needed.

We started by creating a secondary disk for our Instances:

```hcl
// Secondary disk for each application instance
resource "scaleway_instance_volume" "app-data" {
  count      = var.app_scale
  project_id = scaleway_account_project.project.id
  name       = "${var.env_name}-app-data-${count.index}"
  size_in_gb = var.app_data_size
  type       = "b_ssd"
}
```

Then we added the Instance creation:

```hcl
// Application instance
resource "scaleway_instance_server" "app" {
  count      = var.app_scale
  project_id = scaleway_account_project.project.id
  name       = "${var.env_name}-app-${count.index}"
  image      = "ubuntu_jammy"
  type       = var.app_instance_type

  // Attach the instance to the Private Network
  private_network {
    pn_id = scaleway_vpc_private_network.pn.id
  }

  // Attach the secondary disk
  additional_volume_ids = [scaleway_instance_volume.app-data[count.index].id]

  // Simple user data, may be customized
  user_data = {
    cloud-init = <<-EOT
      #cloud-config
      runcmd:
        - apt-get update
        - reboot # Make sure the static DHCP reservation catches up
    EOT
  }
}
```

Then we reserved a fixed IP address for each Instance in our PN:

```hcl
// DHCP reservation for each application instance inside the Private Network
resource "scaleway_vpc_public_gateway_dhcp_reservation" "app" {
  count              = var.app_scale
  gateway_network_id = scaleway_vpc_gateway_network.vpc.id
  mac_address        = scaleway_instance_server.app[count.index].private_network.0.mac_address
  // 192.168.0.10, 192.168.0.11, ...
  ip_address = format("${var.private_cidr.network}.%d", (10 + count.index))
  // Depend on the application instances (not the bastion)
  depends_on = [scaleway_instance_server.app]
}
```

This code requires the following variables:

```hcl
// Application instances type
variable "app_instance_type" {
  default = "PRO2-XXS"
}

// Second disk size for the application instances
variable "app_data_size" {
  default = "40"
}

// Number of instances for the application
variable "app_scale" {
  default = 2
}
```

The app_scale variable determines the number of Instances we deploy.
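To connect to these machines through the Public Gateway SSH bastion, the team needs the gateway's public IP, the bastion port and the private addresses. A sketch of Terraform outputs exposing them, assuming the scaleway_vpc_public_gateway resource exposes a bastion_port attribute (as in recent versions of the Scaleway provider); the output names are hypothetical:

```hcl
// Public IP of the Public Gateway, entry point of the SSH bastion
output "bastion_gateway_ip" {
  value = scaleway_vpc_public_gateway_ip.gw_ip.address
}

// Port on which the Public Gateway SSH bastion listens (assumed attribute)
output "bastion_port" {
  value = scaleway_vpc_public_gateway.pgw.bastion_port
}

// Fixed private IP of the bastion machine
output "bastion_private_ip" {
  value = var.bastion_IP
}

// Fixed private IPs reserved for the application Instances
output "app_private_ips" {
  value = scaleway_vpc_public_gateway_dhcp_reservation.app[*].ip_address
}
```

After terraform apply, terraform output prints these values. The Scaleway documentation describes using the Public Gateway bastion as an SSH jump host, with a command of the form ssh -J bastion@GATEWAY_IP:BASTION_PORT root@PRIVATE_IP; check the user and port against the current documentation.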

In order to make the client application available, we now needed to create a Load Balancer (LB).

We started by reserving a public IP:

```hcl
// Reserve an IP for the Load Balancer
resource "scaleway_lb_ip" "app-lb_ip" {
  project_id = scaleway_account_project.project.id
}
```

Then we created the Load Balancer and attached it to our Private Network:

```hcl
// Load Balancer
resource "scaleway_lb" "app-lb" {
  project_id = scaleway_account_project.project.id
  name       = "${var.env_name}-app-lb"
  ip_id      = scaleway_lb_ip.app-lb_ip.id
  type       = var.app_lb_type

  // Attach the Load Balancer to the Private Network
  private_network {
    private_network_id = scaleway_vpc_private_network.pn.id
    dhcp_config        = true
  }
}
```

We then created a backend, attached to our Load Balancer, that forwards requests to port 80 of the Instances. For now it only uses a TCP health check, which we can refine once the application is functional and validated.

Since we used count when creating the Instances, we use the splat expression [*] here to add all of them:

```hcl
// Create the backend
resource "scaleway_lb_backend" "app-backend" {
  name             = "${var.env_name}-app-backend"
  lb_id            = scaleway_lb.app-lb.id
  forward_protocol = "tcp"
  forward_port     = 80
  // Add the IPs of all application instances as backend servers
  server_ips = scaleway_vpc_public_gateway_dhcp_reservation.app[*].ip_address
  health_check_tcp {}
}
```

Finally, we created our frontend, attached to our Load Balancer and listening on port 27017. This port will have to be changed when the application is ready to move to production.

```hcl
// Create the frontend
resource "scaleway_lb_frontend" "app-frontend" {
  name         = "${var.env_name}-app-frontend"
  lb_id        = scaleway_lb.app-lb.id
  backend_id   = scaleway_lb_backend.app-backend.id
  inbound_port = 27017
}
```

This code requires the following variable:

```hcl
variable "app_lb_type" {
  default = "LB-S"
}
```

To complete our infrastructure, we still needed an Object Storage bucket. This last part is the most complex: when we create an API key, we choose a default project, and the key always uses that project to access the Object Storage API.

We started by creating a new IAM application, which means that it will only have programmatic access to our resources.

```hcl
resource "scaleway_iam_application" "s3_access" {
  provider = scaleway
  name     = "${var.env_name}_s3_access"
  depends_on = [
    scaleway_account_project.project
  ]
}
```

We then attached a policy to it. As this is a development environment, we use a FullAccess policy, which grants the application far more rights than it needs. Since these rights are limited to our project, this is not a major concern, but this part will have to be changed before deploying to pre-production.

```hcl
resource "scaleway_iam_policy" "FullAccess" {
  provider       = scaleway
  name           = "FullAccess"
  description    = "Gives the application full access to all products in the project"
  application_id = scaleway_iam_application.s3_access.id
  rule {
    project_ids          = [scaleway_account_project.project.id]
    permission_set_names = ["AllProductsFullAccess"]
  }
  depends_on = [
    scaleway_iam_application.s3_access
  ]
}
```

We then created the application's API key, specifying the default project that will be used to create our Object Storage bucket.

```hcl
resource "scaleway_iam_api_key" "s3_access" {
  provider           = scaleway
  application_id     = scaleway_iam_application.s3_access.id
  description        = "API key used to manage the Object Storage bucket"
  default_project_id = scaleway_account_project.project.id
  depends_on = [
    scaleway_account_project.project
  ]
}
```

We could now define a new provider that uses this API key. As it is a secondary provider, we gave it an alias, s3_access, so that it is immediately recognizable.

In Terraform, when two configurations of the same provider are defined, the one without an alias is the default, and resources use it unless they set the provider argument.

```hcl
// We create a new provider using the API key created for our application
provider "scaleway" {
  alias           = "s3_access"
  access_key      = scaleway_iam_api_key.s3_access.access_key
  secret_key      = scaleway_iam_api_key.s3_access.secret_key
  zone            = "fr-par-1"
  region          = "fr-par"
  organization_id = "organization_id"
}
```

We could now create our bucket, specifying the provider we wanted to use:

```hcl
// Create the bucket
resource "scaleway_object_bucket" "app-bucket" {
  provider = scaleway.s3_access
  name     = "kanta-app-${var.env_name}"
  tags = {
    key = "bucket"
  }
  // Needed to create/destroy the bucket
  depends_on = [
    scaleway_iam_policy.FullAccess
  ]
}
```

As we have seen throughout the deployment, the variables file lets us centralize most of the changes needed to deploy the pre-production and production environments.
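As an illustration of how those variables would be used, here is a hypothetical prod.tfvars file. The values are examples only, not the settings chosen for Kanta; sizes and instance types would still need to be validated once the development environment is approved:

```hcl
// prod.tfvars: hypothetical values overriding the defaults in variables.tf
env_name = "prod"

// Replicated database instead of a standalone instance
database_is_ha    = true
database_username = "kanta-prod"

redis_username = "kanta-prod"

// Example value only: more application Instances behind the Load Balancer
app_scale = 3
```

The file would be passed with terraform plan -var-file=prod.tfvars (and the same flag on apply). Because the backend block cannot reference variables, the state key would also have to differ per environment, for example by removing key from the backend block and supplying it at initialization with terraform init -backend-config="key=prod/terraform.tfstate".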

The main modifications are the following:

Today, Kanta has begun experimenting with Scaleway, but their teams are still new to the platform. We will therefore support them in defining their architecture, through meetings with our Solutions Architect and Professional Services team, in order to reach an optimal solution that truly meets their needs.

As we have seen throughout the project, our support will also allow them to discover and implement infrastructure-as-code solutions such as Terraform in order to quickly and efficiently deploy Scaleway resources. In addition, thanks to the flexibility of Terraform, Kanta will be able to deploy its production and pre-production environments very efficiently, based on what we have already deployed, once the development environment has been validated.

Although the client would most likely have succeeded in migrating to Scaleway on their own, our support allowed them to avoid many iterations on their infrastructure.

Our mission, simplifying and accelerating migrations to Scaleway, has therefore been a success.

The Scale program allows the most ambitious companies, like Kanta, to be accompanied in the migration of their infrastructure to Scaleway, by benefiting from personalized technical support.

Kanta is a startup based in Caen, France, specializing in anti-money-laundering as a service for accountants. It offers an innovative SaaS tool that automates anti-money-laundering processes while ensuring compliance with industry standards and quality controls. With its solution, accountants can make their efforts to combat fraud, money-laundering and terrorist financing more efficient and reliable.
