
In this article, we are going to look at how to set up a data annotation platform for image and video files stored in Scaleway object storage, using the open-source CVAT tool.

We have all heard the phrase "data is the new oil". Data has certainly been fueling many recent technological advances, but the comparison goes further than that. Much like crude oil, data needs to be processed before it can be put to use. The processing stages typically include cleanup, various transformations, and, depending on the data and the use case, manual annotation. Demand for manual annotation is high in computer vision and natural language processing (NLP), that is, for images and text: the data formats that are most natural for humans, as opposed to structured data that is best viewed in table form. Manual data annotation is a time-consuming and expensive process. To make matters worse, deep artificial neural networks, the current state of the art in both computer vision and NLP, are the algorithms that require the largest amounts of training data. Efficient annotation tools with time-saving extrapolation features and other types of automation go a long way towards streamlining what is arguably the most crucial stage of a machine learning project's life cycle: building the training dataset.

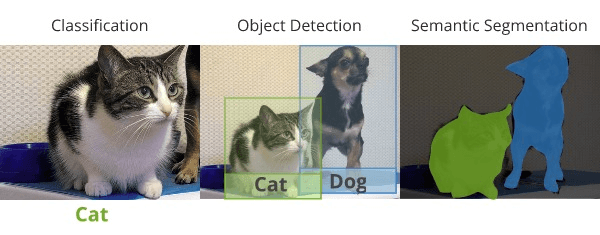

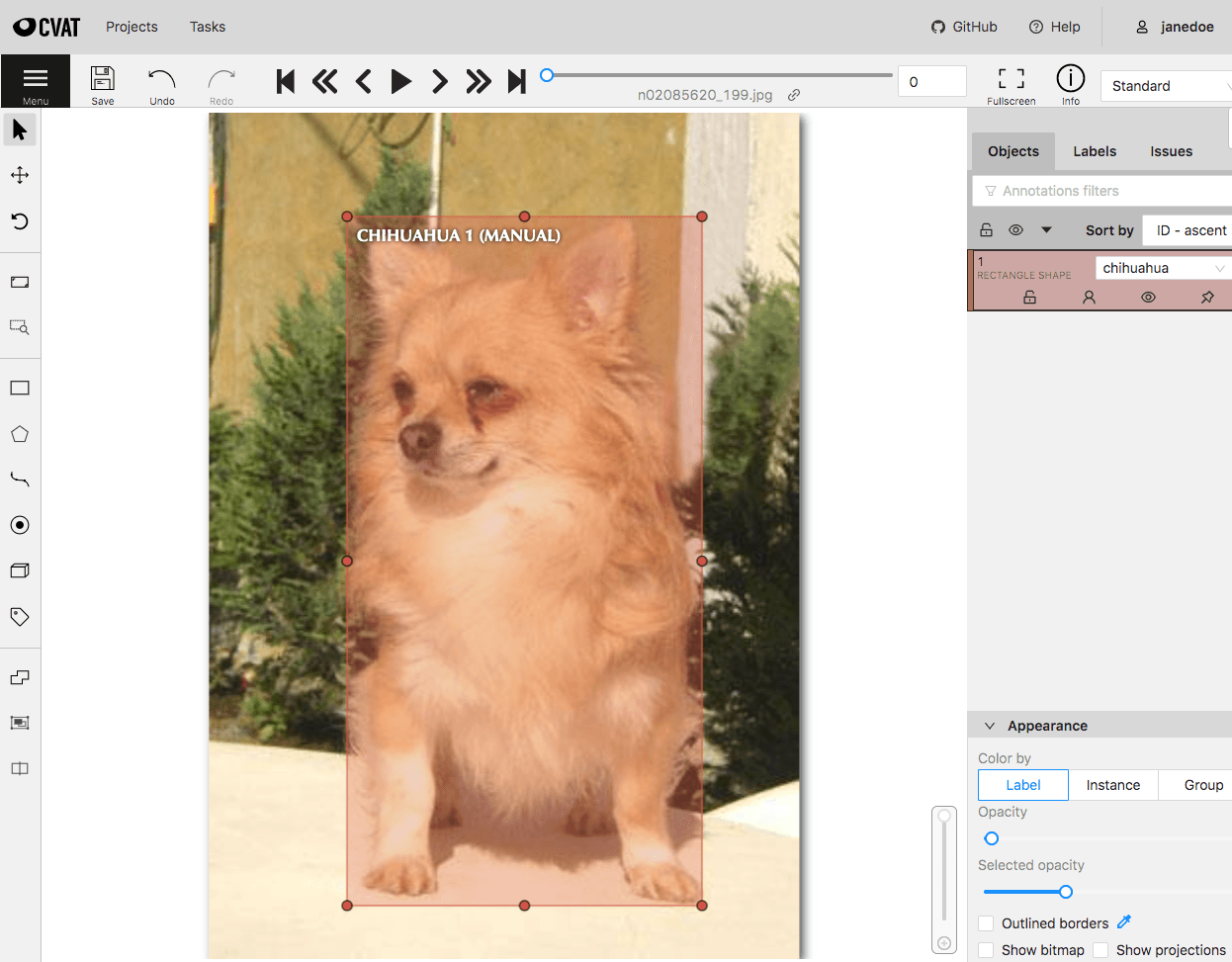

CVAT (short for Computer Vision Annotation Tool) is an open-source image and video annotation platform that came out of Intel. It supports the most common computer vision tasks: image-level classification, as well as object detection and image segmentation, where areas of interest in an image are selected via bounding boxes and polygonal (or pixel-wise) masks, respectively.

In addition to providing a Chrome-based annotation interface and basic user management features, CVAT cuts down on the number of manual annotations needed by automating part of the process. In this blog post, we are going to focus on how to install CVAT on the Scaleway public cloud.

The most straightforward way of running CVAT in the cloud would be to simply start an instance (a virtual machine hosted by the cloud provider) and follow the Quick Installation Guide in the CVAT documentation. Once it is installed, you can access CVAT by connecting to the instance through an SSH tunnel and going to localhost:8080 in Google Chrome. You can then upload images and videos from your local machine and proceed with the annotation just as you would with a local installation.
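For reference, the SSH tunnel mentioned above takes a single ssh command. The function below is a minimal sketch and a hypothetical convenience wrapper (cvat_tunnel is not part of CVAT). It assumes that you log in as root and that CVAT listens on port 8080 on the instance, as described above.

```shell
# Hypothetical helper (not part of CVAT): forward local port 8080 to CVAT on
# the instance, so that http://localhost:8080 on your machine reaches it.
# Assumes root login and CVAT listening on port 8080 on the instance.
cvat_tunnel() {
  local ip="$1" port="${2:-8080}"
  if [ -z "$ip" ]; then
    echo "usage: cvat_tunnel <instance-public-ip> [port]" >&2
    return 2
  fi
  # -N: run no remote command, only forward traffic
  # -L: listen on local port $port and forward it to localhost:$port on the instance
  ssh -N -L "${port}:localhost:${port}" "root@${ip}"
}
```

Run cvat_tunnel followed by your instance's public IP, then open localhost:8080 in Chrome. Press Ctrl+C to close the tunnel. Because of -N, ssh opens no remote shell and only forwards the port.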

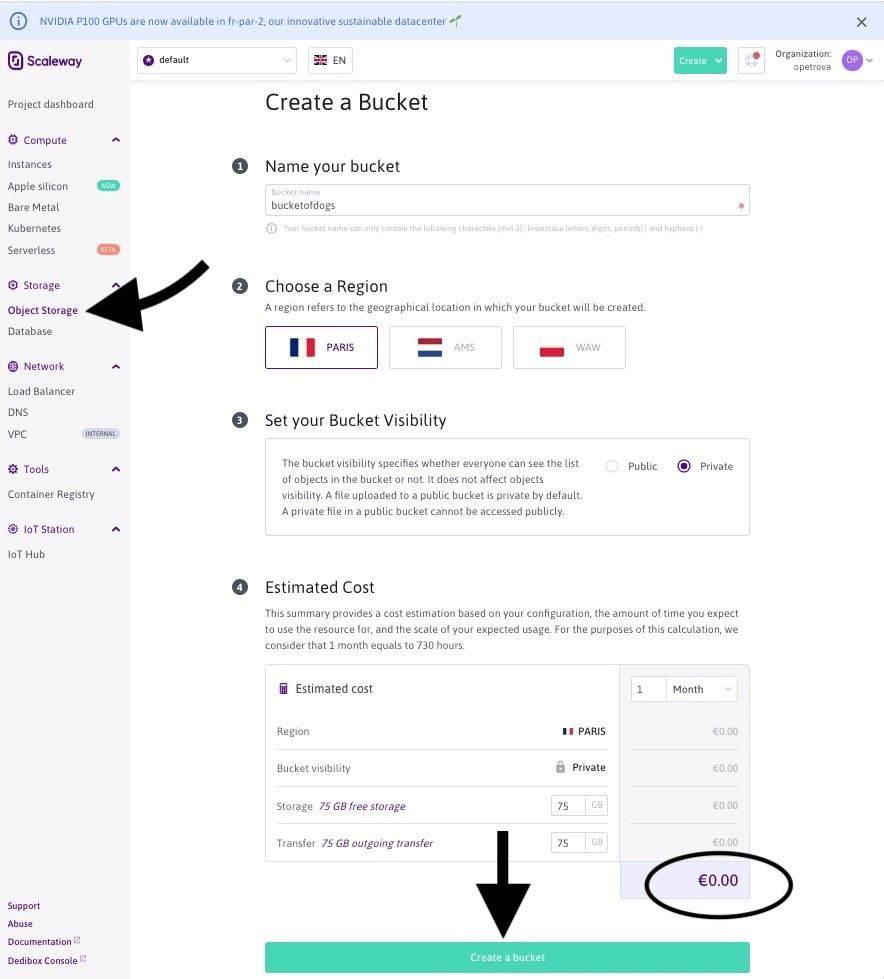

However, going about it in this decidedly un-cloud-like way brings you none of the advantages of cloud computing. First, think of your data storage. Computer vision projects require a lot of training data, so scalability and cost-efficiency are must-haves. Object storage has become the industry's method of choice for storing unstructured data. With virtually no limit on the size and number of files stored, generous free tiers (e.g. Scaleway offers 75GB of free object storage every month), and high redundancy to ensure the safety and availability of your data, it is hard to think of a better place to store "the new oil".

Depending on the size of your labeling workforce, you might also want to enable autoscaling of your annotation tool. For the time being, let us assume that your data annotation operation is small enough for a single instance running CVAT to suffice. Even so, you do not necessarily want to give every annotator SSH access to your instance. In the next section, we will also see how to avoid that.

As we have established in the previous section, there are two cloud resources that we need to take our data annotation to the next level: an object storage bucket and an instance. Here's a step-by-step guide to procuring them:

Create an object storage bucket from the Storage / Object Storage tab in the Scaleway console.

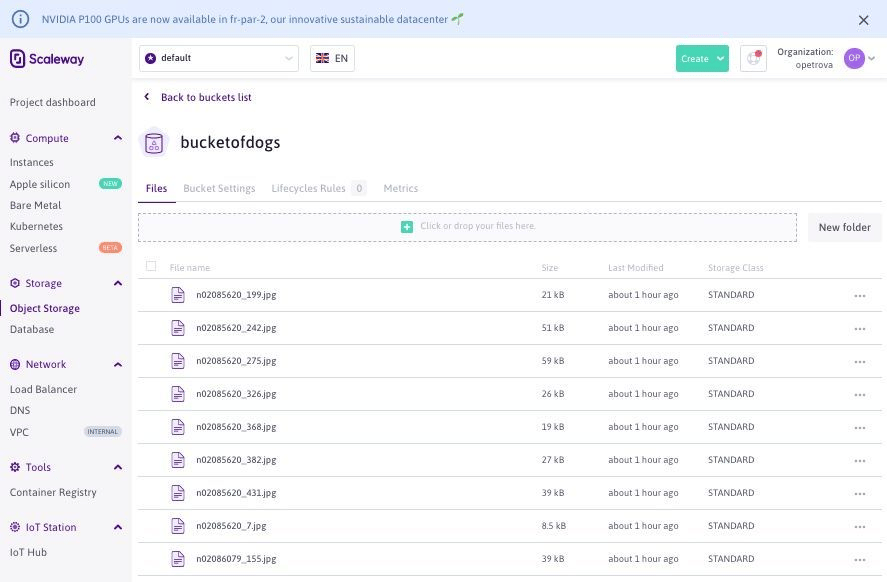

Once your bucket is created, you can add the files that you would like to have labeled, e.g. via the drag-and-drop interface available in the Scaleway console. Let us fill our bucketofdogs with some photos of, well, dogs:

One piece of information that you will need later on is the Bucket ID. It can be read off the Bucket Settings tab above, but it is in fact none other than the bucket's name (i.e. bucketofdogs in my example).
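If you prefer the command line to drag and drop, any S3-compatible client can also fill the bucket. The sketch below makes three assumptions:

- the AWS CLI is installed;
- your Scaleway access and secret keys are exported as AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY;
- the endpoint follows the https://s3.fr-par.scw.cloud pattern used later in this guide.

scw_s3_endpoint and upload_to_bucket are hypothetical helper names.

```shell
# Hypothetical helper: Scaleway's S3-compatible endpoint for a region
# (defaults to fr-par, the region used throughout this guide).
scw_s3_endpoint() {
  printf 'https://s3.%s.scw.cloud\n' "${1:-fr-par}"
}

# Hypothetical helper: recursively upload a local folder to a bucket with the
# AWS CLI. Assumes credentials are exported as AWS_ACCESS_KEY_ID and
# AWS_SECRET_ACCESS_KEY.
upload_to_bucket() {
  local src="$1" bucket="$2" region="${3:-fr-par}"
  if [ -z "$src" ] || [ -z "$bucket" ]; then
    echo "usage: upload_to_bucket <local-dir> <bucket-name> [region]" >&2
    return 2
  fi
  # The bucket ID is just the bucket name, so it goes straight into the URI
  aws s3 cp "$src" "s3://${bucket}/" --recursive \
    --region "$region" --endpoint-url "$(scw_s3_endpoint "$region")"
}
```

For example, upload_to_bucket ./dog-photos bucketofdogs would copy a (hypothetical) local dog-photos folder into bucketofdogs in the fr-par region.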

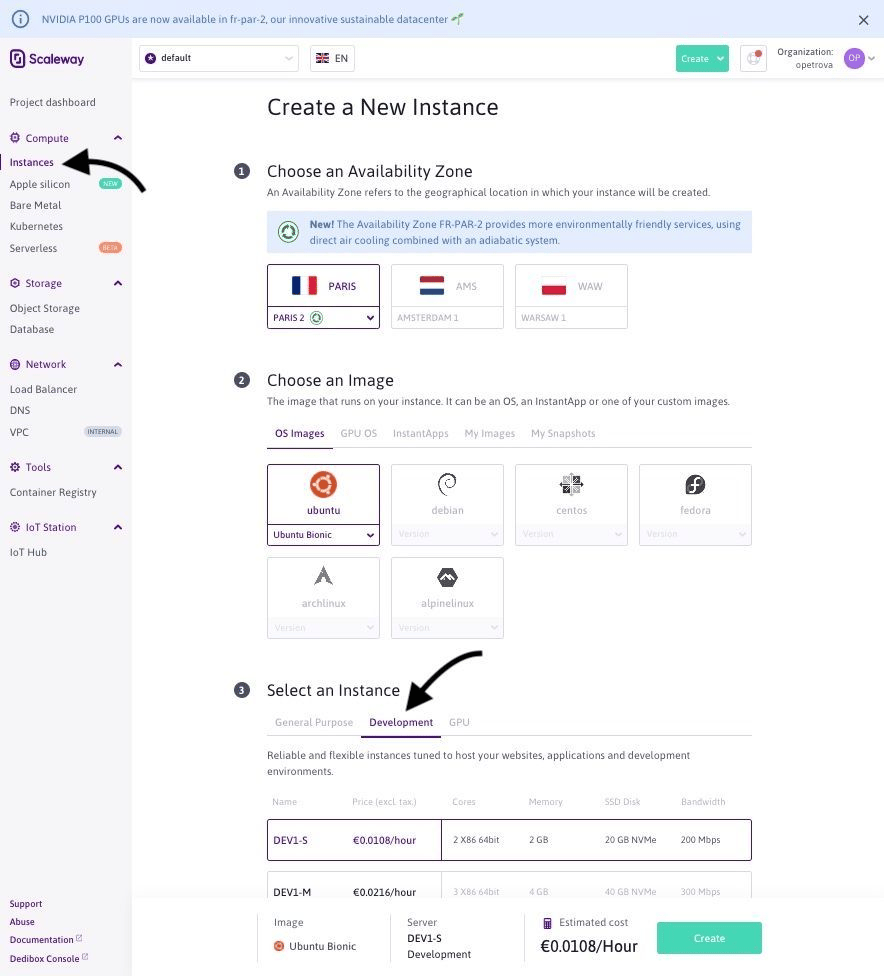

Now that our precious dog photos are safely stored in a fancy data center, we are going to need an instance. Scaleway offers a wide range of instances suited to different purposes. If you want to make use of CVAT's auto-annotation features (a topic for another blog post), I would advise you to get one of the high-end GPU instances. For the basic manual annotation use case, let us start with the dev range:

Once your instance is created, you will arrive at its Overview page, where, among other things, you will find your Instance ID. Make a note of it. On the same page, you will see the following SSH command: ssh root@[Public IP of your instance]. Use it to SSH into your instance and proceed with the installation of CVAT.
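On a fresh instance, it can help to see which of the tools used below are already installed. The function below is a hypothetical helper and not part of the official installation steps. It relies only on the POSIX command -v builtin and returns a non-zero status if anything is missing.

```shell
# Hypothetical helper: report which of the given tools are on the PATH.
# Prints one "found:"/"missing:" line per tool; returns 1 if any is missing.
check_prereqs() {
  local missing=0 tool
  for tool in "$@"; do
    if command -v "$tool" >/dev/null 2>&1; then
      echo "found:   $tool"
    else
      echo "missing: $tool"
      missing=1
    fi
  done
  return "$missing"
}
```

For example, check_prereqs git curl docker docker-compose s3fs prints one status line for each of the tools that the commands below rely on.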

Let us start with the prerequisites:

```shell
# Base packages, including s3fs for mounting the object storage bucket
sudo apt-get update
sudo apt-get --no-install-recommends install -y \
  apt-transport-https \
  ca-certificates \
  curl \
  gnupg-agent \
  software-properties-common \
  s3fs
# Add Docker's official repository and install Docker Engine
curl -fsSL https://download.docker.com/linux/ubuntu/gpg | sudo apt-key add -
sudo add-apt-repository \
  "deb [arch=amd64] https://download.docker.com/linux/ubuntu \
  $(lsb_release -cs) \
  stable"
sudo apt-get update
sudo apt-get --no-install-recommends install -y docker-ce docker-ce-cli containerd.io
# Install docker-compose through pip
sudo apt-get --no-install-recommends install -y python3-pip python3-setuptools
sudo python3 -m pip install pip --upgrade
sudo python3 -m pip install setuptools docker-compose
# Fetch the CVAT sources
git clone https://github.com/openvinotoolkit/cvat.git cvat
cd cvat
```

The next step is where the Bucket ID and the API key from the previous steps will come in handy:

```shell
# Mount the object storage bucket
SCW_BUCKET_ID=<your bucket ID from the bucket profile page>
SCW_ACCESS_KEY=<your access key found in credentials page in Scaleway console>
SCW_SECRET_KEY=<your secret key found in credentials page in Scaleway console>
FOLDER_TO_MOUNT=/mnt/s3-cvat
mkdir -p $FOLDER_TO_MOUNT
# s3fs reads credentials as ACCESS_KEY:SECRET_KEY from a file only you can read
echo "${SCW_ACCESS_KEY}:${SCW_SECRET_KEY}" > ${HOME}/.passwd-s3fs
chmod 600 ${HOME}/.passwd-s3fs
# Mount the bucket through Scaleway's S3-compatible endpoint in fr-par
s3fs ${SCW_BUCKET_ID} ${FOLDER_TO_MOUNT} \
  -o allow_other \
  -o passwd_file=${HOME}/.passwd-s3fs \
  -o use_path_request_style \
  -o endpoint=fr-par \
  -o parallel_count=15 \
  -o multipart_size=128 \
  -o nocopyapi \
  -o url=https://s3.fr-par.scw.cloud
# Make the mounted files read-only
sudo chmod 444 $FOLDER_TO_MOUNT/*
```

Now create a file called docker-compose.override.yml in the current cvat folder, substitute your instance ID below, and paste the following into it:

```yaml
version: '3.3'
services:
  cvat_proxy:
    environment:
      CVAT_HOST: <your instance ID from the instance information page>.pub.instances.scw.cloud
  cvat:
    environment:
      CVAT_SHARE_URL: 'Mounted from /mnt/share host directory'
    volumes:
      # Expose the s3fs-mounted bucket to CVAT as a read-only share
      - cvat_share:/home/django/share:ro
volumes:
  cvat_share:
    driver_opts:
      type: none
      device: /mnt/s3-cvat
      o: bind
```

All that is left now is to launch CVAT and create a superuser account (that is, the account that will have access to the Django administration panel of the CVAT site):

```shell
# Build and start the CVAT containers in the background, applying the override file
docker-compose -f docker-compose.yml -f docker-compose.override.yml up -d --build
# Create the admin account inside the running cvat container
docker exec -it cvat bash -ic 'python3 ~/manage.py createsuperuser'
```

You can access your shiny new CVAT server at `<your instance ID from the instance information page>.pub.instances.scw.cloud:8080`. You can either log in with the superuser account that you created, or create a new account and use it to log in:

Note: as of March 2021, the official CVAT distribution is configured so that newly created non-admin accounts immediately get access to all annotation tasks on the machine. These permissions are due to change in a future CVAT release. For now, you can use the workarounds described in GitHub issues 1030, 1114, 1283, and 2702. In the meantime, only share the link to your instance with people you are comfortable giving access to CVAT on your server.
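Because the first docker-compose run builds the images, CVAT may take a while before it answers on port 8080. Below is a minimal sketch for waiting on it. It assumes curl is installed on the machine you run it from, and it reuses the URL pattern given above. cvat_url and wait_for_cvat are hypothetical helper names.

```shell
# Hypothetical helper: public CVAT URL for a given Scaleway instance ID.
cvat_url() {
  printf 'http://%s.pub.instances.scw.cloud:8080\n' "$1"
}

# Hypothetical helper: poll a URL until it answers, up to $2 attempts
# (default 30), waiting 5 seconds between attempts. Assumes curl is installed.
wait_for_cvat() {
  local url="$1" tries="${2:-30}" i=1
  if [ -z "$url" ]; then
    echo "usage: wait_for_cvat <url> [tries]" >&2
    return 2
  fi
  while [ "$i" -le "$tries" ]; do
    # -s: no progress output; -f: HTTP errors (>= 400) count as failures;
    # --max-time: abandon a single request after 5 seconds
    if curl -sf -o /dev/null --max-time 5 "$url"; then
      echo "CVAT is up at $url"
      return 0
    fi
    i=$((i + 1))
    if [ "$i" -le "$tries" ]; then
      sleep 5
    fi
  done
  echo "no answer from $url after $tries attempts" >&2
  return 1
}
```

Use it as wait_for_cvat "$(cvat_url YOUR_INSTANCE_ID)", with your instance ID in place of the YOUR_INSTANCE_ID placeholder. Passing -f to curl means that HTTP error responses are treated as "not ready yet" rather than success.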

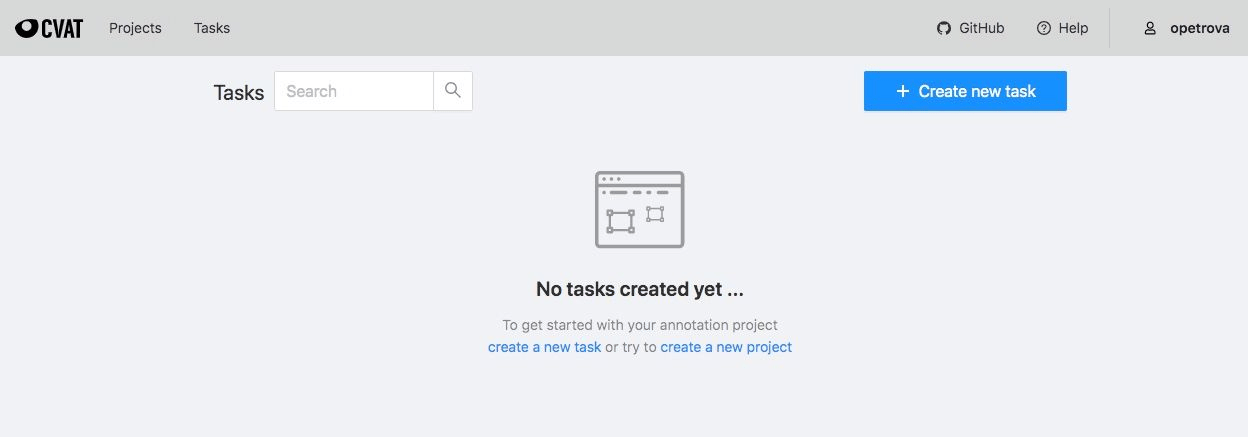

Once you log in, you will be able to see the list of annotation tasks that exist on your instance:

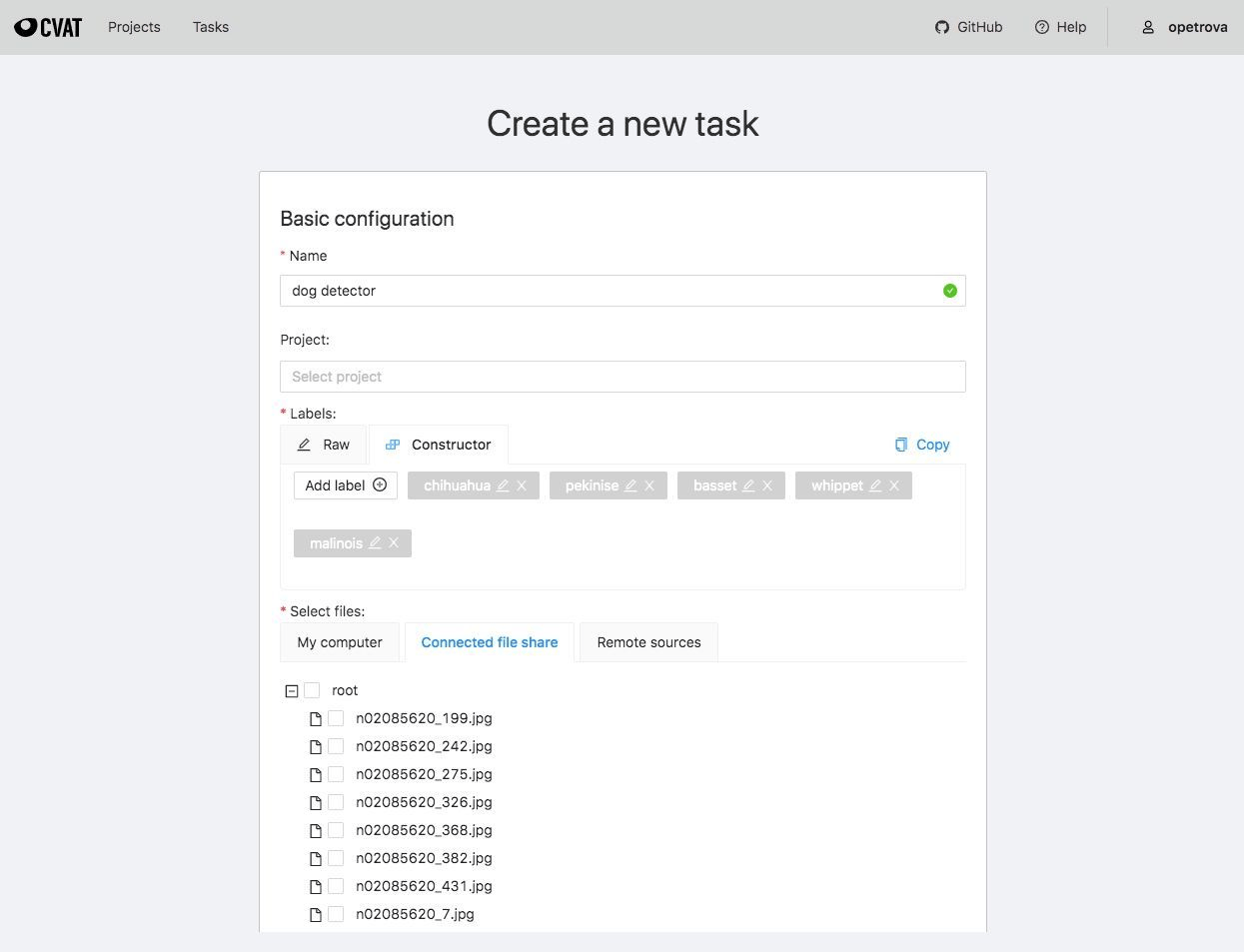

When you try to create a new task, you will find that the image files that you uploaded to your object storage bucket are accessible from the Connected file share tab:

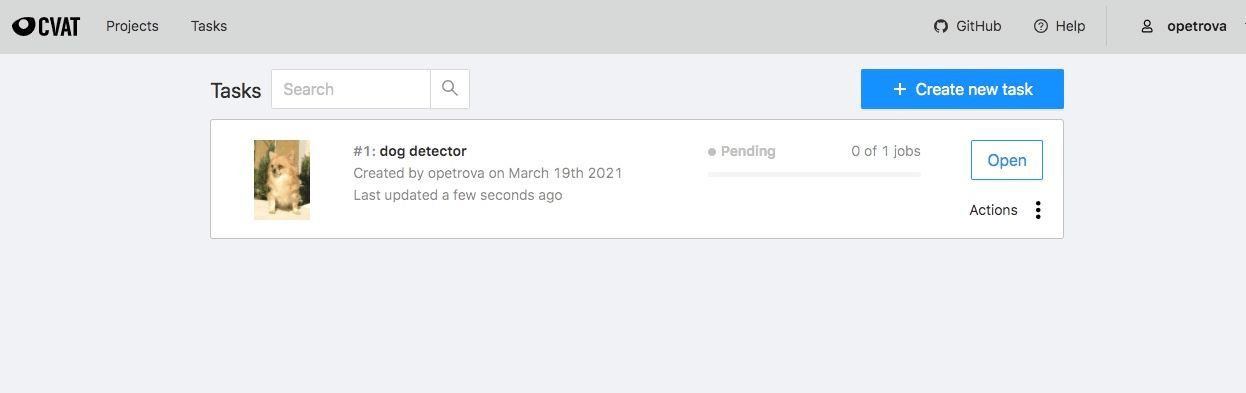
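If the bucket's files do not show up under Connected file share, check the s3fs mount on the host first. The helper below is a hypothetical troubleshooting sketch. It assumes the /mnt/s3-cvat mount point used above and the util-linux mountpoint command, which most Linux distributions ship.

```shell
# Hypothetical troubleshooting helper: verify that the bucket is mounted on
# the host and that the mount actually lists files.
# Assumes the /mnt/s3-cvat mount point and util-linux's mountpoint command.
check_share() {
  local dir="${1:-/mnt/s3-cvat}" count
  if ! mountpoint -q "$dir" 2>/dev/null; then
    echo "$dir is not a mount point; the s3fs mount is missing" >&2
    return 1
  fi
  # Count top-level entries in the mounted bucket (including hidden ones)
  count=$(ls -A "$dir" | wc -l)
  echo "$dir is mounted with $count top-level entries"
  # Succeed only if the mount is non-empty
  [ "$count" -gt 0 ]
}
```

To check the container side as well, docker exec cvat ls /home/django/share should list the same files, since the override file bind-mounts /mnt/s3-cvat at that path.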

The new task will now appear when you (or another user) log into CVAT:

Time to get labeling!

Who is a good dog [detector]?

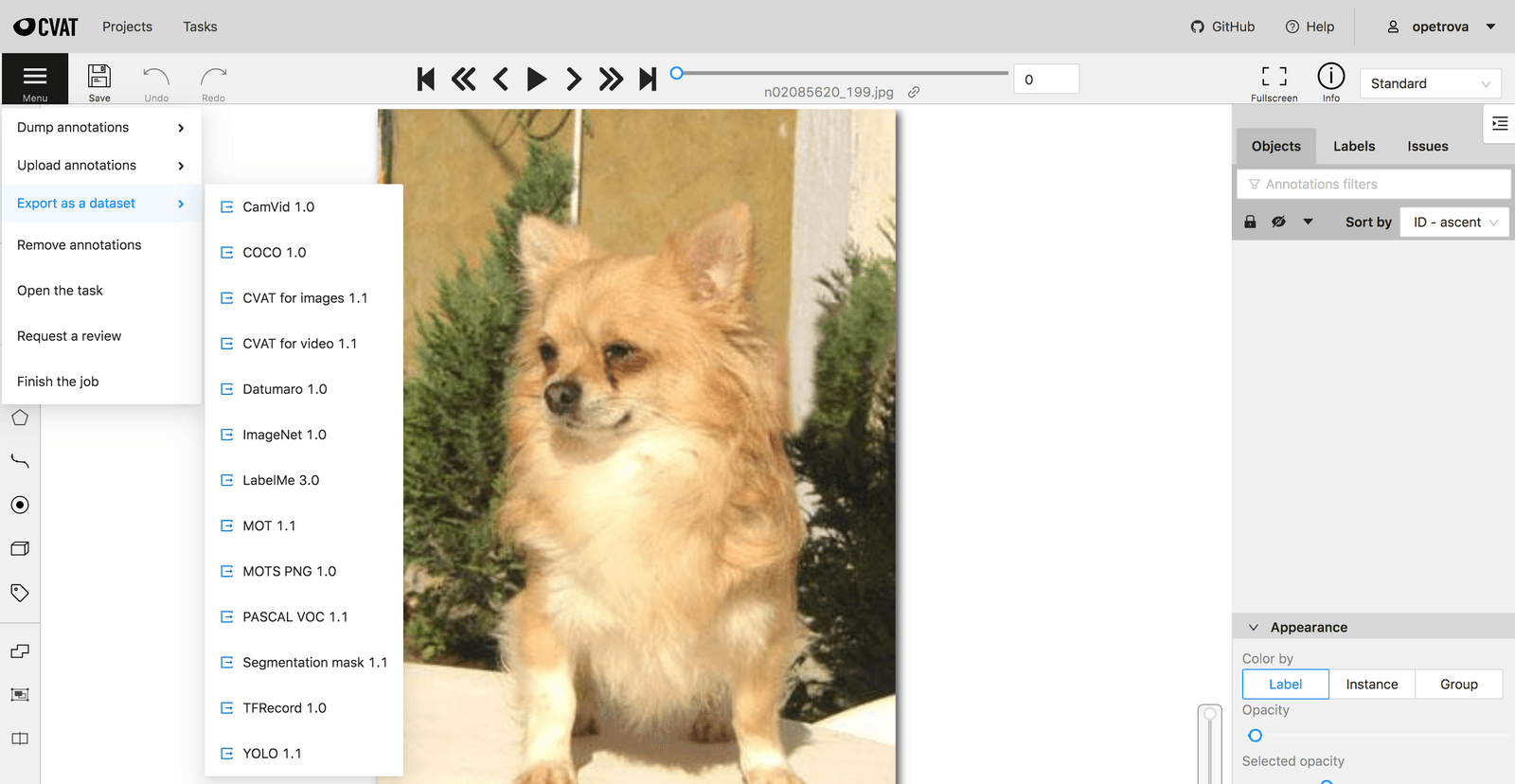

Once you are done labeling your dataset, you can export the annotations in the supported format of your choice:
