Understanding Kubernetes Autoscaling

Kubernetes provides a series of features to ensure your clusters have the right size to handle any load. Let's look into the different auto-scaling tools and learn the difference between them.

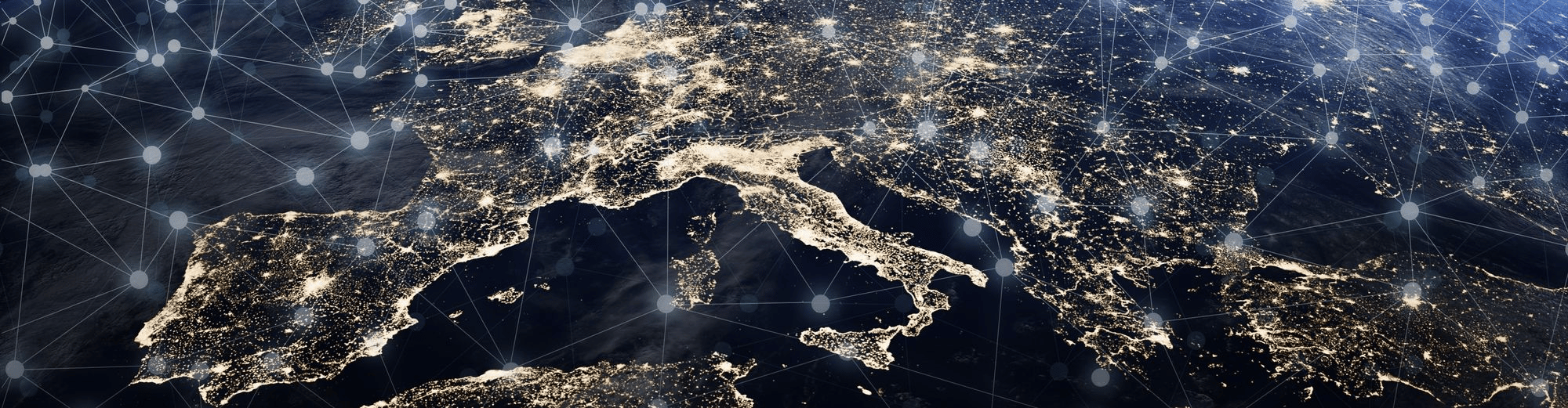

In this blog post, we attempt to demystify the topic of network latency. We take a look at the relationship between latency, bandwidth and packet drops and the impact of all this on "the speed of the internet". Next, we examine factors that contribute to latency including propagation, serialization, queuing and switching delays. Finally, we consider the more advanced topic of the relationship between latency and common bandwidth limitation techniques.

While the concept of bandwidth is easily understood by most IT engineers, latency has always been something of a mystery. At first glance, it might seem that it doesn't matter how long it takes for a chunk of data to cross the Atlantic Ocean, as long as we have enough bandwidth between the source and destination. What's the big deal if our 100GE link is one kilometer or one thousand kilometers long? It's still 100GE, isn't it?

This would be correct if we never experienced any packet loss in the network. Latency impact is all about flow and congestion control (which these days is mainly part of TCP). When one end sends a chunk of data to the other, it usually needs an acknowledgment that the data has been received by the other end. If no acknowledgment is received, the source end must resend the data. The greater the latency, the longer it takes to detect a data loss.

In fact, packet drops are part of the very nature of IP networks. Packets may be dropped even in a perfectly operating network that has no issues with congestion or anything else.

We can regard network latency as a buffer for the data "in flight" between the source and the destination, where packets could possibly disappear. The greater the latency, the larger this buffer is, and the more data is in flight. But while in flight, the data is like Schrödinger's cat: neither sender nor receiver knows whether it is safely traveling towards its destination or has already been dropped somewhere along the way. The larger the amount of data in this unknown state, the longer it takes to detect a loss event and recover from it, and consequently the lower the effective bandwidth between the source and destination.

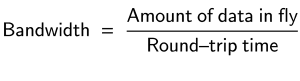

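The maximum theoretical bandwidth that can be achieved is the amount of data in flight (the TCP transmission window) divided by the round-trip time: maximum bandwidth = window size / RTT.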

As the Round Trip Time (RTT) cannot be changed for a given source/destination pair, if we want to increase the available bandwidth we have to increase the amount of data in flight. Technically speaking, to achieve a given bandwidth over a route with a given RTT (delay), the TCP transmission window (the amount of data in flight) must be equal to the so-called Bandwidth-Delay Product (BDP), that is, BDP = bandwidth × RTT.
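To make the relationship between window size, RTT and bandwidth concrete, here is a minimal Python sketch. The function names and the sample values (a 65,535-byte window, a 90 ms RTT, a 1 Gbit/s target) are illustrative assumptions, not figures taken from a specific network.

```python
def max_throughput_bps(window_bytes: float, rtt_s: float) -> float:
    """Upper bound on throughput when at most `window_bytes` can be in flight per RTT."""
    return window_bytes * 8 / rtt_s


def bdp_bytes(bandwidth_bps: float, rtt_s: float) -> float:
    """Bandwidth-Delay Product: the window needed to sustain `bandwidth_bps` over `rtt_s`."""
    return bandwidth_bps * rtt_s / 8


if __name__ == "__main__":
    # Assumed example: a classic 65,535-byte window over a 90 ms path.
    print(f"{max_throughput_bps(65_535, 0.090) / 1e6:.2f} Mbit/s")
    # Assumed example: window needed to fill 1 Gbit/s over the same path.
    print(f"{bdp_bytes(1e9, 0.090) / 1e6:.2f} MB")
```

Under these assumptions, a small fixed window caps throughput at a few megabits per second regardless of link speed, while filling a 1 Gbit/s path requires more than 11 MB in flight.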

So the bandwidth in packet networks comes at the cost of entropy (the amount of data in the unknown state being transported between the source and the destination) and is a linear function of network latency.

As noted above, packet drops are normal on the Internet due to the nature of packet networks. Modern, sophisticated congestion control schemes can quickly increase the amount of data in flight (the TCP transmission window) to large values in order to reach maximum bandwidth. However, when a drop is experienced, the time needed to detect that loss is still a function of latency.

Finally, all this translates into "the speed of the Internet". We can see the difference by downloading a 1GB file from a test server in Paris and then the same file in a version with 90 ms of additional simulated latency:

(You will need a good internet connection to carry out this test)

The following guidelines can be used to roughly estimate the impact of latency:

| RTT | User Experience |
|---|---|
| <30 ms | little or no impact on user experience |
| 30-60 ms | still OK, but noticeable for certain applications (gaming, etc.) |
| 60-100 ms | mostly acceptable, but users do start to feel it: websites a little slower, downloads not fast enough, etc. |
| 100-150 ms | users typically feel that "the Internet is slow" |
| >150 ms | "it works", but is not acceptable for most commercial applications these days |

These numbers are subjective and may vary for different applications and user groups. They also depend on the user's habitual location. For example, people living in New Zealand or North-East Russia are somewhat used to higher latency for the majority of internet resources. They can tolerate a "slower Internet", while US West Coast users, who are more used to a "fast Internet", are often unhappy with the RTT to European resources.

In modern networks, the primary source of latency is distance. This factor is also called propagation delay. The speed of light in a fiber is roughly 200,000 km per second, which gives us 5 ms per 1000 km single-direction and the mnemonic rule of 1 ms of round-trip time per 100 km.
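The rule of thumb above can be checked with a few lines of Python. This is a minimal sketch that uses only the 200,000 km/s figure from the text; the function names are illustrative, and it ignores every delay source other than propagation.

```python
FIBER_SPEED_KM_PER_S = 200_000  # approximate speed of light in fiber, from the text


def one_way_delay_ms(distance_km: float) -> float:
    """Propagation delay in one direction over `distance_km` of fiber."""
    return distance_km / FIBER_SPEED_KM_PER_S * 1000


def rtt_ms(distance_km: float) -> float:
    """Round-trip propagation delay: there and back again."""
    return 2 * one_way_delay_ms(distance_km)


if __name__ == "__main__":
    print(one_way_delay_ms(1000))  # one-way delay for 1000 km of fiber
    print(rtt_ms(100))             # round trip for 100 km of fiber
```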

However, fibers rarely follow as-the-crow-flies lines on the map, so the true distance is not always easy to estimate. While the routes of submarine cables are more or less straightforward, metro fiber paths in highly urbanized areas are anything but. And so, real-world RTT values can be roughly determined by coupling the above rule with the following considerations:

See the examples below.

Paris to New York

5,800 km, 58×1.5 = 87 ms of RTT

    par1-instance$ ping 157.230.229.24 -c 3
    PING 157.230.229.24 (157.230.229.24) 56(84) bytes of data.
    64 bytes from 157.230.229.24: icmp_seq=1 ttl=52 time=84.0 ms
    64 bytes from 157.230.229.24: icmp_seq=2 ttl=52 time=83.9 ms
    64 bytes from 157.230.229.24: icmp_seq=3 ttl=52 time=84.0 ms

    --- 157.230.229.24 ping statistics ---
    3 packets transmitted, 3 received, 0% packet loss, time 2002ms
    rtt min/avg/max/mdev = 83.992/84.015/84.040/0.237 ms

Paris to Singapore

11,000 km, 110×1.5 = 165 ms of RTT

    par1-instance$ ping 188.166.213.141 -c 3
    PING 188.166.213.141 (188.166.213.141) 56(84) bytes of data.
    64 bytes from 188.166.213.141: icmp_seq=1 ttl=49 time=158 ms
    64 bytes from 188.166.213.141: icmp_seq=2 ttl=49 time=158 ms
    64 bytes from 188.166.213.141: icmp_seq=3 ttl=49 time=158 ms

    --- 188.166.213.141 ping statistics ---
    3 packets transmitted, 3 received, 0% packet loss, time 2002ms
    rtt min/avg/max/mdev = 158.845/158.912/158.991/0.330 ms

Paris to Moscow

2,500 km, 25×2 = 50 ms of RTT

    par1-instance$ ping www.nic.ru -c 3
    PING www.nic.ru (31.177.80.4) 56(84) bytes of data.
    64 bytes from www.nic.ru (31.177.80.4): icmp_seq=1 ttl=52 time=50.5 ms
    64 bytes from www.nic.ru (31.177.80.4): icmp_seq=2 ttl=52 time=50.0 ms
    64 bytes from www.nic.ru (31.177.80.4): icmp_seq=3 ttl=52 time=50.3 ms

    --- www.nic.ru ping statistics ---
    3 packets transmitted, 3 received, 0% packet loss, time 2002ms
    rtt min/avg/max/mdev = 50.041/50.314/50.522/0.328 ms

Paris to Amsterdam

450 km, 4.5×2 = 9 ms of RTT

    par1-instance$ ping 51.15.53.77 -c 3
    PING 51.15.53.77 (51.15.53.77) 56(84) bytes of data.
    64 bytes from 51.15.53.77: icmp_seq=1 ttl=52 time=9.58 ms
    64 bytes from 51.15.53.77: icmp_seq=2 ttl=52 time=10.2 ms
    64 bytes from 51.15.53.77: icmp_seq=3 ttl=52 time=9.71 ms

    --- 51.15.53.77 ping statistics ---
    3 packets transmitted, 3 received, 0% packet loss, time 2003ms
    rtt min/avg/max/mdev = 9.589/9.852/10.250/0.308 ms
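The four estimates above can be compared with the measured averages in a short Python sketch. The distances, multipliers (1.5 and 2) and average RTTs are copied from the examples; the names `EXAMPLES`, `estimated_rtt_ms` and `compare` are illustrative, and the multiplier is treated simply as an input rather than derived.

```python
# (route, straight-line distance in km, multiplier used in the text, measured avg RTT in ms)
EXAMPLES = [
    ("Paris to New York", 5_800, 1.5, 84.015),
    ("Paris to Singapore", 11_000, 1.5, 158.912),
    ("Paris to Moscow", 2_500, 2.0, 50.314),
    ("Paris to Amsterdam", 450, 2.0, 9.852),
]


def estimated_rtt_ms(distance_km: float, multiplier: float) -> float:
    """1 ms of RTT per 100 km, scaled by a multiplier for the real fiber path."""
    return distance_km / 100 * multiplier


def compare(examples):
    """Return (route, estimate, measured, estimate - measured) for each example."""
    rows = []
    for route, km, multiplier, measured in examples:
        estimate = estimated_rtt_ms(km, multiplier)
        rows.append((route, estimate, measured, estimate - measured))
    return rows


if __name__ == "__main__":
    for route, estimate, measured, diff in compare(EXAMPLES):
        print(f"{route:20s} estimated {estimate:6.1f} ms, "
              f"measured {measured:7.3f} ms, difference {diff:+.1f} ms")
```

For these four routes the estimate stays within a few milliseconds of the measured value, which is about as much as a rule of thumb can promise.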

While distance is the main source of latency, other factors can also delay data propagation within modern networks.

The second most crucial latency factor is the so-called serialization delay. Some time ago it was actually competing with distance for first prize, but nowadays it is becoming less significant.

Both end-point and intermediate devices (routers, switches) are ultimately just computers, which store data chunks in memory before sending them to the transmission media (e.g. optical fiber, copper wires, or radio). In order to send these packets to the network interfaces, computers need to serialize them, i.e. encode the data bits into a sequence of electromagnetic signals, suitable for transmission over the interface media at a constant rate. The time the data spends in a buffer before it gets sent down the wire is called serialization delay.

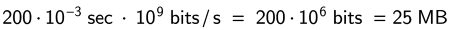

Let's imagine that we have a 1500-byte Ethernet frame which needs to be sent down a 1GE interface: 1500 bytes × 8 bits per byte ÷ 1,000,000,000 bits per second = 0.000012 seconds.

12 µs (microseconds) are needed to put a 1500-byte frame onto the wire using a 1GE interface. For 1GB of data the time will be around 8 seconds in total. This is the time the data spends in buffers in order to get signaled to the transmission media. The higher the interface bandwidth, the lower the serialization delay.
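The arithmetic behind these figures is a single division, sketched here in Python. The function name is illustrative, and the 10 Mbit/s rate in the last line is an assumed stand-in for a slower access link, not a value from the text.

```python
def serialization_delay_s(size_bytes: float, rate_bps: float) -> float:
    """Time needed to clock `size_bytes` onto a link running at `rate_bps`."""
    return size_bytes * 8 / rate_bps


if __name__ == "__main__":
    GIGABIT = 1e9
    # One 1500-byte frame on a 1GE interface, in microseconds.
    print(f"{serialization_delay_s(1500, GIGABIT) * 1e6:.0f} µs")
    # 1 GB of data on the same interface, in seconds.
    print(f"{serialization_delay_s(1e9, GIGABIT):.0f} s")
    # The same frame on an assumed 10 Mbit/s link, in milliseconds.
    print(f"{serialization_delay_s(1500, 10e6) * 1e3:.1f} ms")
```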

The opposite is true, as well. For lower-rate interfaces like xDSL, WiFi, and others, the serialization delay will be higher and so this factor becomes more significant.

Queuing Delay

The astute reader might notice that a 48-byte ICMP Echo Request packet (plus the Ethernet overhead) should take much less time to get serialized than a 1500-byte frame. This is correct. However, each link is usually used for multiple data transfers "at the same time", so a ping via an "empty" ADSL or WiFi link will give very different RTT values than a ping in parallel with a large file download.

This phenomenon is known as queuing delay. In the era of low-speed interfaces, it severely affected the small packets used by latency-sensitive applications like VoIP when they were transmitted along with large packets carrying file transfers. While a large packet is being transferred, the smaller packets behind it are delayed in the queue.
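A rough Python sketch of this effect: the time a small packet waits is the serialization time of everything queued ahead of it. The 1 Mbit/s link rate is an assumed example of a slow uplink, and the function name is illustrative.

```python
def queuing_delay_ms(bytes_ahead: float, link_rate_bps: float) -> float:
    """Time a packet waits while `bytes_ahead` are serialized in front of it."""
    return bytes_ahead * 8 / link_rate_bps * 1000


if __name__ == "__main__":
    # A small VoIP packet stuck behind one 1500-byte frame.
    print(f"slow link (assumed 1 Mbit/s): {queuing_delay_ms(1500, 1e6):.1f} ms")
    print(f"1GE link:                     {queuing_delay_ms(1500, 1e9):.3f} ms")
```

On the assumed slow link a single full-size frame already adds 12 ms, while on a 1GE interface the same frame adds only 12 µs.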

Typically, today's home and mobile internet users experience somewhere from 5 to 10 milliseconds of latency added by their home WiFi and last-mile connection, depending on the particular technology.

Serialization and queuing delays can be compared with what people experience when getting onto an escalator. The escalator is moving at a constant speed, which can be compared with the interface bit-rate, and the crowd just behind it is the buffer. You can notice that even if there is nobody around, when a group of 3 or 4 people comes to the escalator they need to wait for each other to get onto it. A similar phenomenon can be observed on highway exits where vehicles must slow down. During busy hours this creates traffic jams.

Sticking with this analogy, we can imagine that you want to drive from your home to some place 1000 km away. The following factors will contribute to the total time it takes you to make this trip:

Switching delay is something of a false latency factor in real-life computer networking. Once a router receives a packet, a forwarding decision must be taken in the router data plane in order to choose the next interface to which the packet should be sent. The router extracts bits from the packet header and uses them as a key to perform the forwarding lookup. In fact, modern packet switching chipsets perform this lookup within sub-microsecond times. Most modern switches and routers operate at near wire rate, which means that they can perform as many forwarding lookups per second as the number of packets they can receive on their interfaces. There might be special cases and exceptions, but generally when a router cannot perform enough lookups to make a forwarding decision for all arriving packets, those packets are dropped rather than delayed. So in practical applications, the switching delay is negligible, as it is several orders of magnitude lower than the propagation delay.

Nevertheless, some vendors still manage to position their products as "low-latency", specifically highlighting switching delay.

Traditionally in residential broadband (home internet) and B2B access networks, the overall bandwidth per subscriber was determined by the access interface bit-rate (ADSL, DOCSIS, etc). However, Ethernet is now becoming more and more popular. In some countries where the legacy last-mile infrastructure was less developed, and aerial links were not prohibited in communities, copper Ethernet became the primary access technology used by broadband ISPs. In datacenter networking we also use 1GE, 10GE, 25GE, and higher rate Ethernet as access interfaces for servers. This creates a problem of artificial bandwidth limitation, where bandwidth must be limited to that which is stated in the service agreement.

Two main techniques exist to achieve this goal.

The traffic shaping technique is based on the so-called leaky bucket algorithm, which delays packets in a buffer in order to force flow-control mechanisms to decrease the amount of data in flight and therefore reduce the effective bandwidth. When the buffer gets full, packets are dropped, forcing TCP to slow down further.

As such, this technique effectively reduces bandwidth by increasing the queuing delay, which consequently increases end-to-end latency. Residential broadband Internet subscribers or B2B VPN customers can often observe an additional delay in the order of several milliseconds which is added by their ISP's broadband access router in order to enforce service agreement bandwidth rate.
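The behavior described above can be sketched as a toy shaper in Python: a FIFO buffer drained at a constant rate, which delays packets while there is room and drops them once the buffer is full. This is a simplified model under stated assumptions (the packet being transmitted counts towards the buffer, and the function name and sample values are hypothetical), not the implementation used by any particular router.

```python
def shape(arrivals, rate_bps, buffer_bytes):
    """Simulate a leaky-bucket shaper.

    arrivals: list of (arrival_time_s, size_bytes), sorted by time.
    Returns one entry per packet: the delay added in seconds, or None if dropped.
    """
    results = []
    link_free_at = 0.0  # time at which the buffer will be fully drained
    for arrival_time, size in arrivals:
        # Bytes still waiting in the buffer when this packet arrives.
        backlog_s = max(0.0, link_free_at - arrival_time)
        backlog_bytes = backlog_s * rate_bps / 8
        if backlog_bytes + size > buffer_bytes:
            results.append(None)  # buffer full: the packet is dropped
            continue
        start = max(arrival_time, link_free_at)
        link_free_at = start + size * 8 / rate_bps
        results.append(link_free_at - arrival_time)
    return results


if __name__ == "__main__":
    # Three 1250-byte packets arriving at once, shaped to an assumed 1 Mbit/s
    # with room for two packets in the buffer.
    burst = [(0.0, 1250), (0.0, 1250), (0.0, 1250)]
    print(shape(burst, rate_bps=1e6, buffer_bytes=2500))
```

In this run the first two packets are delayed by roughly 10 ms and 20 ms, and the third is dropped, which is the combination of added latency and loss that pushes TCP to shrink its window.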

Policing is considered more straightforward than shaping, and is cheaper to implement in hardware as it doesn't require any additional buffer memory. It is based on the so-called token bucket algorithm and employs the notion of a burst. When the amount of transferred data exceeds a certain configured limit, packets get dropped, and the burst counter is incremented at a constant rate corresponding to the desired bandwidth.
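A minimal token bucket policer might look like the following Python sketch. The class and method names are hypothetical, time is passed in explicitly to keep the example deterministic, and real hardware implementations differ in many details.

```python
class TokenBucketPolicer:
    """Toy single-rate policer: conforming packets pass, the rest are dropped."""

    def __init__(self, rate_bps: float, burst_bytes: float):
        self.rate_bytes_per_s = rate_bps / 8
        self.burst_bytes = burst_bytes
        self.tokens = float(burst_bytes)  # the bucket starts full
        self.last_time_s = 0.0

    def allow(self, now_s: float, size_bytes: int) -> bool:
        # Refill at a constant rate, never beyond the configured burst size.
        elapsed = now_s - self.last_time_s
        self.tokens = min(self.burst_bytes,
                          self.tokens + elapsed * self.rate_bytes_per_s)
        self.last_time_s = now_s
        if size_bytes <= self.tokens:
            self.tokens -= size_bytes
            return True   # packet conforms and is forwarded
        return False      # not enough tokens: packet is dropped, nothing is buffered


if __name__ == "__main__":
    # Assumed values: 8 kbit/s (1000 bytes/s) with a 3000-byte burst.
    policer = TokenBucketPolicer(rate_bps=8_000, burst_bytes=3_000)
    for t in (0.0, 0.0, 0.0, 1.5):
        print(t, policer.allow(t, 1500))
```

Unlike the shaper, nothing is queued here: a packet either finds enough tokens or is discarded on the spot, which is why the burst size matters so much.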

Choosing the right value for the policer burst size has always been a mystery for network engineers, leading to many misconfigurations and mistakes. While many guides still recommend setting the burst size to the amount of data transferred in 5 or 10 ms at the outgoing interface rate, this recommendation is not applicable to modern high-speed interfaces of 10GE, 100GE and higher. This is because it is based on the hypothesis that the serialization delay is the most important factor in the overall end-to-end latency. As discussed above, this hypothesis no longer holds, as interface rates have risen dramatically during the last decade, meaning that the most important latency factor now is propagation delay.

So if we want a customer to be able to communicate with internet resources that are, let's say, 200 ms away, the policer burst size must be equal to or greater than the amount of data transferred at the desired rate in 200 ms. If we want to limit the customer's bandwidth to 1 Gbps, the burst size works out as follows: 1,000,000,000 bits per second × 0.2 seconds = 200,000,000 bits, or 25 MB.
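The contrast between the old 5 ms rule of thumb and sizing for a 200 ms RTT can be sketched in Python. The function names are illustrative; the rate and RTT values come from the text.

```python
def burst_size_bytes(rate_bps: float, rtt_s: float) -> float:
    """Burst needed so a flow policed at `rate_bps` can sustain a path with `rtt_s`."""
    return rate_bps * rtt_s / 8


def max_rtt_ms(burst_bytes: float, rate_bps: float) -> float:
    """Largest RTT a given burst size can accommodate at the policed rate."""
    return burst_bytes * 8 / rate_bps * 1000


if __name__ == "__main__":
    rate = 1e9  # 1 Gbps service rate
    legacy = burst_size_bytes(rate, 0.005)  # the 5 ms rule of thumb
    remote = burst_size_bytes(rate, 0.200)  # resources 200 ms away
    print(f"5 ms burst:   {legacy / 1e6:.3f} MB")
    print(f"200 ms burst: {remote / 1e6:.0f} MB")
    print(f"a 5 ms burst only covers RTTs up to {max_rtt_ms(legacy, rate):.0f} ms")
```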

Setting the burst size at less than this amount will impact communication with Internet resources hosted far away from customers. This is a known recurrent problem in some regions which are rather remote from major internet hubs: if an ISP somewhere in Kamchatka or South America sets a burst size which doesn't accommodate those 200-250 milliseconds of traffic, their customers have trouble communicating with resources hosted in Frankfurt or Seattle. The same applies to hosting providers: lower policer burst sizes applied to these servers will make their services unusable for end-users on remote continents.
