Object Storage - What Is It? (1/3)

In this series of articles, we will start with a broad overview of the Object Storage technology currently in production at Scaleway.

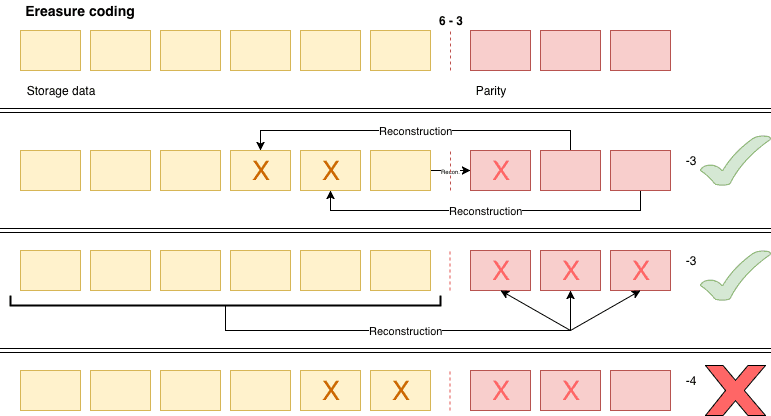

Object Storage uses erasure coding (EC), which ensures that your data is safer within our cluster than on your own hard disk.

This technology allows us to store your file across several hard disks, so that we can tolerate the loss of one or more of them. In addition, we can spread the workload among several disks to reduce the risk of saturation.

We split your file into several parts and compute additional parts, called parities.

Each parity is computed from the original parts, which makes it possible to rebuild any part of the file if it is lost or corrupted. Lost parities can likewise be recomputed from the parts of the file using matrix computation. You can think of parities as spare pieces for rebuilding your file :)
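To make the idea of a "spare piece" concrete, here is a minimal sketch using the simplest possible scheme: a single XOR parity over four data parts. This is an assumption made for illustration only. It is not the matrix-based erasure code described above, and it tolerates the loss of just one part, but the rebuild principle is the same.

```python
from functools import reduce


def xor_bytes(a: bytes, b: bytes) -> bytes:
    """XOR two equal-length byte strings."""
    return bytes(x ^ y for x, y in zip(a, b))


def split(data: bytes, k: int) -> list[bytes]:
    """Split data into k equal-size parts, zero-padding the last one."""
    size = -(-len(data) // k)  # ceiling division
    padded = data.ljust(size * k, b"\0")
    return [padded[i * size:(i + 1) * size] for i in range(k)]


def parity(parts: list[bytes]) -> bytes:
    """Compute one parity part as the XOR of all given parts."""
    return reduce(xor_bytes, parts)


data = b"object storage!!"   # 16 bytes of example data
parts = split(data, 4)       # 4 data parts
p = parity(parts)            # 1 parity part

# Simulate losing data part 2, then rebuild it from the survivors and the parity.
survivors = parts[:2] + parts[3:]
rebuilt = parity(survivors + [p])
assert rebuilt == parts[2]
```

Tolerating several simultaneous losses requires several independent parities, which is where the matrix computation comes in.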

These parities add storage overhead, which is the price of reliability. Of course, you are not charged for them: you only pay for the data parts. The number of original file parts and the number of parities are the main characteristics of an erasure code.

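For example, consider two erasure codes: 6+3 (6 data parts and 3 parities) and 12+3 (12 data parts and 3 parities).

In each of these two examples, you would have to lose 4 parts before the file could no longer be rebuilt.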
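The trade-off between the two rules can be worked out with a few lines of arithmetic. The sketch below assumes the two examples are the 6+3 and 12+3 rules named in the text, written as k data parts plus m parities. The function name `ec_profile` is only illustrative.

```python
def ec_profile(k: int, m: int) -> dict:
    """Summarize a k+m erasure code (k data parts, m parities)."""
    return {
        "rule": f"{k}+{m}",
        "overhead": m / k,              # extra storage relative to the data
        "stored_per_gb": (k + m) / k,   # raw GB stored for 1 GB of data
        "tolerated_losses": m,          # parts that can be lost safely
        "losses_to_fail": m + 1,        # lost parts that make the file unrecoverable
    }


for k, m in [(6, 3), (12, 3)]:
    print(ec_profile(k, m))
```

With these definitions, 6+3 carries 50% overhead and 12+3 carries 25%, while both survive the loss of 3 parts.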

But why not use the 12+3 rule if it generates less overhead?

The answer is statistics: the wider the code, the larger the share of the disks in your set that hold parts of each file.

Let's say you have a set of 100 disks. With 6+3, 9% of the disks are involved in storing your file, while with 12+3, 15% of the disks are involved.

So when a second disk fails, it is statistically more likely to hold a part of the same file with 12+3 than with 6+3.

You are therefore statistically more likely to lose data with 12+3 than with 6+3.
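The statistical argument can be checked with a simplified model. The sketch below assumes one part per disk, a set of 100 disks, and 4 disks failing at random at the same time. Real clusters repair lost parts between failures, so the absolute numbers are not meaningful; only the comparison between the two rules is.

```python
from math import comb


def share_of_disks(k: int, m: int, total_disks: int) -> float:
    """Fraction of the disk set that holds a part of one file."""
    return (k + m) / total_disks


def loss_probability(k: int, m: int, total_disks: int, failed: int) -> float:
    """Chance that `failed` random disk failures destroy more than m parts of one file."""
    n = k + m
    hits = sum(
        comb(n, j) * comb(total_disks - n, failed - j)
        for j in range(m + 1, min(n, failed) + 1)
    )
    return hits / comb(total_disks, failed)


for k, m in [(6, 3), (12, 3)]:
    share = share_of_disks(k, m, 100)
    risk = loss_probability(k, m, 100, failed=4)
    print(f"{k}+{m}: {share:.0%} of disks, loss probability {risk:.2e}")
```

Under these assumptions, the probability of losing a given file is roughly ten times higher with 12+3 than with 6+3, even though both rules tolerate the same number of lost parts.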

We use different types of erasure coding. We even have x3 replication, i.e. your data is stored in its entirety 3 times.

This is equivalent to storing 3 GB for every 1 GB sent by our customers.

We use different rules that prioritize IOPS (input/output operations per second on the storage media), so that the experience is improved for everyone.

Now that we have seen how erasure coding works, let's see what happens when you send an object to the object storage.

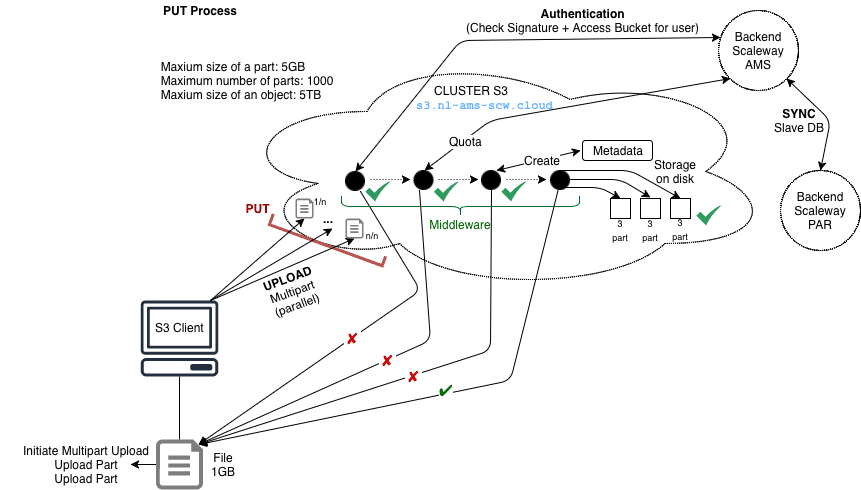

A typical Object Storage compatible tool such as rclone supports Multipart Upload. This mechanism splits your file into many smaller parts, which are sent independently to the object storage using the Amazon S3 protocol. That way, when data is corrupted during the transfer, only the corrupted part is resent to the object storage, not the complete file.

A smaller file can be sent in a single part.

There are some limits on the number of parts and on the size of each part.

With up to 1,000 parts of up to 5 GB each, the maximum size of a stored object is 1000 * 5 GB = 5 TB.
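As an illustration of how a client might cut a file into parts within these limits, here is a minimal sketch. The two constants are read off the formula above (1,000 parts of 5 GB, with decimal units assumed), and `plan_parts` is a hypothetical helper, not a function of rclone or of any S3 client.

```python
MAX_PARTS = 1000             # maximum number of parts, from the formula above
MAX_PART_SIZE = 5 * 10**9    # 5 GB per part, decimal units assumed


def plan_parts(object_size: int, part_size: int) -> list[tuple[int, int, int]]:
    """Return (part_number, offset, length) for each part of an upload."""
    if object_size <= 0:
        raise ValueError("object size must be positive")
    if not 0 < part_size <= MAX_PART_SIZE:
        raise ValueError("part size out of range")
    count = -(-object_size // part_size)  # ceiling division
    if count > MAX_PARTS:
        raise ValueError("too many parts, use a larger part size")
    return [
        (n + 1, n * part_size, min(part_size, object_size - n * part_size))
        for n in range(count)
    ]


# A 250-byte object cut into 100-byte parts: two full parts and a shorter last one.
print(plan_parts(250, 100))
```

Only the last part may be shorter than the others, and an object that fits within a single part is sent as one part.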

Your Amazon S3 compatible client computes a signature for each part before sending it, so that we can check on receipt that the part has the same fingerprint.

If the signature does not match the one issued by your client, our cluster asks you to re-upload the corrupted part.

This retry behavior is configurable in each Amazon S3 compatible client. In case of a failure or a brief unavailability of our cluster, it lets the client restart the operation without aborting the current upload.
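The check-and-retry exchange described above can be sketched as follows. This is a simulation under stated assumptions: the fingerprint is a plain SHA-256 digest, and `receive`, `upload_part` and `flaky_channel` are hypothetical names. Real S3 compatible clients have their own integrity mechanisms and retry settings.

```python
import hashlib


def digest(part: bytes) -> str:
    """Fingerprint of a part (SHA-256 is an assumption made for this sketch)."""
    return hashlib.sha256(part).hexdigest()


def receive(part: bytes, expected: str) -> bool:
    """Server side: accept the part only if its fingerprint matches."""
    return digest(part) == expected


def upload_part(part: bytes, send, max_attempts: int = 3) -> int:
    """Client side: send one part, retrying on mismatch. Returns attempts used."""
    expected = digest(part)
    for attempt in range(1, max_attempts + 1):
        if send(part, expected):
            return attempt
    raise RuntimeError("part upload failed after retries")


def flaky_channel():
    """Simulated network that corrupts the first transmission only."""
    calls = {"n": 0}

    def send(part: bytes, expected: str) -> bool:
        calls["n"] += 1
        wire = b"\x00" + part[1:] if calls["n"] == 1 else part
        return receive(wire, expected)

    return send


attempts = upload_part(b"part-1 payload", flaky_channel())
print(attempts)  # 2: the first attempt was corrupted, the retry succeeded
```

Only the affected part is retried; the parts that were already accepted stay in place.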

We then create metadata for each part you send us. This metadata tells us exactly where your data is located, which reduces the number of interactions with the rest of the cluster.

We naturally use the fastest storage medium (NVMe) for this type of data.

We also compute a notion of distance between storage locations, which allows us to define rules for where data is stored.

For example, we can require that two parts are never stored on the same disk, the same machine, the same rack, the same room, or the same datacenter.
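One way to picture such a distance is to describe each storage location by its position in the hierarchy and to count how high up two locations first differ. The sketch below is an assumption made for illustration: the `Location` fields and the 0 to 5 scale are hypothetical and do not describe the actual placement engine.

```python
from itertools import combinations
from typing import NamedTuple


class Location(NamedTuple):
    datacenter: str
    room: str
    rack: str
    machine: str
    disk: str


def distance(a: Location, b: Location) -> int:
    """0 = same disk, 1 = same machine, 2 = same rack, ... 5 = different datacenters."""
    for level, (x, y) in enumerate(zip(a, b)):
        if x != y:
            return len(a) - level
    return 0


def satisfies(placement: list[Location], min_distance: int) -> bool:
    """Check that every pair of parts is at least `min_distance` apart."""
    return all(distance(a, b) >= min_distance for a, b in combinations(placement, 2))


a = Location("dc1", "room1", "rack1", "m1", "d1")
b = Location("dc1", "room1", "rack1", "m1", "d2")   # same machine, other disk
c = Location("dc1", "room1", "rack2", "m7", "d1")   # other rack

print(satisfies([a, b], min_distance=2))  # False: both parts share a machine
print(satisfies([a, c], min_distance=2))  # True: the parts sit on different machines
```

A rule such as "never on the same machine" then becomes a minimum distance of 2 between any two parts of the same object.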

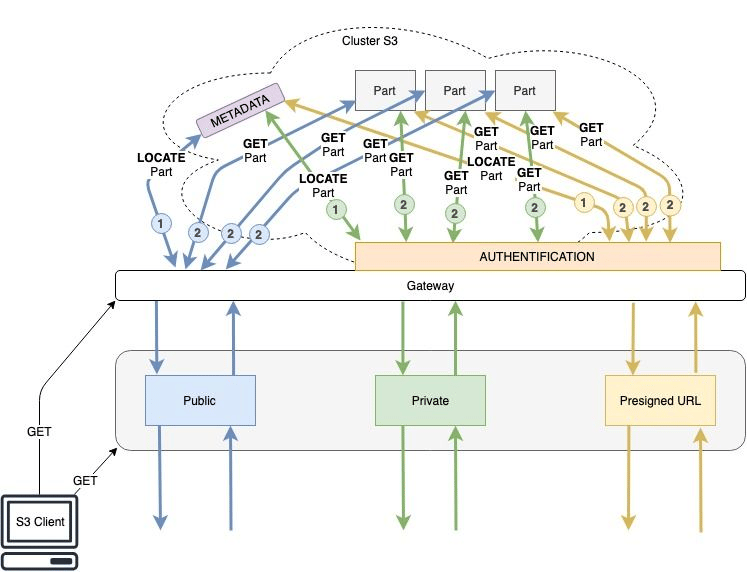

When a GET request reaches our object storage entry point, the Object Storage determines what kind of object the user is trying to get.

There are three possibilities: public, presigned, private.

When you request access to your data, we must first recover all the parts that constitute your object.

To do this, we query the internal metadata associated with your object.

Once all this data is identified, we will query the machines that store this data.

We then need to rebuild your file to start your download.

If part of your file is unavailable, erasure coding rebuilds it on the fly.
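The read path above (metadata lookup, targeted queries to the machines concerned, rebuild if needed) can be sketched with in-memory dictionaries. Everything here is a hypothetical stand-in: the node names, the metadata layout, and the single XOR parity, which replaces the real erasure code to keep the example short.

```python
from functools import reduce


def xor(a: bytes, b: bytes) -> bytes:
    return bytes(x ^ y for x, y in zip(a, b))


def put_object(key: str, data: bytes, nodes: dict, metadata: dict, k: int = 3) -> None:
    """Store k data parts plus one XOR parity, one part per node."""
    size = -(-len(data) // k)  # ceiling division
    padded = data.ljust(size * k, b"\0")
    parts = [padded[i * size:(i + 1) * size] for i in range(k)]
    parts.append(reduce(xor, parts))  # the last part is the parity
    locations = []
    for i, part in enumerate(parts):
        node = f"node-{i}"
        nodes.setdefault(node, {})[(key, i)] = part
        locations.append(node)
    metadata[key] = {"locations": locations, "length": len(data)}


def get_object(key: str, nodes: dict, metadata: dict) -> bytes:
    entry = metadata[key]  # 1. metadata lookup tells us where each part lives
    fetched = [            # 2. query only the nodes that hold a part
        nodes.get(node, {}).get((key, i))
        for i, node in enumerate(entry["locations"])
    ]
    missing = [i for i, part in enumerate(fetched) if part is None]
    if len(missing) > 1:
        raise OSError("too many parts lost for a single parity")
    if missing:            # 3. rebuild the unavailable part on the fly
        fetched[missing[0]] = reduce(xor, [p for p in fetched if p is not None])
    return b"".join(fetched[:-1])[:entry["length"]]


nodes, metadata = {}, {}
put_object("bucket/hello.txt", b"hello world", nodes, metadata)
del nodes["node-1"]  # simulate an unavailable machine
assert get_object("bucket/hello.txt", nodes, metadata) == b"hello world"
```

The metadata lookup is what keeps the request from fanning out to the whole cluster: only the nodes listed for the object are contacted.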

An object using a presigned URL is exposed through a link for a duration you choose.

When a request comes in for this type of object, we first check the signature contained in the URL of the file.

Once the signature is validated, we deliver the object as we would a public object.

A private object requires that you are authenticated and authorized to access it. When a request for a private object is received, the Object Storage checks the signature and authentication information.

Once the signature and authentication check out, we deliver the object as we would a public object.
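The three cases can be summarized as one decision function. The sketch below is a simplified illustration: it uses a bare HMAC over the path and expiry time, not the actual Amazon S3 signature scheme, and `SECRET`, `presign` and `authorize_get` are hypothetical names. The `user` argument stands for an identity that has already been authenticated.

```python
import hashlib
import hmac
import time

SECRET = b"demo-secret"  # hypothetical signing key, for illustration only


def presign(path: str, expires_at: int, secret: bytes = SECRET) -> str:
    """Sign a GET on `path` that stays valid until `expires_at` (Unix time)."""
    message = f"GET\n{path}\n{expires_at}".encode()
    return hmac.new(secret, message, hashlib.sha256).hexdigest()


def authorize_get(obj: dict, path: str, *, signature=None, expires_at=None,
                  user=None, now=None) -> bool:
    """Decide whether a GET request may be served."""
    now = time.time() if now is None else now
    if obj["acl"] == "public":
        return True                                   # public: no check needed
    if signature is not None and expires_at is not None:
        valid = hmac.compare_digest(signature, presign(path, expires_at))
        return valid and now < expires_at             # presigned: signature + expiry
    return user is not None and user in obj["allowed_users"]  # private


obj = {"acl": "private", "allowed_users": {"alice"}}
sig = presign("/bucket/report.pdf", expires_at=1000)

print(authorize_get(obj, "/bucket/report.pdf", signature=sig, expires_at=1000, now=999))   # True
print(authorize_get(obj, "/bucket/report.pdf", signature=sig, expires_at=1000, now=1001))  # False
print(authorize_get(obj, "/bucket/report.pdf", user="bob"))                                # False
```

Once the check passes, delivery is the same in all three cases: the parts are located, fetched, and reassembled as for a public object.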

Deletion of an object is performed immediately. The object storage system deletes all the parts of the object you want to delete. The metadata related to this object is also deleted immediately.

We call scrubbing the process that verifies the integrity of the data to guarantee its durability. This process is performed regularly on the platform. It can also be triggered on demand, for instance during the maintenance of hardware equipment.

Scrubbing can be performed in two ways:

We also use the rebalancing process to ensure that parts created by scrubbing are spread across several nodes.
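At its core, scrubbing compares what is stored against a fingerprint recorded at write time. The sketch below assumes a SHA-256 checksum per part and an in-memory store; both are illustrative choices, and `scrub` is a hypothetical function, not the platform's actual process.

```python
import hashlib


def checksum(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


def scrub(store: dict[str, bytes], expected: dict[str, str]) -> list[str]:
    """Return the ids of parts that are missing or no longer match their checksum."""
    return sorted(
        part_id
        for part_id, recorded in expected.items()
        if part_id not in store or checksum(store[part_id]) != recorded
    )


store = {"p0": b"alpha", "p1": b"bravo", "p2": b"charlie"}
expected = {part_id: checksum(data) for part_id, data in store.items()}

store["p1"] = b"brav0"   # simulate silent corruption
del store["p2"]          # simulate a lost part

print(scrub(store, expected))  # ['p1', 'p2']
```

The parts reported by such a pass are the ones that would then be rebuilt from the remaining parts and parities.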
