AI in practice: Generating video subtitles

In this practical example, we roll up our sleeves and put Scaleway's H100 Instances to use by leveraging a couple of open source ML models to optimize our internal communication workflows.

This is a guest post by Zofia Smoleń, Founder of Polish startup MindMatch, a member of Scaleway's Startup Program 🚀

One of the greatest developments of recent years was making computers speak our language. Scientists have been working on language models (which are basically models predicting the next sequence of letters) for some time already, but only recently did they come up with models that actually work: Large Language Models (LLMs). The biggest issue with them is that they are… Large.

LLMs have billions of parameters. In order to run them, you have to own quite a lot of computing power and use a significant amount of energy. For instance, OpenAI reportedly spends around $700,000 daily on ChatGPT, and their model is highly optimized. For the rest of us, this kind of spending is good neither for our wallets nor for the climate.

So in order to limit your spending and carbon footprint, you cannot just use whatever OpenAI or even Hugging Face provides. You have to dedicate some time and thought to come up with more frugal methods of getting the job done. That is exactly what [Scaleway Startup Program member] MindMatch has been doing lately.

MindMatch provides a place where Polish patients can seek mental health support from specialists. Using an open-source LLM from Hugging Face, MindMatch recognizes its patients’ precise needs based on a description of their feelings. With that knowledge, MindMatch can find the right therapy for each patient. It is a Polish-only website, but you can type in English (or any other language) and the chatbot will understand you and give you its recommendation. In this article, we wrap up their thoughts on dealing with speed and memory problems in production.

What do you need to do exactly? Do you need to reply to messages in a human-like manner? Or do you just need to classify your text? Is it only topic extraction?

Read your bibliography. Check how people approached your task. Obviously, start from the latest papers, because in AI (and especially Natural Language Processing), all the work becomes obsolete and outdated very quickly. But… taking a quick look at what people did before Transformers (the state-of-the-art model architecture behind ChatGPT) can do no harm. Moreover, you may find solutions that resolve your task almost as well as any modern model would (if your task is comparatively easy) and are simpler, faster and lighter.

You could start by simply looking at articles on Towards Data Science, but we also encourage you to browse through Google Scholar. A lot of work in data science is documented only in research papers, so it actually makes sense to read them (as opposed to papers in social science).

Why does this matter? You don’t need a costly ChatGPT-like solution just to tell you whether your patient is talking about depression or anxiety. Defining your needs and scouring the internet in search of all solutions applied so far might give you a better view on your options, and help select those that make sense in terms of performance and model size.

This is probably the most obvious step for all developers, but make sure that you store all the models, classes and functions (and obviously constants - for example labels that you want to classify) in a way that allows you to quickly iterate, without needing to dig deep into code. This will make it easier for you, but also for all non-technical people that will want to understand and work on the model.

What worked well for MindMatch was even storing all the dictionaries in an external database that was modifiable via Content Management Systems. One of those dictionaries was a list of classes used by the model. This way non-technical people were able to test the model. Obviously, to reduce the database costs, MindMatch had to make sure that they only pull those classes when necessary.
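To illustrate the "only pull those classes when necessary" idea, here is a minimal sketch of a small in-memory cache with a time-to-live. It is not MindMatch's actual code: `CachedLabels`, `fetch_labels_from_cms` and the five-minute TTL are hypothetical names and values, and the fetch function is a stand-in for whatever database or CMS query you really use.

```python
import time
from typing import Callable, List, Optional


class CachedLabels:
    """Keeps the class list in memory and re-fetches it only after the TTL expires."""

    def __init__(
        self,
        fetch: Callable[[], List[str]],
        ttl_seconds: float = 300.0,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._fetch = fetch
        self._ttl = ttl_seconds
        self._clock = clock
        self._labels: List[str] = []
        self._fetched_at: Optional[float] = None

    def get(self) -> List[str]:
        now = self._clock()
        expired = self._fetched_at is None or now - self._fetched_at >= self._ttl
        if expired:
            # Only here do we pay for a database round trip.
            self._labels = list(self._fetch())
            self._fetched_at = now
        return self._labels


def fetch_labels_from_cms() -> List[str]:
    # Hypothetical stand-in for a real database or CMS query.
    return ["depression", "anxiety", "hyperactivity"]


labels = CachedLabels(fetch_labels_from_cms, ttl_seconds=300.0)
print(labels.get())  # first call hits the "database"
print(labels.get())  # second call is served from memory
```

The TTL is the trade-off knob: a longer value means fewer database reads, but non-technical editors wait longer before their changes reach the model.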

Also, the right documentation will make it easier for you to use MLOps tools such as MLflow. Even if it is still just a prototype, it is better to prepare for the bright future of your product and further iterations.

There is a lot of information and guidance about how to set the directory so that it is neat and tidy. Browse Medium and other portals until you find enough inspiration for your purpose.

Now that you’ve defined your needs, it’s time to choose the right solution. Since you want to use LLMs, you will most likely not even think about training your own model from scratch (unless you are a multi-billion-dollar company or a unicorn startup with high aspirations). So your options are limited to pre-trained models.

For the pre-trained models, there are basically two options. You can either call them through an API and get results generated on an external computer instance (what OpenAI offers), or you can install the model on your computer and run it there as well (that is what Hugging Face offers, for example).

The first option is usually more expensive, but that makes sense - you are using the computer power of another company, and it should come with a price. This way, you don’t have to worry about scalability. Usually, proprietary models like OpenAI’s work like that, so on top of that you also pay a fee for just using the model. But some companies producing open source models, like Mistral, also provide APIs.

The second option (installing the model on your computer) comes only with open source models. So you don’t pay for the model itself, but you have to run it on your computer. This option is often chosen by companies who don’t want to be dependent on proprietary models and prefer to have more control over their solution. It comes with a cost: that of storage and computing power. It is pretty rare for organizations to own physical machines with enough memory to run LLMs, so most companies (like MindMatch) choose to use cloud services for that purpose.

The choice between proprietary and open-source models depends on various factors, including the specific needs of the project, budget constraints, desired level of control and customization, and the importance of transparency and community support. In many cases it also depends on the level of domain knowledge within the organization. Proprietary models are usually easier to deploy.

The simpler the better. You should look for models that exactly match your needs. Assuming that you defined your needs already and did your research on Google Scholar, you should already know what solutions you are looking for. What now, then? Chances are, there are already at least a dozen models that can solve your problem.

We strongly advise you to have a look at Hugging Face’s “Models” section. Choose the model type; and then, starting from the most popular (it usually makes the most sense), try those models on your data. Pay particular attention to the accuracy and size of the model. The smaller the model is, the cheaper it is. As for accuracy, remember that your data is different from what the model was trained on. So if you want to use your solution for medical applications, you might want to try models that were trained on medical data.

Also, remember that the pre-trained models are just language models. They don’t have any specialist knowledge. In fact, they rarely see any domain-specific words in training data. So don’t expect the model to talk easily about Euphyllophyte plants without any additional fine-tuning, Retrieval Augmented Generation (RAG) or at least prompt engineering. Each of those augmentations comes with a higher computing cost.

So you need to be smart about what exactly you make your model do. For example, when MindMatch tried to use zero-shot classification to recognize ADHD (a phrase rarely seen in training datasets), they decided to make it recognize Hyperactivity instead. Because Hyperactivity is a more frequent keyword that can easily act as a proxy for ADHD, this swap allowed MindMatch to improve accuracy without deteriorating speed.
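The proxy-label trick can be sketched as a thin wrapper around any zero-shot classifier. This is an illustration under assumptions, not MindMatch's implementation: `classify_with_proxies` and `keyword_stub` are hypothetical names, and the stub is a toy keyword matcher standing in for a real model. In practice, the `zero_shot` callable could wrap a Hugging Face `pipeline("zero-shot-classification")`, which returns parallel `labels` and `scores` lists that you would zip into a dictionary.

```python
from typing import Callable, Dict, List

# Proxy label shown to the model -> category the application actually cares about.
# The single pair below comes from the article; extend it with your own.
PROXY_TO_TARGET: Dict[str, str] = {"hyperactivity": "ADHD"}

ZeroShotFn = Callable[[str, List[str]], Dict[str, float]]


def classify_with_proxies(
    text: str,
    candidate_labels: List[str],
    zero_shot: ZeroShotFn,
    proxy_to_target: Dict[str, str] = PROXY_TO_TARGET,
) -> Dict[str, float]:
    """Classifies against proxy labels, then reports scores under the target names."""
    target_to_proxy = {target: proxy for proxy, target in proxy_to_target.items()}
    # Swap rare labels for their more frequent proxies before calling the model.
    model_labels = [target_to_proxy.get(label, label) for label in candidate_labels]
    scores = zero_shot(text, model_labels)
    # Map the proxies back so the rest of the application never sees them.
    return {proxy_to_target.get(label, label): score for label, score in scores.items()}


def keyword_stub(text: str, labels: List[str]) -> Dict[str, float]:
    # Toy stand-in for a real model: scores 1.0 if the label appears in the text.
    lowered = text.lower()
    return {label: (1.0 if label.lower() in lowered else 0.0) for label in labels}


result = classify_with_proxies(
    "I struggle with hyperactivity at work", ["ADHD", "anxiety"], keyword_stub
)
print(result)
```

Keeping the mapping in a plain dictionary means it can live alongside the other dictionaries that non-technical colleagues edit, and the model code never needs to change when a proxy is swapped.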

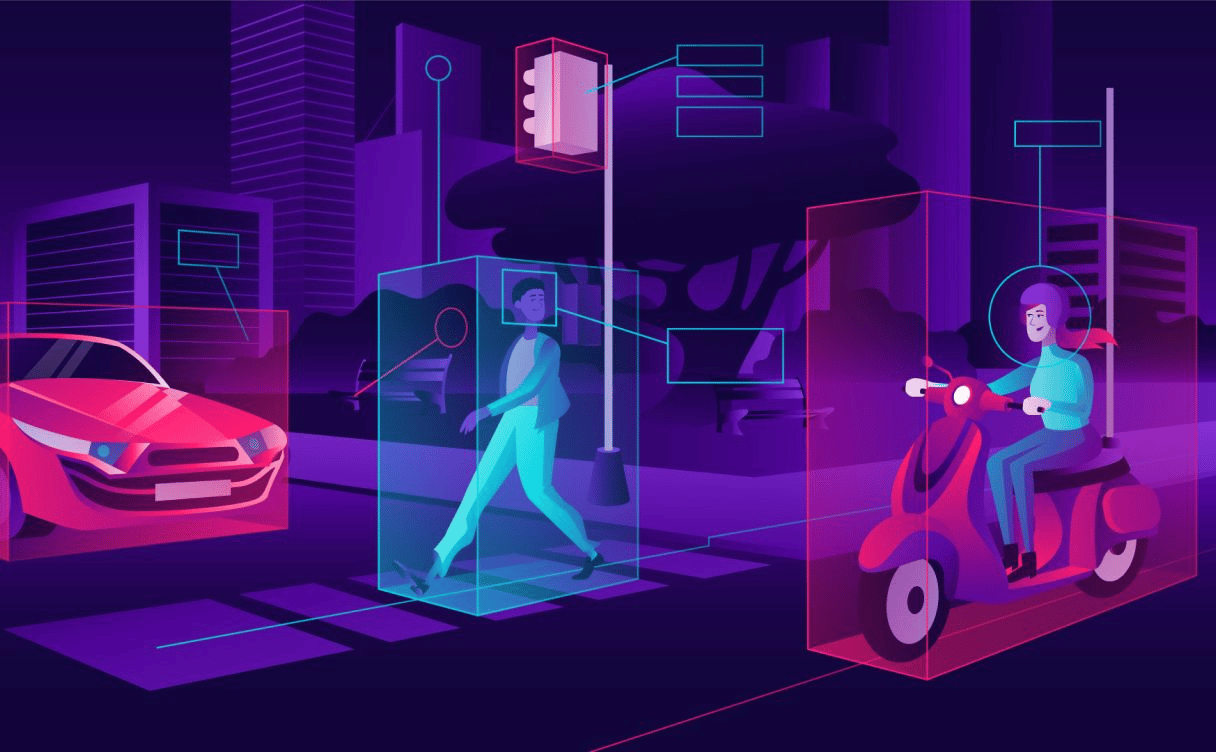

GPU or CPU? Many would assume that the answer lies simply between the speed and the price, as GPUs are generally more expensive and faster. That is usually true, but not always. Here are a few things to consider.

Large and complex models, like GPT-4, benefit significantly from the processing power of GPUs, especially for tasks like training or running multiple instances simultaneously. GPUs have many more computing cores than CPUs, making them adept at parallel processing. This is particularly useful for the matrix and vector computations common in deep learning.

But before GPU processing can start, data must be transferred from RAM to GPU memory (VRAM), which can be costly. If the data is large and amenable to parallel processing, this overhead is offset by faster processing on the GPU.

GPUs may not perform as well with tasks that require sequential processing, such as those involving Recurrent Neural Networks (RNNs) or Long Short-Term Memory (LSTM) networks (this applies to some implementations of Natural Language Processing). The sequential computation in LSTM layers, for instance, doesn't align well with the GPU's parallel processing capabilities, leading to underutilization (10% - 20% GPU load).

Despite their limitations in sequential computation, GPUs can be highly effective during the backpropagation phase of LSTM, where derivative computations can be parallelized, leading to higher GPU utilization (around 80%).

For training large models, GPUs are almost essential due to their speed and efficiency (not in all cases, as mentioned above). However, for inference (especially with smaller models or less frequent requests), CPUs can be sufficient and more cost-effective. If you are using a pre-trained model (you most probably are), you only care about inference, so don’t assume that a GPU will be better: compare it with a CPU on your own workload.
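One way to make that comparison concrete is to time the same inference function on each instance type and convert the latency into a cost. The sketch below is a generic harness, not a benchmark of any specific hardware: `measure_latency` and `cost_per_1000_requests` are hypothetical helpers, the `predict` function is a dummy, and hourly prices are whatever your provider charges. When timing a GPU framework, make sure `predict` blocks until the result is actually ready, otherwise you only measure the time to queue the work.

```python
import statistics
import time
from typing import Callable, Dict, Sequence


def measure_latency(
    predict: Callable[[str], object],
    inputs: Sequence[str],
    warmup: int = 2,
    repeats: int = 5,
) -> Dict[str, float]:
    """Returns the median and worst per-request latency in seconds."""
    # Warm-up passes absorb one-off costs such as model loading and caching.
    for _ in range(warmup):
        for item in inputs:
            predict(item)
    timings = []
    for _ in range(repeats):
        start = time.perf_counter()
        for item in inputs:
            predict(item)
        timings.append((time.perf_counter() - start) / len(inputs))
    return {"median_s": statistics.median(timings), "worst_s": max(timings)}


def cost_per_1000_requests(latency_s: float, hourly_price: float) -> float:
    """Cost of 1000 sequential requests on an instance billed per hour."""
    return latency_s * 1000 / 3600 * hourly_price


def dummy_predict(text: str) -> int:
    # Placeholder for a real model call.
    return len(text.split())


stats = measure_latency(dummy_predict, ["I feel anxious", "I cannot focus at work"])
print(stats)
```

Run the same script on a CPU instance and a GPU instance with your real model and real inputs, then compare `cost_per_1000_requests` for each: the faster machine is not always the cheaper one per request.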

If you need to scale up your operations (e.g., serving a large number of requests simultaneously), GPUs offer better scalability options compared to CPUs.

GPUs are more expensive and consume more power. If budget and resources are limited, starting with CPUs and then scaling up to GPUs as needed can be a practical approach.

Are all of the above obvious to you? Here are other techniques (that often require you to dig a little deeper) that allow for optimized runtime and memory.

Quantization is a technique used to optimize Large Language Models (LLMs) by reducing the precision of the model’s weights and activations. Typically, LLMs use 32 or 16 bits for each parameter, consuming significant memory. Quantization aims to represent these values with fewer bits, often as low as eight bits, without greatly sacrificing performance.
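The memory saving is simple arithmetic: parameters multiplied by bits per parameter. The sketch below uses a hypothetical 7-billion-parameter model as an example and counts weights only, so it leaves out activations and any other runtime memory.

```python
def weight_memory_gb(num_parameters: int, bits_per_parameter: int) -> float:
    """Memory needed to store the weights alone, in gigabytes (10^9 bytes)."""
    return num_parameters * bits_per_parameter / 8 / 1e9


# Hypothetical 7-billion-parameter model at three precisions.
for bits in (32, 16, 8):
    print(f"{bits}-bit: {weight_memory_gb(7_000_000_000, bits):.1f} GB")
```

Going from 32 to 8 bits divides the weight memory by four, which can be the difference between needing a large GPU and fitting on a much cheaper instance.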

The process involves two key steps: rounding and clipping. Rounding adjusts the values to fit into the lower bit format, while clipping manages the range of values to prevent extremes. This reduction in precision and range enables the model to operate in a more compact format, saving memory space.
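The two steps can be seen in a few lines of plain Python. This is a minimal sketch of symmetric 8-bit quantization, one of several possible schemes and not necessarily the one used by any particular library; `quantize_int8`, `dequantize` and the `clip_value` parameter are names chosen for this example.

```python
from typing import List, Optional, Tuple


def quantize_int8(
    values: List[float], clip_value: Optional[float] = None
) -> Tuple[List[int], float]:
    """Symmetric 8-bit quantization: returns integer codes and the scale factor."""
    if clip_value is None:
        # Without an explicit range, use the largest magnitude present.
        clip_value = max((abs(v) for v in values), default=0.0)
    scale = clip_value / 127 if clip_value > 0 else 1.0
    codes = []
    for v in values:
        q = round(v / scale)        # rounding: snap to the nearest integer step
        q = max(-127, min(127, q))  # clipping: keep extremes inside the 8-bit range
        codes.append(q)
    return codes, scale


def dequantize(codes: List[int], scale: float) -> List[float]:
    """Maps integer codes back to approximate floating-point values."""
    return [c * scale for c in codes]


weights = [0.1, -0.25, 0.7, 1.0]
codes, scale = quantize_int8(weights)
print(codes)
print(dequantize(codes, scale))

# With a tighter range, outliers are clipped instead of stretching the scale.
clipped_codes, _ = quantize_int8([2.0, -3.0, 0.5], clip_value=1.0)
print(clipped_codes)
```

Choosing `clip_value` is the interesting design decision: a wide range keeps outliers intact but spends the 255 available steps coarsely, while a tight range gives finer steps for typical values at the price of distorting the extremes.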

By quantizing a model, several benefits arise:

However, quantizing LLMs comes with challenges:

Pruning involves identifying and removing parameters in a model that are either negligible or redundant. One common method of pruning is sparsity, where values close to zero are set to zero, leading to a more condensed matrix representation that only includes non-zero values and their indices. This approach reduces the overall space occupied by the matrix compared to a fully populated, dense matrix.
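As a toy illustration of that idea, the sketch below zeroes out small values and then stores only the non-zero entries with their indices. It is plain magnitude pruning on a nested list, with a threshold picked arbitrarily for the example; real toolkits operate on tensors and use more careful criteria.

```python
from typing import List, Tuple

Matrix = List[List[float]]


def prune_by_magnitude(matrix: Matrix, threshold: float) -> Matrix:
    """Sets every entry whose absolute value is below the threshold to zero."""
    return [[0.0 if abs(v) < threshold else v for v in row] for row in matrix]


def to_sparse(matrix: Matrix) -> List[Tuple[int, int, float]]:
    """Keeps only non-zero values, each with its (row, column) index."""
    return [
        (r, c, v)
        for r, row in enumerate(matrix)
        for c, v in enumerate(row)
        if v != 0.0
    ]


def sparsity(matrix: Matrix) -> float:
    """Fraction of entries that are exactly zero."""
    total = sum(len(row) for row in matrix)
    zeros = sum(1 for row in matrix for v in row if v == 0.0)
    return zeros / total if total else 0.0


weights = [[0.8, -0.01, 0.0], [0.02, -0.5, 0.03]]
pruned = prune_by_magnitude(weights, threshold=0.05)
print(to_sparse(pruned))
print(f"sparsity: {sparsity(pruned):.0%}")
```

Note that the sparse form only saves space once enough entries are zero, since every surviving value now carries two indices with it.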

Pruning can be categorized into two types:

SparseGPT, specifically, adopts a one-shot pruning strategy that bypasses the need for retraining. It approaches pruning as a sparse regression task, using an approximate solver that seeks a sufficiently good solution rather than an exact one. This approach significantly enhances the efficiency of SparseGPT.

In practice, SparseGPT has been successful in achieving high levels of unstructured sparsity in large GPT models, such as OPT-175B and BLOOM-176B. It can attain over 60% sparsity - a higher rate than what is typically achieved with structured pruning - with only a minimal increase in perplexity, a measure of how well the model predicts text (lower is better).
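For readers who have not met perplexity before, it is the exponential of the average negative log-probability the model assigns to the tokens that actually occurred. The helper below is a minimal sketch that takes those probabilities as a plain list; in a real evaluation they would come from the model's output on a held-out text.

```python
import math
from typing import Sequence


def perplexity(token_probabilities: Sequence[float]) -> float:
    """exp of the mean negative log-probability of the observed tokens."""
    if not token_probabilities:
        raise ValueError("need at least one token probability")
    mean_nll = -sum(math.log(p) for p in token_probabilities) / len(token_probabilities)
    return math.exp(mean_nll)


# A model that always gives the right token a 25% chance is as uncertain
# as a uniform choice among four options.
print(perplexity([0.25, 0.25, 0.25, 0.25]))
```

Comparing this number before and after pruning, on the same evaluation text, is how you check that the compressed model has not lost too much.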

Distillation is a method of transferring knowledge from a larger model (teacher) to a smaller one (student). This is done by training the student model to mimic the teacher’s behavior, focusing on matching either the final layer outputs (logits) or intermediate layer activations. An example of this is DistilBERT, which retains most of BERT's capabilities but at a reduced size and increased speed. Distillation is especially useful when training data is scarce.
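Matching the teacher's logits usually means minimizing a divergence between the two models' softened output distributions. The sketch below shows one common formulation, a temperature-scaled KL divergence, for a single example in plain Python. The temperature of 2.0 and the function names are choices made for this illustration, and real training would combine this term with the usual loss on the true labels.

```python
import math
from typing import List, Sequence


def log_softmax(logits: Sequence[float], temperature: float) -> List[float]:
    """Numerically stable log-softmax of logits divided by the temperature."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)
    log_total = m + math.log(sum(math.exp(x - m) for x in scaled))
    return [x - log_total for x in scaled]


def distillation_loss(
    teacher_logits: Sequence[float],
    student_logits: Sequence[float],
    temperature: float = 2.0,
) -> float:
    """KL(teacher || student) on softened distributions, scaled by temperature squared."""
    log_p = log_softmax(teacher_logits, temperature)
    log_q = log_softmax(student_logits, temperature)
    kl = sum(math.exp(lp) * (lp - lq) for lp, lq in zip(log_p, log_q))
    # The T^2 factor keeps gradient magnitudes comparable across temperatures.
    return kl * temperature ** 2


teacher = [4.0, 1.0, 0.5]
print(distillation_loss(teacher, [4.0, 1.0, 0.5]))  # student agrees with teacher
print(distillation_loss(teacher, [0.5, 1.0, 4.0]))  # student disagrees
```

A higher temperature flattens both distributions, so the student also learns from the teacher's relative preferences among the wrong answers rather than only from its top choice.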

However, be careful if you want to distill a model! Many state-of-the-art LLMs have restrictive licenses that prohibit using their outputs to train other LLMs. It is usually OK, though, to use open-source models to train other LLMs.

Model serving techniques aim to maximize the use of memory bandwidth during model execution. Key strategies include:

There are many ways to optimize model performance, leading not only to lower costs but also to less waste and a lower carbon footprint. Start from a high-level definition of your needs, test different solutions and then dig into details, reducing the cost even further. MindMatch is still testing different ways of reaching satisfying accuracy with lower computational costs - it is a never-ending process.
