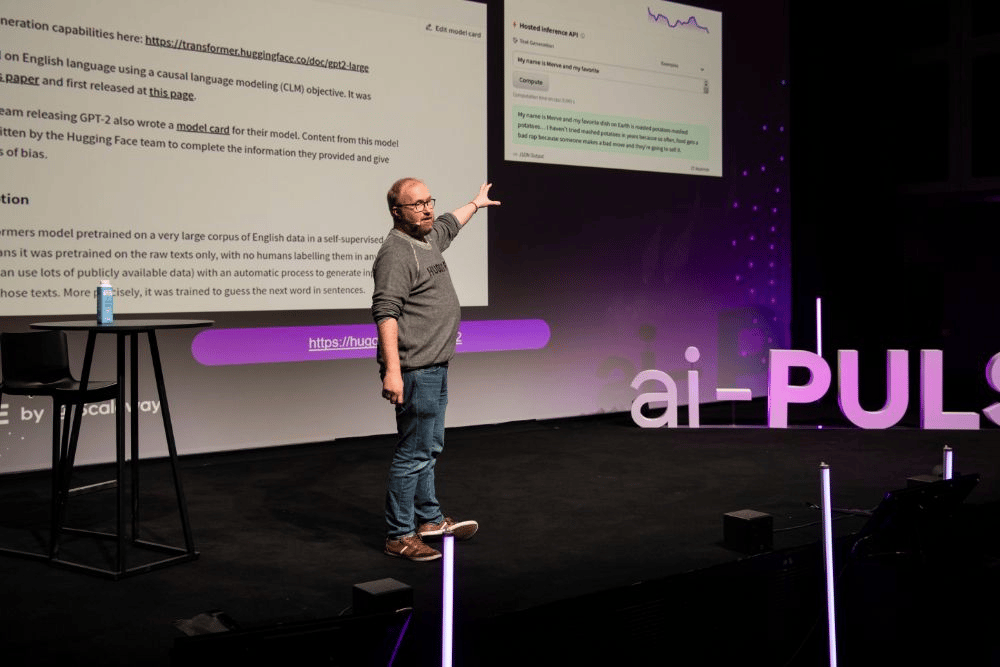

"We're currently working on Scaleway SuperPod, which is performing exceptionally well.", Arthur Mensch on the Master Stage at ai-PULSE 2023. Mistral AI, used Nabu to build its Mixtral model , a highly efficient mixture-of-experts model. At its release, Mixtral outperformed existing closed and open weight models across most benchmarks, offering superior performance with fewer active parameters, making it a major innovation in the field of AI. The collaboration with Scaleway enabled Mistral to scale its training efficiently, allowing Mixtral to achieve groundbreaking results in record time.