Increased availability

Load Balancer is the easiest way to build a resilient platform thanks to a highly available architecture. All backend servers are monitored to ensure that traffic is distributed among healthy resources.

Improve the performance of your services as you grow.

Avoid dips in performance by adding as many backend servers as necessary. Increase your processing capacity in a few clicks from the Scaleway console or configure automatic scaling using the API.
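As a rough illustration of how an automatic scaling rule might decide how many backend servers to run, here is a minimal Python sketch. It assumes a simple target-utilization policy. The function name, the 75% target, and the min/max bounds are all hypothetical, and the step that actually adds or removes servers through the Scaleway API is deliberately left out.

```python
import math


def desired_backends(current_load: float,
                     capacity_per_backend: float,
                     target_utilization: float = 0.75,
                     min_backends: int = 2,
                     max_backends: int = 20) -> int:
    """Hypothetical rule: how many backends keep average utilization at or below the target.

    current_load and capacity_per_backend must use the same unit
    (for example requests per second).
    """
    if capacity_per_backend <= 0 or not 0 < target_utilization <= 1:
        raise ValueError("capacity must be positive and utilization in (0, 1]")
    # Each backend is only "allowed" to carry capacity * target of the load.
    needed = math.ceil(current_load / (capacity_per_backend * target_utilization))
    # Clamp to the configured pool size limits.
    return max(min_backends, min(max_backends, needed))
```

For example, with 900 requests per second and backends that each handle 100, the rule asks for 12 backends (900 / 75). A scaling job would compare this number with the current pool size and add or remove servers accordingly.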

As your business grows, you need more resources to succeed. With Load Balancer, you can scale your infrastructure by adding new backend servers, with no downtime, to maintain your quality of service.

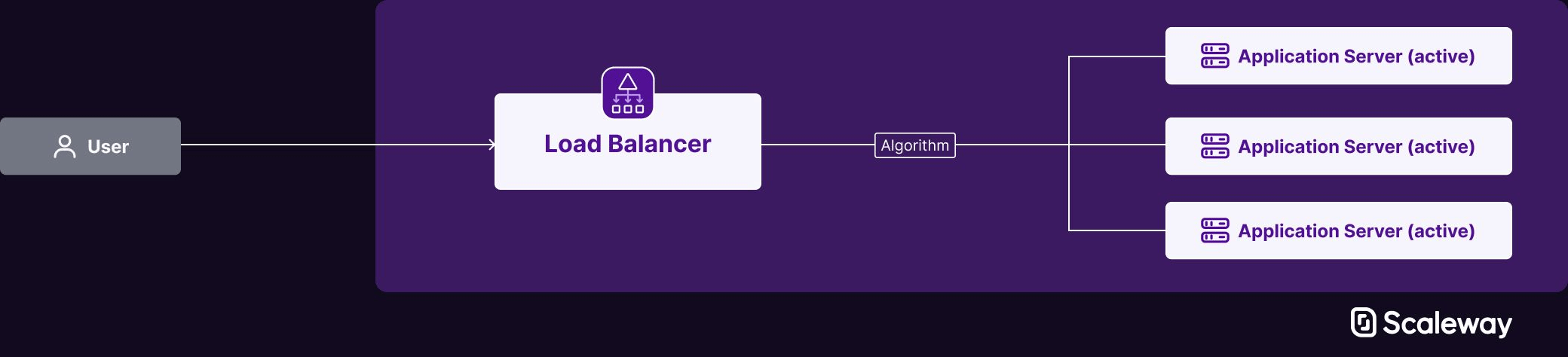

During traffic peaks on your website, use Load Balancer to distribute workloads across multiple servers, ensuring continued availability and preventing any single server from being overloaded.

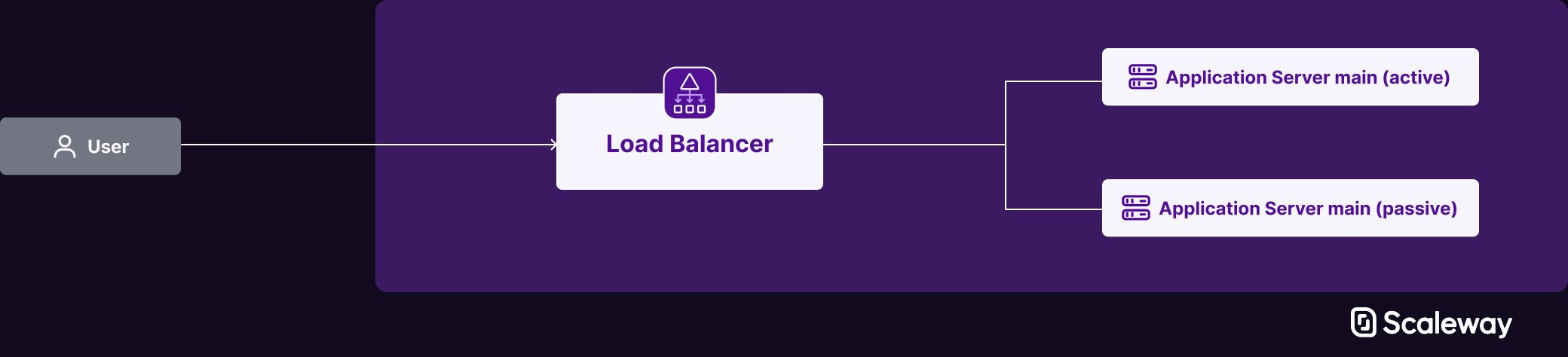

If the main server fails or slows down, Load Balancer can automatically redirect traffic to a healthy secondary server, so users experience no service disruption.

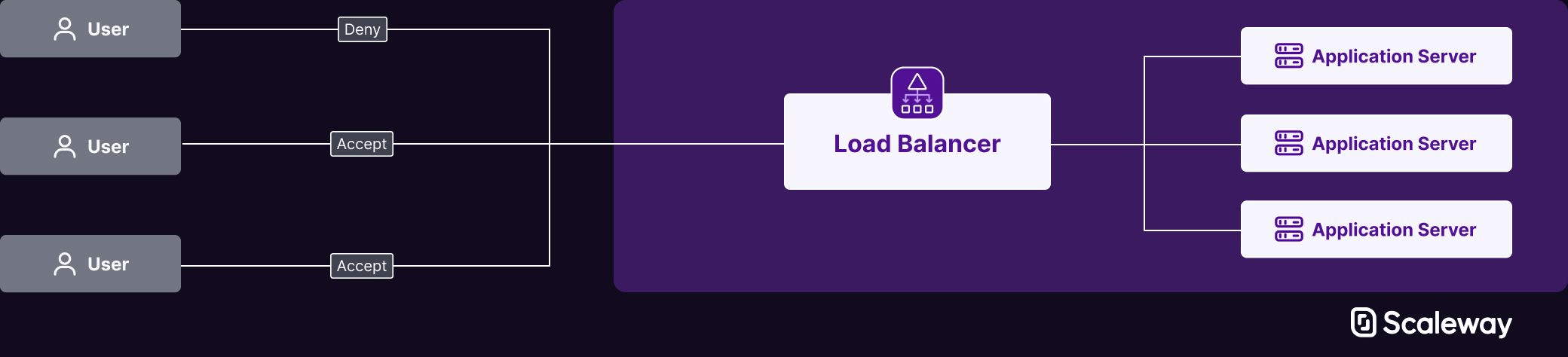

Load balancers improve the security and network performance of your websites and applications. Access control lists (ACLs) let you authorize only legitimate connections, for example to Kubernetes pods. This lets you offer your customers a higher level of service and a better experience.

| Feature | Details |
|---|---|
| Bandwidth | Up to 4 Gbps |
| Multi-cloud compatibility | On LB-GP-L and LB-GP-XL offers |
| Health checks | HTTP(S), MySQL, PgSQL, LDAP, Redis, TCP |
| Balancing algorithms | Round-robin, least connection, first available |
| Backend servers | Unlimited, public or within a Private Network |
| Redundancy | High availability |
| Traffic encryption | SSL/TLS bridging, passthrough, and offloading |
| HTTPS | Let’s Encrypt and custom SSL certificates |

Whether you are distributing your workload between web servers, databases, or other TCP services, you can easily set up health checks to ensure the availability of your backend servers, and monitor their availability in real time. If a server fails to respond, its traffic is automatically redirected to the remaining healthy servers until the problem is resolved.
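The health-check behavior described above can be sketched in a few lines of Python. This is a minimal sketch, not Scaleway's implementation. It assumes a plain TCP connect probe and a hypothetical threshold of three consecutive failures before a backend is taken out of rotation. The `Backend` class, `tcp_probe`, `run_health_checks`, and `max_failures` are illustrative names.

```python
import socket
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Backend:
    host: str
    port: int
    healthy: bool = True
    failures: int = 0  # consecutive failed probes


def tcp_probe(backend: Backend, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to the backend can be opened."""
    try:
        with socket.create_connection((backend.host, backend.port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable, or timed out
        return False


def run_health_checks(backends: List[Backend],
                      probe: Callable[[Backend], bool] = tcp_probe,
                      max_failures: int = 3) -> List[Backend]:
    """Probe every backend once and return those that should receive traffic.

    A backend is marked unhealthy after `max_failures` consecutive failed
    probes and becomes healthy again as soon as one probe succeeds.
    """
    for b in backends:
        if probe(b):
            b.failures = 0
            b.healthy = True
        else:
            b.failures += 1
            if b.failures >= max_failures:
                b.healthy = False
    return [b for b in backends if b.healthy]
```

Running `run_health_checks` on a timer and routing only to the returned list gives the "redirect until the problem is solved" behavior. Requiring several consecutive failures avoids ejecting a server because of a single dropped probe.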

Use our Load Balancer to route traffic according to your use case, for example with round-robin, sticky sessions, least connection, or first available rules.
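To make these rules concrete, the following Python sketch shows how each one picks a backend. It is illustrative only. Stickiness is shown here as source-IP hashing, which is one common technique; the product may implement sticky sessions differently (for example with cookies). All function and class names are hypothetical.

```python
import hashlib
import itertools
from typing import Dict, List, Optional, Set


class RoundRobin:
    """Hand out servers in a fixed, rotating order."""

    def __init__(self, servers: List[str]) -> None:
        self._cycle = itertools.cycle(servers)

    def pick(self) -> str:
        return next(self._cycle)


def least_connection(active: Dict[str, int]) -> str:
    """Pick the server with the fewest active connections (first one wins on ties)."""
    return min(active, key=active.__getitem__)


def first_available(servers: List[str], healthy: Set[str]) -> Optional[str]:
    """Send everything to the first healthy server in priority order."""
    for server in servers:
        if server in healthy:
            return server
    return None  # no healthy server left


def sticky_by_source_ip(servers: List[str], client_ip: str) -> str:
    """Map a client IP to the same server every time, as long as the pool is unchanged."""
    digest = hashlib.sha256(client_ip.encode()).digest()
    return servers[int.from_bytes(digest[:8], "big") % len(servers)]
```

Round-robin suits backends of similar capacity, least connection adapts better to long-lived or uneven requests, and first available gives an active/standby setup where a secondary server only receives traffic when the primary is down.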

Add as many backend servers as you need to your Load Balancer and scale your infrastructure on the fly, without limits. You can distribute your traffic across multiple platforms with the multi-cloud offers, or keep it inside a VPC on a Private Network.

Filter the IP addresses allowed to send requests to your servers. Blocking unwanted visitors keeps them from consuming your network bandwidth and improves security.
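An IP allow-list check of this kind can be sketched with Python's standard `ipaddress` module. This is a minimal sketch of the filtering logic only, not how the Load Balancer evaluates its ACLs internally. The function name `is_allowed` and the example networks (documentation ranges) are assumptions.

```python
import ipaddress
from typing import Iterable


def is_allowed(client_ip: str, allowed_networks: Iterable[str]) -> bool:
    """Return True if client_ip falls inside any allowed network (IPv4 or IPv6)."""
    addr = ipaddress.ip_address(client_ip)
    # A membership test across IP versions simply returns False,
    # so IPv4 and IPv6 networks can be mixed in one list.
    return any(addr in ipaddress.ip_network(net, strict=False)
               for net in allowed_networks)


ALLOWED = ["203.0.113.0/24", "2001:db8::/32"]  # hypothetical allow-list
```

A request from `203.0.113.7` would pass, while one from `198.51.100.1` would be rejected before reaching any backend server.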

Some offers let you distribute your traffic across different cloud platforms, on-premises servers, or Instances. This helps you build a more robust infrastructure and avoid depending on a single platform.

Use our Load Balancer to expose your containers and pods to the internet behind a common DNS name and IP address, and to balance workloads among them.

With bandwidth of up to 4 Gbps, Load Balancer covers a wide range of use cases. And since egress traffic is not charged, you are billed a fixed price with no surprises.

You can configure your Load Balancer’s backend and choose the protocol (HTTP, HTTPS, HTTP/2, HTTP/3, or TCP) used to send and receive data.

Improve network speed with SSL/TLS passthrough: encrypted data passes through your Load Balancer without being decrypted, which speeds up request processing and communication between your servers and end users.

You can also use Load Balancer as a bridge: it decrypts incoming traffic at the frontend and re-encrypts it before forwarding it to your backend servers, so traffic stays encrypted both between clients and the Load Balancer and between the Load Balancer and your servers.
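The difference between the two legs of SSL/TLS bridging can be sketched with Python's standard `ssl` module. This is a simplified illustration under stated assumptions, not the managed service's implementation. The helper names are hypothetical, the frontend needs a certificate and key loaded before it can accept clients, and `relay_once` handles a single request and response with no streaming or keep-alive.

```python
import socket
import ssl
from typing import Optional


def make_frontend_context(certfile: Optional[str] = None,
                          keyfile: Optional[str] = None) -> ssl.SSLContext:
    """Server-side context that decrypts traffic arriving from clients."""
    ctx = ssl.create_default_context(ssl.Purpose.CLIENT_AUTH)
    if certfile:
        ctx.load_cert_chain(certfile, keyfile)  # certificate presented to clients
    return ctx


def make_backend_context(cafile: Optional[str] = None) -> ssl.SSLContext:
    """Client-side context that re-encrypts traffic and verifies the backend certificate."""
    return ssl.create_default_context(ssl.Purpose.SERVER_AUTH, cafile=cafile)


def relay_once(raw_client: socket.socket, backend_host: str, backend_port: int,
               frontend_ctx: ssl.SSLContext, backend_ctx: ssl.SSLContext,
               bufsize: int = 65536) -> None:
    """Simplified bridge: one request and one response, no streaming or keep-alive."""
    with frontend_ctx.wrap_socket(raw_client, server_side=True) as client:
        request = client.recv(bufsize)  # plaintext here, so HTTP-level rules could inspect it
        with socket.create_connection((backend_host, backend_port)) as raw_backend:
            with backend_ctx.wrap_socket(raw_backend, server_hostname=backend_host) as backend:
                backend.sendall(request)  # re-encrypted on the backend leg
                response = backend.recv(bufsize)
        client.sendall(response)
```

With passthrough, by contrast, the balancer would forward the raw encrypted bytes without calling `wrap_socket` at all. That saves work but also means it cannot inspect or modify HTTP content.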

Load Balancers are highly available, fully managed Instances that distribute workloads among your servers. They help your applications scale while keeping them continuously available. They are commonly used to improve the performance and reliability of websites, applications, databases, and other services.

A Load Balancer monitors the availability of your backend servers, detects when a server fails, and rebalances the load among the remaining servers, keeping your applications highly available for users.

Multi-cloud is an environment in which multiple cloud providers are used simultaneously. With our multi-cloud offers, you can add backend servers hosted outside Scaleway, in addition to Instances, Elastic Metal, and Dedibox servers.

These can be services from other cloud platforms such as Amazon Web Services, DigitalOcean, Google Cloud, Microsoft Azure, or OVHcloud, as well as on-premises servers, including those hosted in a third-party data center.

All TCP-based protocols are supported, including database protocols, HTTP, LDAP, and IMAP. You can also select HTTP specifically to benefit from support and features exclusive to that protocol.

Yes, you can restrict access to a TCP port or HTTP URL using ACLs.
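To illustrate how port- and URL-based ACL rules can be evaluated, here is a minimal Python sketch. It assumes ordered rules where the first match wins and unmatched requests are allowed by default. These semantics, the `AclRule` fields, and the `evaluate` function are hypothetical; the product documentation defines the actual ACL behavior.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class AclRule:
    action: str                         # "allow" or "deny"
    path_prefix: Optional[str] = None   # match HTTP paths starting with this prefix
    port: Optional[int] = None          # match this frontend port only


def evaluate(rules: List[AclRule], port: int, path: str, default: str = "allow") -> str:
    """Return the action of the first rule whose conditions all match."""
    for rule in rules:
        if rule.port is not None and rule.port != port:
            continue
        if rule.path_prefix is not None and not path.startswith(rule.path_prefix):
            continue
        return rule.action
    return default
```

For example, `[AclRule("deny", path_prefix="/admin"), AclRule("deny", port=8443)]` blocks the admin area on every port and blocks port 8443 entirely, while other requests fall through to the default.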

Yes, Load Balancer supports both IPv4 and IPv6 addresses at the frontend. IPv6 can also be used to communicate between the Load Balancer and your backend servers.

No, it’s not required. You can use private Scaleway IPs on your backend servers if they are hosted in the same Availability Zone (AZ) as the Load Balancer.