How to use your Managed Inference deployment with a Private Network

This tutorial guides you through attaching a Private Network to your Managed Inference deployment. You can attach the Private Network during the initial setup or add it later to an existing deployment. Routing traffic between the Instances hosting your applications and the Managed Inference deployment over a Private Network keeps communication between these resources secure and low-latency.

Before you start

To complete the actions presented below, you must have:

- A Scaleway account logged into the console

- Owner status or IAM permissions allowing you to perform actions in the intended Organization

- A Managed Inference deployment

How to attach a Private Network to a Managed Inference deployment

Attaching a Private Network during deployment setup

- Click Managed Inference in the AI section of the Scaleway console side menu. A list of your deployments displays.

- From the drop-down menu, select the geographical region you want to manage.

- Navigate to the Deployments section and click Create New Deployment. The setup wizard displays.

- During the setup process, go to the Networking section.

- You will be asked to attach a Private Network. Two options are available:

- Attach an existing Private Network: Select from the list of available networks.

- Add a new Private Network: Choose this option if you need to create a new network.

- Confirm your selection and complete the deployment setup process.

Attaching a Private Network to an existing deployment

- Click Managed Inference in the AI section of the Scaleway console side menu. A list of your deployments displays.

- From the drop-down menu, select the geographical region you want to manage.

- Click a deployment name, or click the more icon > More info, to access the deployment dashboard.

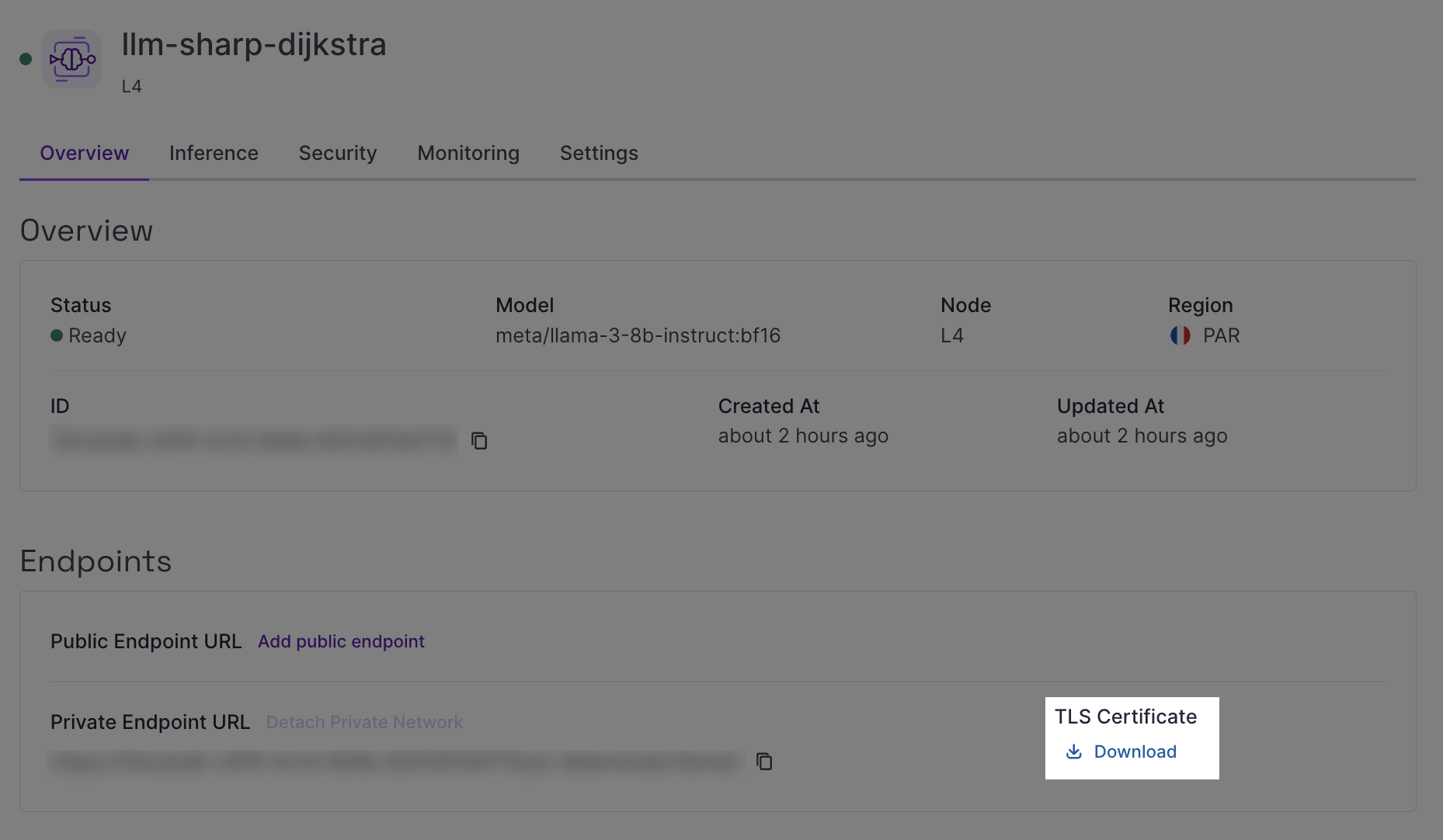

- Go to the Overview tab and locate the Endpoints section.

- Click Attach Private Network. Two options are available:

- Attach an existing Private Network: Select from the list of available networks.

- Add a new Private Network: Choose this option if you need to create a new network.

- Save your changes to apply the new network configuration.

Verifying the Private Network connection

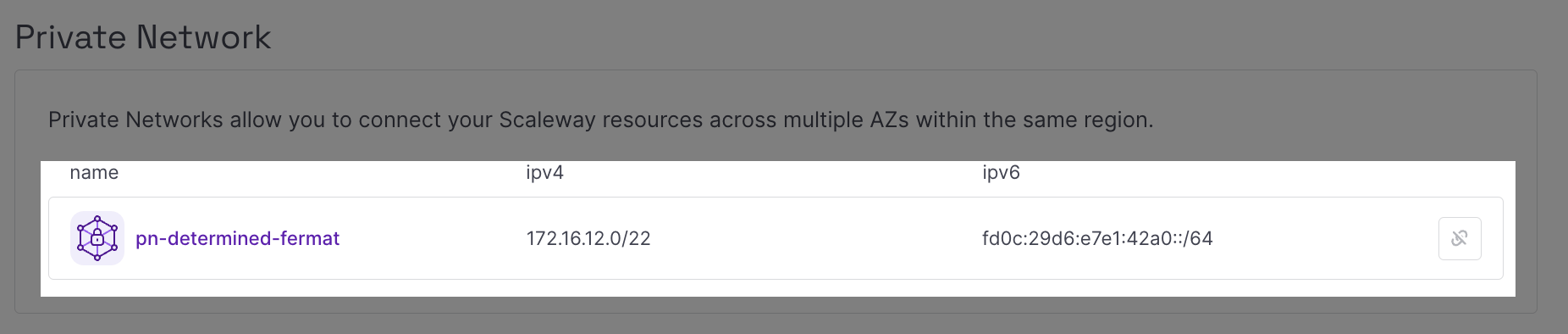

- After attaching a Private Network, go to the Security tab.

- You should see the Private Network connected to the deployment resource and its allocated IPs listed.
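Once the Private Network and its allocated IPs appear in the console, you may also want to confirm that an Instance on the same Private Network can actually open a connection to the deployment. The following is a minimal sketch, not an official Scaleway check. The function name `can_reach` is hypothetical, and port 443 is an assumption based on the endpoint being served over TLS. Pass the hostname from your private endpoint URL as `host`.

```python
import socket


def can_reach(host, port=443, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within timeout.

    Port 443 is an assumption (HTTPS); adjust it if your endpoint differs.
    """
    try:
        # create_connection resolves the host and attempts a TCP handshake.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and DNS resolution failures.
        return False


# Example (placeholder host, replace with your private endpoint hostname):
# print(can_reach("<PRIVATE_ENDPOINT_HOSTNAME>"))
```

A `False` result only tells you that the TCP connection failed. It does not distinguish between a DNS problem, a detached Private Network, or an Instance that is not attached to the same Private Network.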

How to send inference requests in a Private Network

- Create an Instance to host the inference application.

- Download the TLS certificate from your Managed Inference deployment. It is available from the Overview tab, in the Endpoints section.

- Transfer the TLS certificate to the Instance. You can use the scp (secure copy) command to securely transfer the certificate from your local machine to the Scaleway Instance.

  - Example command:

        scp -i ~/.ssh/id_ed25519 /home/user/certs/cert_file.pem root@51.115.xxx.yyy:/root

  - Replace the placeholders in the command above as follows:

    - -i ~/.ssh/id_ed25519: Path to your private SSH key.

    - /home/user/certs/cert_file.pem: Path to the certificate file on your local machine.

    - root: Your Scaleway Instance username (root for the default configuration).

    - 51.115.xxx.yyy: Public IP address of your Scaleway Instance.

    - :/root: Destination directory on the Scaleway Instance.

- Connect to your Instance using SSH.

- Open a text editor and create a file named inference.py using the following command:

      nano inference.py

- Paste the following Python code sample into your inference.py file:

      import requests

      PAYLOAD = {
          "model": "<MODEL_DEPLOYED>",  # Example: meta/llama-3.1-8b-instruct:fp8
          "messages": [
              {"role": "system", "content": "You are a helpful, respectful and honest assistant."},
              {"role": "user", "content": "How can I use large language models to improve customer service?"}
          ],
          "max_tokens": 500,
          "temperature": 0.7,
          "stream": False
      }

      # Add your IAM secret key if IAM authentication is enabled
      headers = {"Authorization": "Bearer " + "<SCW_SECRET_KEY>"}

      # verify points to the TLS certificate downloaded from the deployment
      response = requests.post(
          "<PRIVATE_ENDPOINT_URL>/v1/chat/completions",
          headers=headers,
          json=PAYLOAD,
          stream=False,
          verify='<CERT_NAME>.pem'
      )

      if response.status_code == requests.codes.ok:
          # Extract the generated message from the response data
          data = response.json()
          content = data['choices'][0]['message']['content']
          print(content)
      else:
          print("Error occurred:", response.text)

- Edit the script as follows:

  - PAYLOAD: Update the model name and inference parameters.

  - headers: Add your IAM secret key if IAM authentication is enabled.

  - response: Update with your private endpoint URL and certificate file path.

- Save your changes using CONTROL+O, then exit with CONTROL+X.

- Make your script executable using the following command:

      chmod +x inference.py

- Run the script:

      python3 inference.py
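The script in the steps above relies on the requests package, which may not be installed on a freshly created Instance. As a hedged alternative, the same call can be sketched with the Python standard library only. The function names `build_request`, `extract_content`, and `send` are hypothetical helpers introduced for illustration. The route, payload fields, and placeholders are taken from the script above.

```python
import json
import ssl
import urllib.request


def build_request(endpoint_url, model, prompt, secret_key=None):
    """Build a POST request for the chat completions route used above."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": 500,
        "temperature": 0.7,
        "stream": False,
    }
    headers = {"Content-Type": "application/json"}
    if secret_key:  # Only needed when IAM authentication is enabled
        headers["Authorization"] = "Bearer " + secret_key
    return urllib.request.Request(
        endpoint_url.rstrip("/") + "/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers=headers,
        method="POST",
    )


def extract_content(data):
    """Return the assistant message from a parsed chat completion response."""
    return data["choices"][0]["message"]["content"]


def send(request, cert_path, timeout=60):
    """Send the request, verifying TLS against the downloaded certificate.

    urlopen raises urllib.error.HTTPError for non-2xx responses.
    """
    context = ssl.create_default_context(cafile=cert_path)
    with urllib.request.urlopen(request, context=context, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))


# Example usage (placeholders as in the script above):
# request = build_request("<PRIVATE_ENDPOINT_URL>", "<MODEL_DEPLOYED>",
#                         "How can I improve customer service?", "<SCW_SECRET_KEY>")
# print(extract_content(send(request, "<CERT_NAME>.pem")))
```

Keeping request construction separate from sending makes it possible to inspect the URL, headers, and payload before any traffic reaches the private endpoint.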

Detaching a Private Network from a Managed Inference deployment

- Click Managed Inference in the AI section of the Scaleway console side menu. A list of your deployments displays.

- Click a deployment name, or click the more icon > More info, to access the deployment dashboard.

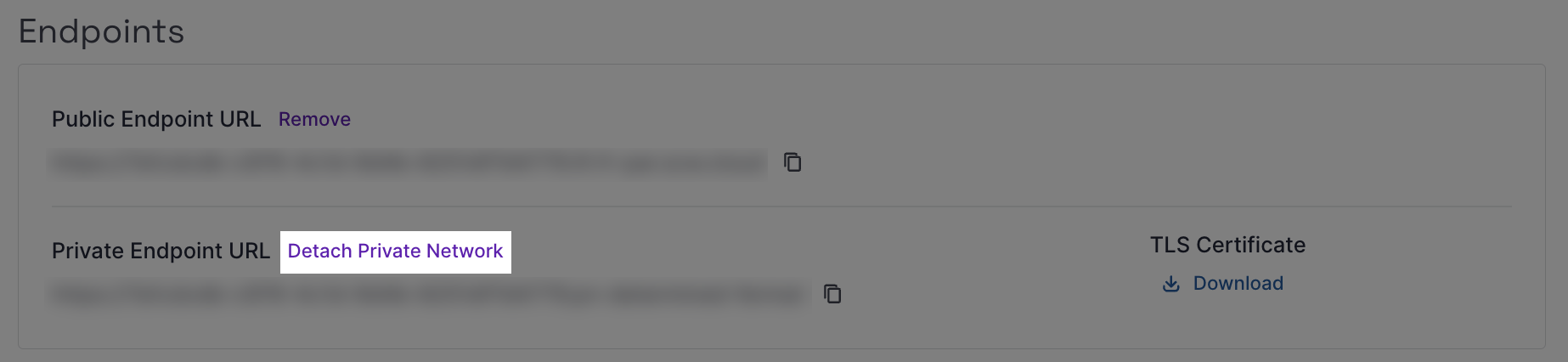

- Go to the Overview tab and locate the Endpoints section.

- Click Detach Private Network. A pop-up displays.

- Click Detach Private Network to confirm the removal of the private endpoint for your deployment.