Ansible Playbook for Apache installation step-by-step guide

Apache is one of the most widely used open-source web servers. This tutorial explains how to deploy and configure Apache on Scaleway Instances using Ansible automation.

Before you start

To complete the actions presented below, you must have:

- Owner status or IAM permissions allowing you to perform actions in the intended Organization

- Familiarity with Ansible for seamless execution

Configuring Ansible for Apache

Begin by setting up Ansible to communicate with your Scaleway Instances:

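- Create a dedicated directory in your home folder:

    mkdir ~/ansible-apache

- Navigate to the directory:

    cd ~/ansible-apache/

- Create an ansible.cfg file:

    nano ansible.cfg

- Populate it with the inventory file configuration. Current Ansible releases read the inventory key; the older hostfile key is no longer supported:

    [defaults]
    inventory = hosts

- Create and edit a hosts file:

    nano hosts

- Define your host information, replacing secondary_server_ip with the IP address of your Instance and username with your SSH user:

    [apache]
    secondary_server_ip ansible_ssh_user=username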
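Before writing the real playbook, it can help to confirm that Ansible reaches the hosts in the apache group. The following is a minimal sketch using the built-in ping module; the file name ping.yml is an assumption and not part of the original tutorial.

```yaml
---
# ping.yml (hypothetical file name): checks SSH connectivity and that
# a usable Python interpreter exists on every host in the apache group.
- hosts: apache
  gather_facts: no
  tasks:
    - name: check that Ansible can reach the host
      ping:
```

If it is saved next to ansible.cfg, it can be run with ansible-playbook ping.yml. A host that answers is reported as ok, and a host that cannot be reached over SSH is reported as unreachable.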

Creating a playbook

Utilize Ansible playbooks for configuration and deployment:

- Create a playbook named apache.yml:

    nano apache.yml

- Insert the following content:

    - hosts: apache
      tasks:
        - name: run echo command
          command: /bin/echo hello world

- Execute the playbook:

    ansible-playbook apache.yml
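By default, ansible-playbook reports only whether each task succeeded, not what the command printed. As a sketch of one way to see the output, the variant below stores the result in a variable and prints it with the debug module. The variable name echo_result is an assumption chosen for illustration.

```yaml
---
- hosts: apache
  tasks:
    - name: run echo command
      command: /bin/echo hello world
      # Keep the result of the command for the next task.
      register: echo_result
      # echo changes nothing on the host, so do not report a change.
      changed_when: false

    - name: show the command output
      debug:
        var: echo_result.stdout
```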

Installing Apache

Install Apache on your Scaleway Instance:

- Update apache.yml to use the apt module:

    nano apache.yml

- Replace the existing content with:

    ---
    - hosts: apache
      become: yes
      tasks:
        - name: install apache2
          apt:
            name: apache2
            update_cache: yes
            state: latest

- Run the playbook:

    ansible-playbook apache.yml --ask-become-pass
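Installing the package does not by itself state that the service should be running. On Debian and Ubuntu the apache2 package normally starts the service on installation, but if you want the playbook to enforce it, a task along these lines could be appended to the tasks list in apache.yml. This is an optional addition, not a step from the original tutorial.

```yaml
# Fragment: place under "tasks:" in apache.yml, after the install task.
- name: ensure apache2 is running and starts at boot
  service:
    name: apache2
    state: started
    enabled: yes
```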

Configuring Apache modules

Enable Apache modules as needed:

- Open apache.yml:

    nano apache.yml

- Include the module activation task and a restart handler:

    ---
    - hosts: apache
      become: yes
      tasks:
        - name: install apache2
          apt:
            name: apache2
            update_cache: yes
            state: latest

        - name: enable mod_rewrite
          apache2_module:
            name: rewrite
            state: present
          notify:
            - restart apache2

      handlers:
        - name: restart apache2
          service:
            name: apache2
            state: restarted

- Execute the playbook:

    ansible-playbook apache.yml --ask-become-pass
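A handler runs only when a task that notifies it reports a change, and it runs once at the end of the play even if several tasks notify it. As a sketch of how this scales to more than one module, the task below loops over a list of module names. The headers module is only an assumed example; substitute the modules you actually need.

```yaml
# Fragment: place under "tasks:" in apache.yml. It relies on the
# "restart apache2" handler defined in the playbook.
- name: enable several Apache modules
  apache2_module:
    name: "{{ item }}"
    state: present
  loop:
    - rewrite
    - headers   # assumed example, not part of the original tutorial
  notify:
    - restart apache2
```

On a second run, when all listed modules are already enabled, no task reports a change and Apache is not restarted.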

Configuring Apache options

Customize Apache configuration as per your requirements:

- Open apache.yml for editing:

    nano apache.yml

- Adjust the port settings. The VirtualHost pattern is written in single quotes because \* is not a valid escape sequence inside a double-quoted YAML string:

    ---
    - hosts: apache
      become: yes
      tasks:
        - name: install apache2
          apt:
            name: apache2
            update_cache: yes
            state: latest

        - name: enable mod_rewrite
          apache2_module:
            name: rewrite
            state: present
          notify:
            - restart apache2

        - name: apache2 listen on port 8081
          lineinfile:
            dest: /etc/apache2/ports.conf
            regexp: '^Listen 80'
            line: "Listen 8081"
          notify:
            - restart apache2

        - name: apache2 virtualhost on port 8081
          lineinfile:
            dest: /etc/apache2/sites-available/000-default.conf
            regexp: '^<VirtualHost \*:80>'
            line: "<VirtualHost *:8081>"
          notify:
            - restart apache2

      handlers:
        - name: restart apache2
          service:
            name: apache2
            state: restarted

- Run the playbook:

    ansible-playbook apache.yml --ask-become-pass

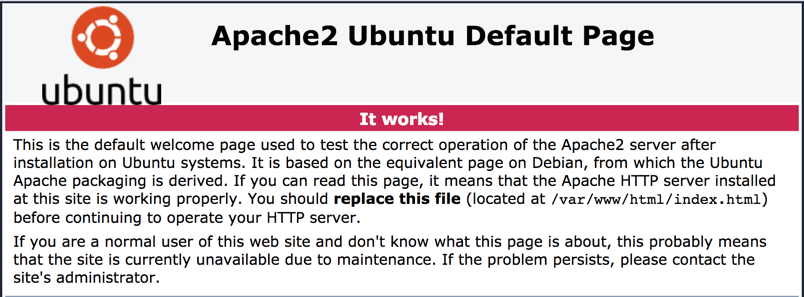

Once the playbook has run, Apache listens on port 8081 instead of port 80. To reach it from a web browser, append the port to the end of the URL: http://public_ip_address:8081/.
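The same check can be automated from Ansible instead of a browser. The sketch below is an optional extra, with verify.yml as an assumed file name. It waits for the port to open on the Instance and then requests the default page locally.

```yaml
---
# verify.yml (hypothetical file name): confirms Apache answers on 8081.
- hosts: apache
  tasks:
    - name: wait for Apache to listen on port 8081
      wait_for:
        port: 8081
        timeout: 30

    - name: request the default page
      uri:
        url: http://localhost:8081/
        status_code: 200
```

Both tasks run on the Instance itself, so they confirm that Apache is serving on the new port but not that the port is reachable from outside. A firewall or security group rule may still block external access.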
